
In How Split Testing Is Changing Consulting, Will sums up why high-priority SEO changes linger in developer backlogs, and how we’re addressing the problem with SearchPilot, our platform for testing and rolling out these recommendations without using our clients’ developer resources. With it, we can substantiate best practices like H1 changes, alterations to internal links, and rendering content with and without JavaScript.

Let’s get started with three tests you should try to see if you can increase organic traffic to your site.

1. Do H1 changes still work?

It won’t come as any surprise to SEOs that testing on-page elements can produce significant changes in rankings. That said, I’ve found that folks can put too much stock in on-page elements: we tend to get keyword tunnel vision and chalk up our rankings to keyword targeting alone. As a result, being able to test these assumptions on Google can help (dis)prove our hypotheses (and help us prioritize the right development work).

For iCanvas.com, prioritizing web development work is key: they’re a canvas print company with a robust team of developers, but like most companies, they have limited resources to test technical changes. As a result, dubious SEO-driven changes can’t be prioritized over user experience-driven ones.

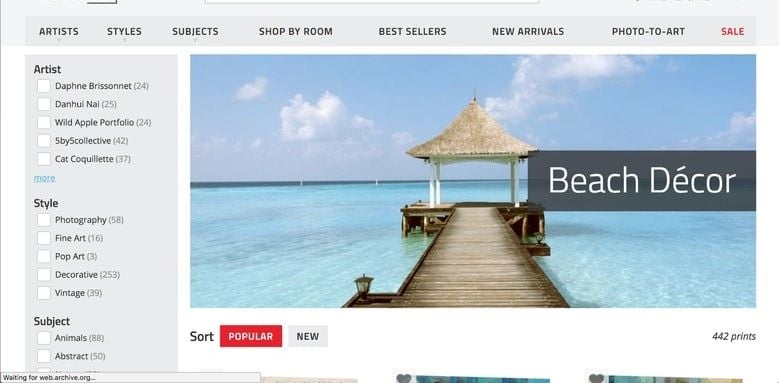

We did, however, notice that iCanvas was not targeting product type in their H1 tags. As a result, this is what a typical category page (like this one) looked like.

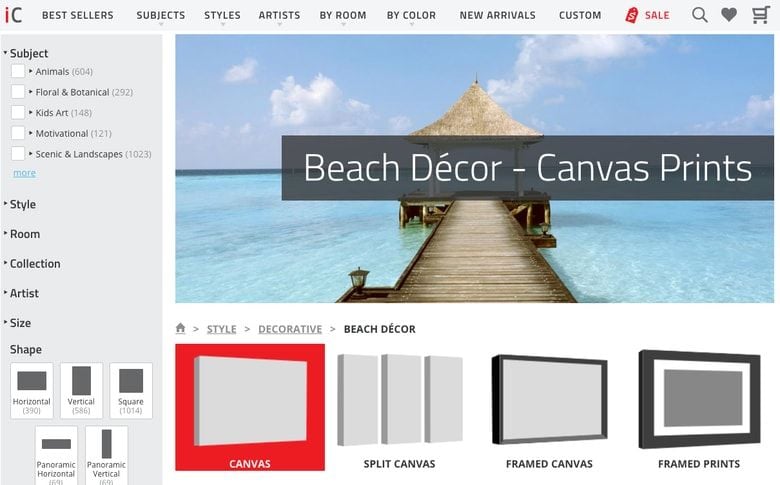

Here, the H1 tag was simply “Beach Decor.” iCanvas was communicating the style and subject of their products in their title tags (that product being canvas art prints), but that context was lost on a given category page. We hypothesized that if we told the world (and, more specifically, Google) what the products are (canvas prints), we would better meet users’ search intent, resulting in more organic search traffic to our test pages. Here’s what the H1 looked like for the test:

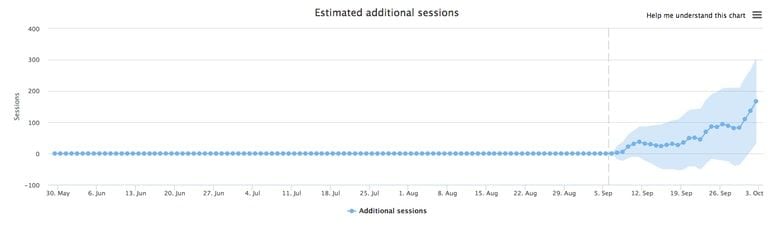

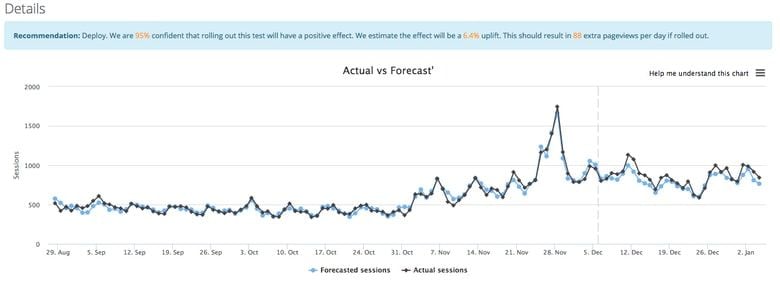
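To make the change concrete, here is a minimal sketch of the kind of H1 rewrite described above. The helper name `append_to_h1`, the exact suffix text, and the regex-based approach are all assumptions for illustration; this is not how SearchPilot applies changes, and a production version would use a real HTML parser.

```python
import re

# Matches the first <h1 ...>...</h1> pair; a simplification that ignores nested markup edge cases.
H1_PATTERN = re.compile(r"(<h1\b[^>]*>)(.*?)(</h1>)", re.IGNORECASE | re.DOTALL)


def append_to_h1(html: str, suffix: str = "Canvas Prints") -> str:
    """Append a product-type suffix to the first H1, unless it is already there.

    `suffix` is a hypothetical value; the wording used in the real test
    is only visible in the screenshots.
    """

    def rewrite(match: re.Match) -> str:
        open_tag, text, close_tag = match.groups()
        text = text.strip()
        if suffix.lower() in text.lower():
            # Already targeted: leave the heading text as it is.
            return f"{open_tag}{text}{close_tag}"
        return f"{open_tag}{text} {suffix}{close_tag}"

    return H1_PATTERN.sub(rewrite, html, count=1)


print(append_to_h1("<h1>Beach Decor</h1>"))
# → <h1>Beach Decor Canvas Prints</h1>
```

The check for an existing suffix keeps the rewrite idempotent, so a page that is processed twice does not end up with “Canvas Prints Canvas Prints.”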

After less than a month, we had our answer: our test pages with “canvas prints” appended to their H1 tags gained significantly more traffic than our control pages. How’d we measure that?

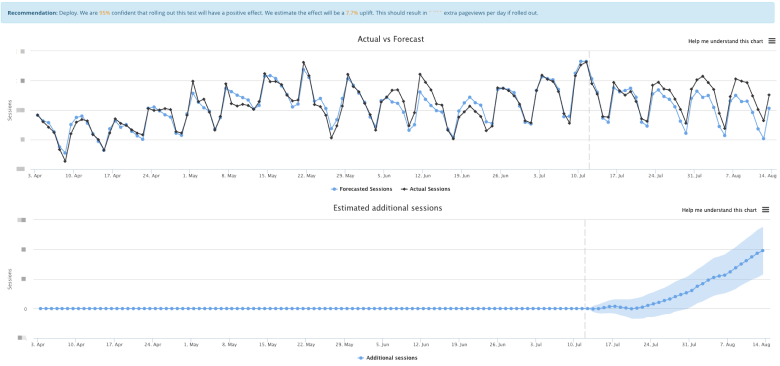

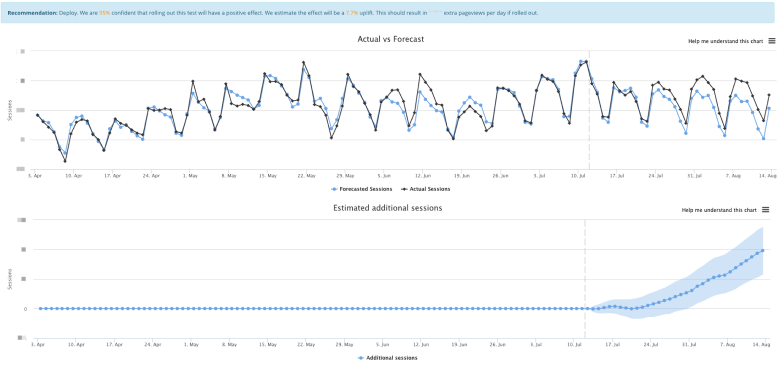

It helps to know how SearchPilot works (also check out Craig’s post, What is SEO Split Testing?). The most important thing to know in understanding the chart above is that SearchPilot observes the organic traffic your site captures in real time to develop a forecast for the organic traffic we’d expect to receive in the future. That’s how we got to the nice “7.7% uplift if rolled out” estimate. There is of course volatility: forecasts are rarely perfect, and ours isn’t an exception. That’s why we also check whether the result is statistically significant, that is, whether it falls outside the normal range of variance we’d expect.
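The forecast-then-compare idea can be illustrated with a deliberately simplified sketch. This is not SearchPilot’s model, which the post doesn’t detail. It assumes daily organic session counts for test and control pages, forecasts test traffic from the pre-test ratio between the two groups, and uses a bootstrap over days for a rough interval. The function name `estimate_uplift` and all of the numbers are hypothetical.

```python
import random


def estimate_uplift(pre_test, pre_control, post_test, post_control,
                    n_boot=2000, seed=0):
    """Estimate relative uplift of test pages against a control-based forecast.

    Assumption: without the change, test traffic would keep the same ratio
    to control traffic that it had before the test started.
    """
    ratio = sum(pre_test) / sum(pre_control)
    forecast = [ratio * c for c in post_control]  # expected test traffic per day
    uplift = sum(post_test) / sum(forecast) - 1.0

    # Bootstrap over days to get a rough 95% interval around the uplift.
    rng = random.Random(seed)
    days = list(zip(post_test, forecast))
    samples = []
    for _ in range(n_boot):
        resample = [rng.choice(days) for _ in days]
        actual = sum(a for a, _ in resample)
        expected = sum(f for _, f in resample)
        samples.append(actual / expected - 1.0)
    samples.sort()
    low = samples[int(0.025 * n_boot)]
    high = samples[int(0.975 * n_boot) - 1]
    return uplift, (low, high)


# Hypothetical daily sessions: two weeks before and two weeks during the test.
uplift, (low, high) = estimate_uplift(
    pre_test=[100, 96, 104, 99, 101, 98, 102] * 2,
    pre_control=[200, 195, 205, 198, 203, 197, 202] * 2,
    post_test=[109, 106, 113, 108, 111, 107, 110] * 2,
    post_control=[201, 196, 204, 199, 202, 198, 200] * 2,
)
print(f"uplift: {uplift:.1%}, 95% interval: {low:.1%} to {high:.1%}")
```

In this simplified framing, a result would count as significant only when the whole interval sits above (or below) zero. A real forecast would also need to handle seasonality and day-to-day correlation, which this sketch ignores.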

As a result, we were confident that this change would positively impact traffic to their site, so we declared this test a winner and rolled the change out to all of their category pages through SearchPilot. This meant that we didn’t have to hijack our developers’ work queue in order to see an immediate benefit. Additionally, we had evidence we could bring to our devs instead of relying exclusively on the promise of following “best practices” in keyword targeting.
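For readers curious how pages end up in test and control groups in the first place, one common approach is deterministic hashing of the URL. The sketch below is an assumption for illustration only: the post doesn’t describe how SearchPilot buckets pages, and `assign_bucket` and the `salt` value are made-up names.

```python
import hashlib


def assign_bucket(url_path: str, salt: str = "h1-test") -> str:
    """Deterministically assign a page to 'test' or 'control'.

    Hashing the path (plus a per-experiment salt) means the same page
    always lands in the same group, with no lookup table to maintain.
    """
    digest = hashlib.sha256(f"{salt}:{url_path}".encode("utf-8")).hexdigest()
    return "test" if int(digest, 16) % 2 == 0 else "control"


# Hypothetical category paths.
for path in ["/beach-decor", "/abstract-art", "/animal-prints"]:
    print(path, assign_bucket(path))
```

A plain hash split like this ignores traffic levels, so in practice you would also want to check that the two groups have comparable historical traffic before trusting the comparison.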

2. Will altering internal links give you a big payoff?

Testing changes to internal links is often an ill-defined endeavor. Do you measure changes to PageRank (dubbed local PageRank by Will Critchlow)? Should you look at your log files to observe changes to Google’s crawling behavior?

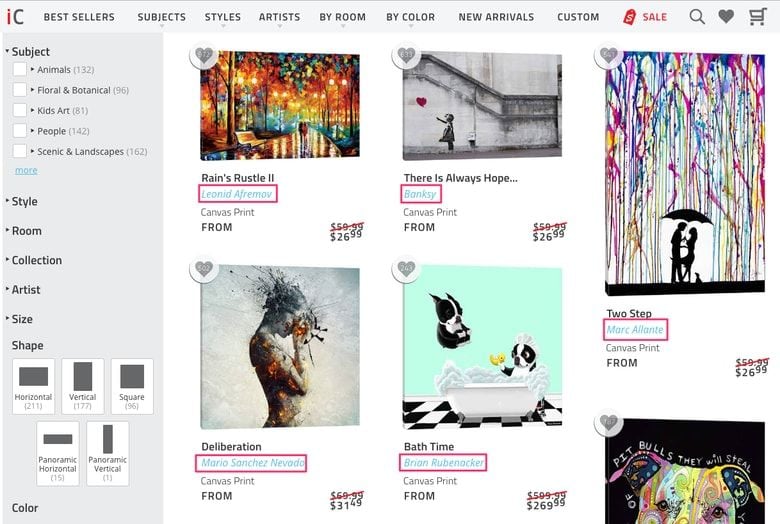

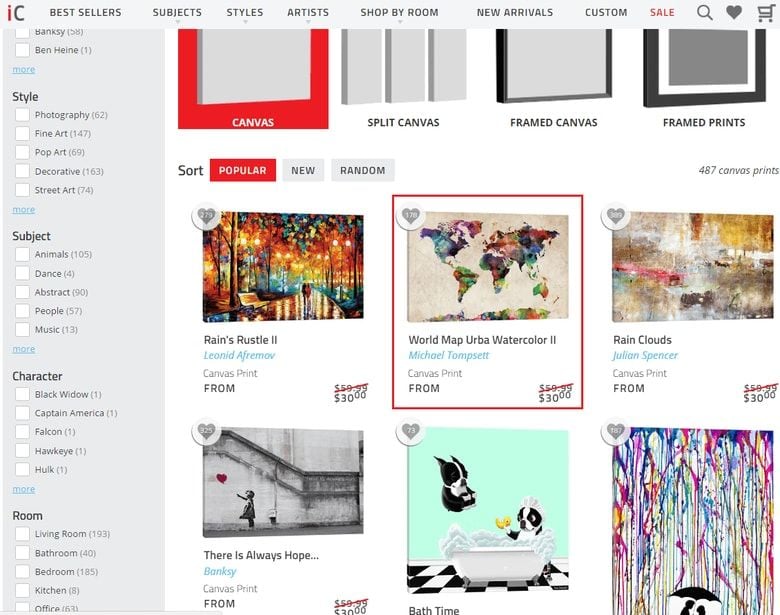

In our case, iCanvas had a somewhat simpler internal linking issue we wanted to address: self-referential links. As an art company, it’s essential to attribute the creator’s name to their work of art.

As a result, they had made the decision to include a link to the artist of the work on every product listing.

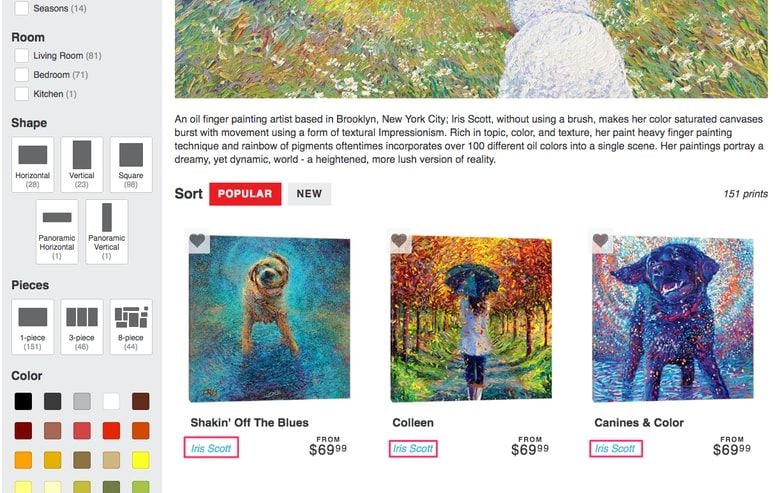

For instance, in the above screenshot of a category page, you can see that each product has its artist listed, and those artists’ names are linked to pages listing all of their available artworks on iCanvas. While this application made sense for category pages where various artists’ products are featured alongside each other, it resulted in redundant links on those individual artists’ pages.

Each of these artist attributions, on the artist’s own category page, was linking back to the page it appeared on (thus: self-referential links). Our hypothesis was that if we removed these redundant links, we’d better consolidate our PageRank. We knew this change could have a dramatic impact on artists’ products, resulting in more organic traffic flowing to their product pages. Our test, however, would measure the impact on organic traffic to our test group of artist pages. So how did it turn out?

As it turned out, our test was a success: artist pages in our test group received more organic traffic than our control pages. We were again able to test something that would’ve been touted as “best practice” before rolling it out sitewide, without manually setting up test and control groups and measuring the results ourselves. Once we saw the positive impact (less than a month later), we rolled this change out sitewide, and we had the validation we needed to get the necessary development work prioritized.
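The link change in this test can be sketched in a few lines. The example below unwraps any anchor whose `href` resolves to the page it appears on and leaves every other link alone. The function name, the example URLs, and the regex-based parsing are assumptions for illustration, not the actual implementation used on iCanvas.

```python
import re
from urllib.parse import urldefrag, urljoin

# Simplified anchor matcher; a production version would use an HTML parser.
ANCHOR = re.compile(
    r'<a\b[^>]*\bhref=["\']([^"\']*)["\'][^>]*>(.*?)</a>',
    re.IGNORECASE | re.DOTALL,
)


def _normalize(url: str) -> str:
    """Drop the fragment and any trailing slash so equivalent URLs compare equal."""
    return urldefrag(url).url.rstrip("/")


def unlink_self_references(html: str, page_url: str) -> str:
    """Replace links that point back to `page_url` with their plain inner text."""
    target = _normalize(page_url)

    def rewrite(match: re.Match) -> str:
        href, inner = match.group(1), match.group(2)
        if _normalize(urljoin(page_url, href)) == target:
            return inner  # keep the artist name, drop the redundant link
        return match.group(0)

    return ANCHOR.sub(rewrite, html)


# Hypothetical artist page and markup.
page = "https://example.com/artists/jane-doe/"
snippet = (
    '<p>by <a href="/artists/jane-doe/">Jane Doe</a> and '
    '<a href="/artists/john-roe/">John Roe</a></p>'
)
print(unlink_self_references(snippet, page))
# → <p>by Jane Doe and <a href="/artists/john-roe/">John Roe</a></p>
```

Resolving each `href` against the page URL first means relative and absolute links to the same page are treated alike.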

3. How good is Google at crawling JavaScript?

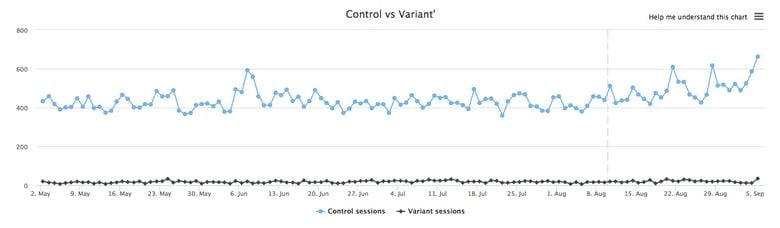

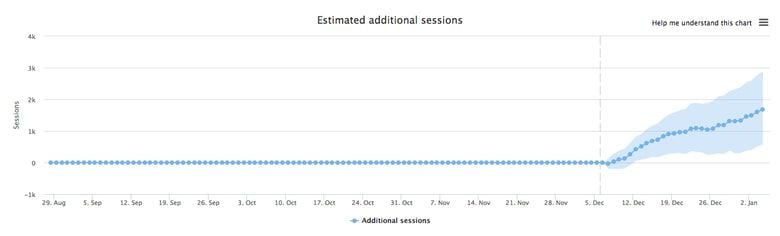

If you follow our blog, you’ve already read about how we tested Google’s ability to crawl and render JavaScript. We posited that, because Google wasn’t reliably displaying iCanvas’ products in its Fetch and Render tool, iCanvas’ category and product pages would receive more organic traffic if we used a CSS trigger to load their products instead of relying exclusively on JavaScript.

Above is a screenshot of what we saw (and, presumably, what Googlebot saw) in Fetch and Render of a category page.

After our tweak, however, we plugged one of our test URLs into Fetch and Render, and we could finally produce what users see in their browsers with JS enabled. But did it actually result in additional organic traffic to our test pages?

As you can see above, it did. Based on the performance of our test pages, iCanvas would see an extra 88 pageviews daily with their products triggered through a line of CSS instead of JS. Measuring the impact of this relatively simple change could have taken much longer than this month-long experiment. By the end, though, we were ready to roll this out sitewide to ensure that all iCanvas products were crawlable and discoverable.

Split testing something as simple as on-page SEO can produce meaningful traffic changes that’ll allow you to validate best practices and get the evidence your stakeholders (and developers) need to buy into your suggestions.