We have been publishing SEO A/B test results for some time now, and for each one we have polled our audience on whether the change in question was a good idea. For the average case study, only 35% of poll respondents get it right.

It’s really no surprise that SEO is so hard:

1. All complex optimisation problems are hard

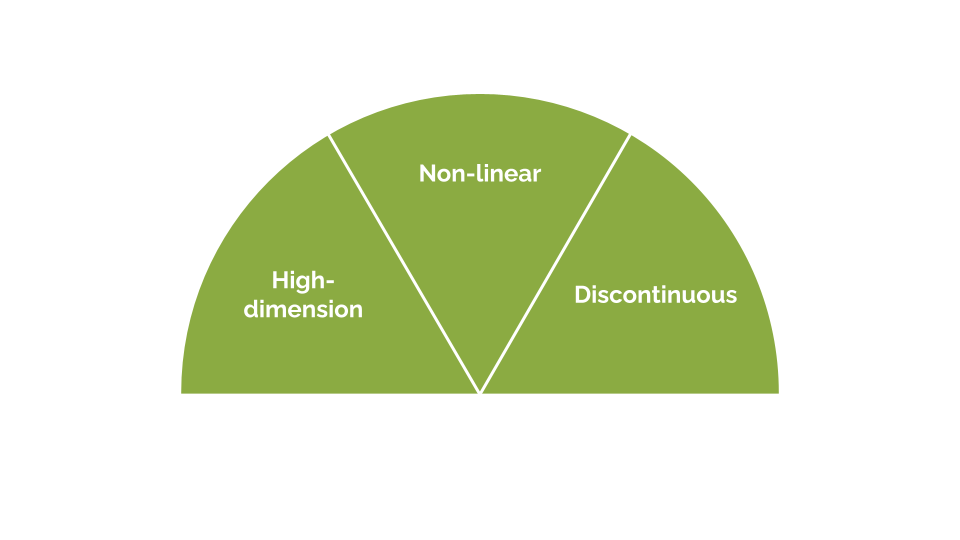

For at least the last two decades, the algorithms underpinning rankings have been high-dimensional, non-linear, discontinuous functions. These are not things humans are well designed to understand, never mind compute in their heads.

If you want to know more about what I mean by any of those terms, check out my 2017 presentation, “Knowing ranking factors won’t be enough: how to avoid losing your job to a robot”. The quick version is:

- There are a lot of factors that combine in surprising ways

- Just because increasing a factor a little improves rankings a little, it doesn’t follow that increasing it a lot will improve rankings a lot (or even at all!)

- Sometimes small changes can have outsized impacts
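The toy sketch below makes those three points concrete. Everything in it is hypothetical: the factor names (`keyword_density`, `load_time_s`), the peak at 0.03, and the 2.5-second threshold are invented for illustration and are not any search engine’s algorithm. It only shows how a function can reward a small increase, punish a large one, and jump on a tiny change.

```python
def toy_score(keyword_density: float, load_time_s: float) -> float:
    """A made-up two-factor 'ranking score', for illustration only."""
    # Non-linear (hypothetical): relevance peaks at a density of 0.03
    # and falls off on either side of it.
    relevance = 1.0 - ((keyword_density - 0.03) / 0.03) ** 2
    # Discontinuous (hypothetical): a fixed bonus that switches on
    # below an arbitrary load-time threshold.
    speed_bonus = 0.5 if load_time_s < 2.5 else 0.0
    return relevance + speed_bonus


if __name__ == "__main__":
    # A little more of the factor helps...
    print(toy_score(0.01, 3.0), "->", toy_score(0.02, 3.0))
    # ...but a lot more hurts.
    print(toy_score(0.02, 3.0), "->", toy_score(0.08, 3.0))
    # A tiny change in load time produces a jump in score.
    print(toy_score(0.02, 2.51), "->", toy_score(0.02, 2.49))
```

A real ranking function has far more dimensions than two, which is exactly why intuition built on one factor at a time breaks down.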

2. They (used to) call it competitive webmastering for a reason

Even if you could figure out exactly what you needed to do to perfect your landing page, everyone else is trying to do the same. I don’t subscribe to the view that SEO as a whole is zero-sum because, done well, many of its activities create value: for example, making great new content, providing great results for queries that previously had lower-quality results, and, overall, creating “searcher surplus”. However, while these activities might create value for searchers, they are closer to zero-sum when considered only across the population of competing websites. If you move up the rankings, or jump in with new content, others inevitably move down. (You can read more about my thoughts on zero-sum thinking in SEO here.)

As a result, with webmasters around the world competing to rank, it’s inevitably going to be hard. If it were easy to rank #1, everyone would be doing it.

3. Machine learning makes everything less understandable

I wrote recently, while discussing whether the future of SEO looks more like CRO, about how the shift from rules-based rankings (“these signals, weighted like this, tell us which site is most relevant and authoritative for this query”) to objective-based rankings (“dear computer, create the rank ordering most likely to satisfy searcher intent”) changes things for SEOs.

It means that signals and their respective weights are more likely to vary across different kinds of queries and industries. That alone makes things harder, but two other changes have an even greater impact:

- We have no reason to expect all the “learned” weightings to relate to human-understandable concepts, so we should not necessarily expect to have any intuition about how to improve some of the factors, even if we could peek inside the algorithm

- Even the engineers building the system are unlikely to know how it will respond to tweaks to features, so we should not expect to be able to derive rules of thumb about what will work and what won’t

You can read the full post here.

It is hard. But how hard?

I’ve tried before to quantify how good SEO professionals are at understanding ranking algorithms by asking them to figure out which of two given pages ranked better for a specified query. The results were not good. In a study with thousands of respondents, even experienced SEOs were no better than a coin flip.

In theory, if you could answer all the pairwise combinations, this question would be equivalent to “why does this page rank where it does?”. Even that isn’t quite the same as real-world SEO problems, though, which typically take the form “is this specific change a good idea?” (or, of course, in reality, “which change should we make next?”, which is equivalent to rating a wide range of changes and comparing their impacts to their costs).
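The “all the pairwise combinations” equivalence can be sketched in a few lines: given a pairwise oracle that answers “does page a outrank page b?”, an ordinary comparison sort rebuilds the whole rank order. The oracle `ranks_higher` and the `HIDDEN_SCORES` it consults are hypothetical stand-ins for the real ranking function, which is precisely the thing nobody can compute in their head.

```python
from functools import cmp_to_key

# Hypothetical hidden scores standing in for the real (unknowable) ranking function.
HIDDEN_SCORES = {"page-a": 0.42, "page-b": 0.91, "page-c": 0.17, "page-d": 0.66}


def ranks_higher(a: str, b: str) -> bool:
    """Pairwise oracle (hypothetical): True if page a outranks page b."""
    return HIDDEN_SCORES[a] > HIDDEN_SCORES[b]


def compare(a: str, b: str) -> int:
    # Negative means a sorts first, i.e. a ranks higher.
    if ranks_higher(a, b):
        return -1
    if ranks_higher(b, a):
        return 1
    return 0


def full_ranking(pages: list[str]) -> list[str]:
    """Rebuild the full rank order using only pairwise answers."""
    return sorted(pages, key=cmp_to_key(compare))


print(full_ranking(list(HIDDEN_SCORES)))
# ['page-b', 'page-d', 'page-a', 'page-c']
```

This only works when the pairwise answers are consistent (if a beats b and b beats c, then a beats c), which is one more reason a coin-flip accuracy on the pairwise task is bad news for explaining full rankings.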

A test for that kind of question was harder to design, but we realised that, at SearchPilot, we could not only regularly publish our SEO test results (sign up to the email list here to hear when new results are published) but also poll our audience on Twitter before releasing the results of each test, to see what they thought would happen.

We’ve now done this for dozens of tests: we outlined the change we were proposing, asked our audience what they thought the impact on organic search performance would be, and compared their answers to the real results. For the average case study, only 35% of respondents got it right. You can read the case studies for yourself here.

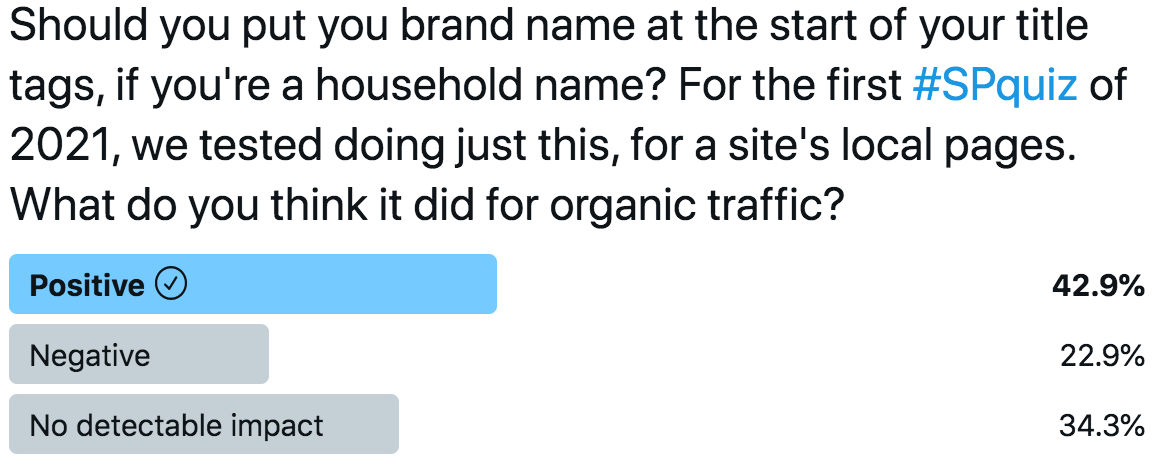

This is what it often looks like when we canvas our audience’s opinion:

With some variation of “positive / inconclusive / negative” in most polls, this is remarkably similar to the coin flip result - roughly a third of respondents getting it right out of a roughly three-way poll.
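The comparison with chance is simple arithmetic: with k equally plausible options, a uniformly random guess is right with probability 1/k, so 1/2 for a coin flip and 1/3 for a three-way poll. The quick simulation below assumes uniformly random outcomes and uniformly random voters (both simplifications, since real test outcomes and real polls aren’t uniform) and prints pure guessing alongside the 35% average from our polls.

```python
import random


def random_guess_accuracy(n_options: int, n_polls: int, seed: int = 0) -> float:
    """Fraction of polls a uniformly random guesser gets right (simplified model)."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(n_polls):
        answer = rng.randrange(n_options)  # the true test outcome
        guess = rng.randrange(n_options)   # an uninformed vote
        correct += answer == guess
    return correct / n_polls


OBSERVED = 0.35  # average share of respondents who got it right, from the text
print(f"theoretical chance: {1 / 3:.3f}")
print(f"simulated chance:   {random_guess_accuracy(3, 100_000):.3f}")
print(f"observed average:   {OBSERVED:.3f}")
```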

I’ve previously talked about this as “even experienced SEOs are no better than a coin flip” at the rankings task. In the context of case studies, maybe we need to extend this to “most SEOs are no better than a random rock-paper-scissors guess”.

Image by Fadilah N. Imani.

It’s hard in theory as well as practice

The last conference I spoke at before the pandemic(!) was SearchLove London in late 2019. My talk was entitled “Misunderstood concepts at the heart of SEO” (video here for DistilledU subscribers) and was based on a series of polls I ran on Twitter. I talked about how common it was, even in those rare areas of SEO where there are categorical right and wrong answers, for misinformation and incorrect answers to spread on the web.

I talked about how:

- 80%+ of the answers to robots.txt crawling questions were incorrect

- 70%+ of the answers to questions about frequently-cited keyword algorithms like TF-IDF were incorrect

- 70%+ of the answers to questions about link flow algorithms like PageRank were incorrect

- 70%+ of the answers to questions about JavaScript and cookie handling were incorrect

I want to remind myself, and you, at this stage that my point is that this stuff is hard, not that people who don’t know these things can’t be SEOs, can’t be effective, or worse. I got some of my own questions wrong before I looked up the answers.

Your regular reminder:

And while we’re at it, another regular reminder of my opinion on whether you need a university degree to succeed in marketing / SEO:

My opinion is a resounding “no” (more here). I say this with love to folks who are good at academic tests and who were lucky enough to turn that into qualifications (myself included): that’s nice, but it isn’t required for success in SEO, and quite possibly isn’t even correlated with it.

A surprising number of changes hurt

We often see changes that we thought couldn’t possibly be damaging turn out to have significant negative impacts. This applies to technical best practices as well as to seemingly strong content hypotheses. Given how hard it is to evaluate and forecast SEO changes, perhaps this shouldn’t be a surprise, yet it often surprises both us and our audience.

I’ve written before about the damaging impact of repeatedly rolling out changes that are what I called “marginal losses”. I think that making these kinds of changes and failing to detect their negative impacts is one of the biggest causes of lagging SEO performance on large sites. Without controlled testing, it can be extremely difficult to detect when a change has this kind of small negative impact, but the cumulative effect can be substantial and can outweigh all the positive activities a team carries out over the same time period.
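The arithmetic behind “marginal losses” is compounding. The sketch below is a purely hypothetical illustration: the effect sizes are invented, not SearchPilot results, and it assumes (as a simplification) that each change scales organic traffic multiplicatively and independently. Under those assumptions, a handful of undetected small losses is enough to wipe out a genuine win.

```python
from math import prod


def cumulative_effect(uplifts: list[float]) -> float:
    """Combine relative changes multiplicatively (e.g. -0.015 means -1.5%)."""
    return prod(1.0 + u for u in uplifts) - 1.0


# Hypothetical year: one detected +5% win and five undetected -1.5% losses.
changes = [0.05] + [-0.015] * 5
print(f"net change in organic traffic: {cumulative_effect(changes):+.1%}")
```

Each individual loss is small enough to disappear into normal traffic noise, which is why controlled testing matters more than the size of any single change.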

The combination of surprising negative effects being extremely common and hard to detect could be why so many established sites see stagnating organic search performance.

What should you do?

It’s clear that these trends are not about to reverse, so any successful strategy needs to work with these challenges. The flip side of the “competitive webmastering” challenge is that you don’t need to be perfect; you can get ahead by designing an approach that outstrips your competition. The difference between a lagging approach and consistent outperformance can come from identifying the winning and losing changes.

This is why, for large enough sites, we believe that controlled testing on your own website is the best way to operate, and this is why we are on a mission to prove the value of SEO by empowering agile changes and testing their impact.

[If your site is suitable for running SEO A/B tests, with at least one site section of hundreds (or more) pages sharing the same template and 30,000+ organic sessions per month to pages in that section, we’d love to speak. You can register to see our platform in action here.]

Of course, many sites (including our own, here at SearchPilot!) don’t fit these criteria. So if you’re just starting out, work in an industry like B2B SaaS whose websites don’t generally suit SEO testing, or can’t test for any other reason, my best suggestions are:

- Register here to get our case studies in your email every other week - despite the limitations on general transferability of insights, test results from experiments carried out on real websites in the real world are the best learning resource we have

- Get involved on Twitter and vote in our next #SPQuiz to test yourself and hone your instincts

More generally, you need to remember that there is a lot of incorrect information on the internet. In the talk I mentioned above, I phrased it as:

So my recommendation for approaching these problems and improving your recommendations (or coming up with better hypotheses, if you are able to test) is to build your own foundational understanding as much as you can: learn the theoretical foundations, learn from real-world experiments, and do your own testing.

Comments? Questions? Thoughts? Drop me a line on Twitter. I’m happy to discuss this stuff all day.