Do you already have an A/B testing tool like Optimizely, VWO, or maybe Adobe Target for your Conversion Rate Optimisation (CRO) A/B tests? Have you ever asked yourself, “Why can't I just use the same tool for my SEO tests?”

It's a fair question, but the short answer is that you can't: your standard CRO tool isn't built for SEO testing. These tools are brilliant for testing user behaviour, but they work fundamentally differently from platforms designed specifically for SEO experiments.


Difference #1: Splitting Users vs. Splitting Pages (How We Bucket)

This is probably the biggest conceptual hurdle.

How CRO bucketing works

CRO tools split users. When someone visits your site, the tool randomly assigns them to either a control group (A) or a variant group (B). That person then consistently sees version A or version B of every tested page.
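To make user-level bucketing concrete, here is a minimal sketch. It assumes the tool has a persistent visitor ID (for example from a first-party cookie) and hashes it together with the experiment name, so assignment looks random across visitors but stays fixed for each person. `assign_user`, the ID format, and the 50/50 default are illustrative assumptions, not how any particular CRO tool is implemented.

```python
import hashlib


def assign_user(visitor_id: str, experiment: str, variant_share: float = 0.5) -> str:
    """Return "A" (control) or "B" (variant) for one visitor.

    Hypothetical helper: the input is *who* is visiting, so the same person
    sees the same version on every tested page.
    """
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    # Scale the first 32 bits of the hash to a number in [0, 1).
    position = int(digest[:8], 16) / 2**32
    return "B" if position < variant_share else "A"


# The same visitor always lands in the same group for this experiment.
group = assign_user("visitor-123", "checkout-button-test")
print("visitor-123 sees version", group)
```

Hashing means the assignment can be recomputed on every request rather than stored, but what it depends on is the visitor, which is exactly what an SEO test can't rely on.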

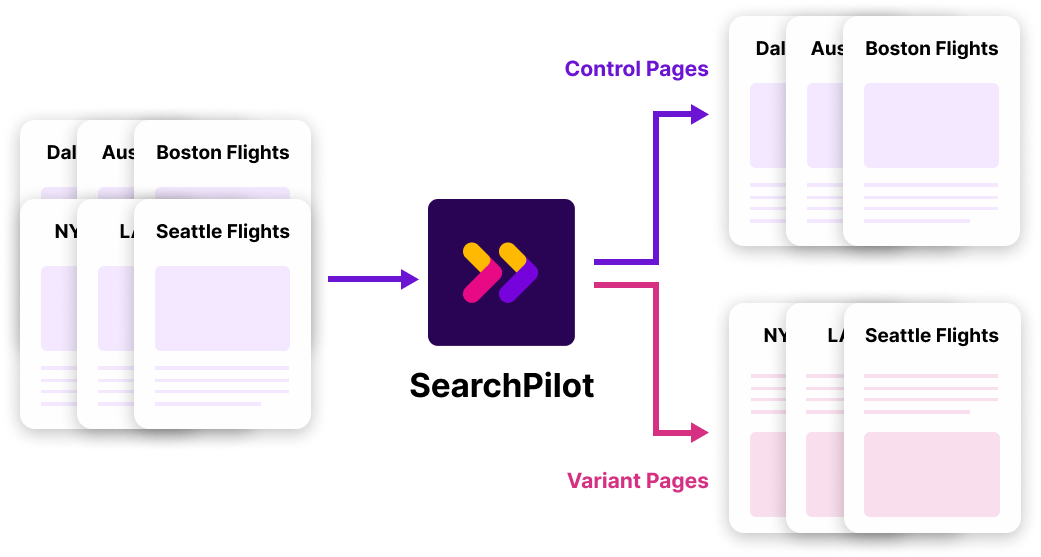

How SEO bucketing works

With SEO testing, you split pages instead of people. We take a group of similar pages (like a set of product category pages) and divide the pages themselves into control (A) and variant (B) groups. Here’s the crucial part: every visitor to a given URL, whether a human user or Googlebot, sees the version assigned to that URL. You can't show Google one version of a page on one visit and a different version on the next. For SEO, each URL needs one stable version (either A or B) visible to search engines at any given time.
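Here is the same idea at page level, as a minimal sketch. The only input is the page URL (plus an experiment name), so nothing about the visitor, human or Googlebot, can change which version a URL serves. `assign_page`, `split_pages`, and the example URLs are hypothetical; a real SEO testing platform would likely also try to balance traffic between the two groups, which this simple hash split does not attempt.

```python
import hashlib


def assign_page(url: str, experiment: str) -> str:
    """Return "A" (control) or "B" (variant) for one page URL.

    Hypothetical helper: only the URL is hashed, never the visitor, so every
    request for this URL gets the same version.
    """
    digest = hashlib.sha256(f"{experiment}:{url}".encode("utf-8")).digest()
    return "B" if digest[0] % 2 else "A"


def split_pages(urls: list[str], experiment: str) -> dict[str, list[str]]:
    """Divide a group of similar pages into control and variant buckets."""
    buckets: dict[str, list[str]] = {"A": [], "B": []}
    for url in urls:
        buckets[assign_page(url, experiment)].append(url)
    return buckets


category_pages = [f"/category/{i}" for i in range(10)]
buckets = split_pages(category_pages, "category-intro-text")
print(len(buckets["A"]), "control pages;", len(buckets["B"]), "variant pages")
```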

Difference #2: Making the Changes (Client-Side vs. Server-Side)

How the test variation is actually shown makes a huge difference to search engines.

- CRO Tools Often Use Client-Side Changes: Many CRO tools rely on JavaScript running in the user's browser (client-side) to make the change. The original page loads first, then the script kicks in and modifies it to show the variant.

- Why This is a Problem for SEO: Search engines like Google can process some JavaScript, but they aren't perfect at it. Googlebot might see the original page before the JavaScript changes kick in, or it might not render the change reliably. If Google doesn't see your tested change, well, your SEO test is effectively invalid. We also know that the crawlers used by LLM tools like ChatGPT don’t execute JavaScript at all, so they definitely won’t see your change. Plus, client-side swapping can cause a visual flicker for users or hurt Core Web Vitals.

- SEO Testing Needs Server-Side: For SEO tests, changes must be made server-side. This means the modified HTML is sent directly from your server. When the page arrives in the browser, the change has already been made. This guarantees search engines see the exact version you intend to test and avoids client-side rendering issues.
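A minimal server-side sketch, assuming a hypothetical page template and a hypothetical test that rewrites the `<title>` tag on variant pages. Bucketing and the change both happen before the response is built, so the HTML that leaves the server already contains the tested version and no script has to run in the browser. `in_variant`, `render_page`, and the title wording are illustrative, not part of any real product.

```python
import hashlib
import html


def in_variant(url: str, experiment: str) -> bool:
    """Hypothetical page-level bucketing: hash the URL, never the visitor."""
    return hashlib.sha256(f"{experiment}:{url}".encode("utf-8")).digest()[0] % 2 == 1


def render_page(url: str, product_name: str, experiment: str = "title-test") -> str:
    """Build the full HTML on the server, with the variant change already applied."""
    title = f"{product_name} | Example Store"
    if in_variant(url, experiment):
        # Hypothetical change under test: a different title tag for variant pages.
        title = f"Buy {product_name} Online | Example Store"
    # The response body already contains the chosen title, so a crawler that
    # never runs JavaScript still sees the tested version.
    return (
        "<!doctype html><html><head>"
        f"<title>{html.escape(title)}</title>"
        "</head><body>"
        f"<h1>{html.escape(product_name)}</h1>"
        "</body></html>"
    )


print(render_page("/products/blue-widget", "Blue Widget"))
```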

Difference #3: Analysing the Impact (Direct Comparison vs. Causal Modelling)

Measuring the results works differently too.

- CRO Analysis is Usually Direct: Because you've cleanly split users into A and B groups, you can directly compare metrics like conversion rates between those two populations. Standard statistical tests (like t-tests) work well here.

- SEO Analysis is More Nuanced: Since we split pages, and there’s only one Googlebot, SEO testing needs to use time-based analysis. Essentially, we look at the historical performance of the pages before the test started. We use this data (and the behaviour of the control group during the test) to predict how the variant pages would have performed during the test period if no change had been made. Then, we compare this prediction to the actual organic traffic the variant pages received. If there's a statistically significant difference between the actual traffic and the predicted traffic, we can confidently attribute that difference to the SEO change we made. I’ve simplified this here, but you can read more about the maths behind SearchPilot here: An update on Split Optimizer: SearchPilot’s neural network model.
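The time-based comparison can be sketched in a few lines. SearchPilot's actual model is the neural network covered in the Split Optimizer article; this sketch deliberately swaps in something much simpler to show the shape of the idea: learn the pre-test ratio between variant and control traffic, combine it with the control group's in-test traffic to forecast the variant, then compare actual traffic with the forecast. The function name, the ratio forecast, the 1.96 threshold, and the traffic numbers are all assumptions, and the z-score treats days as independent, which real traffic isn't.

```python
from math import sqrt
from statistics import mean, stdev


def counterfactual_uplift(control_pre, variant_pre, control_test, variant_test, z_crit=1.96):
    """Compare variant traffic with a forecast of what it would have been.

    Simplified, hypothetical stand-in for a real forecasting model:
      1. Before the test, learn how variant traffic tracks control traffic
         (here: the ratio of their totals).
      2. During the test, forecast the variant as ratio * control traffic.
      3. Compare actual variant traffic with that forecast.
    """
    ratio = sum(variant_pre) / sum(control_pre)

    # Forecast error before the test estimates day-to-day noise.
    noise = stdev([v - ratio * c for c, v in zip(control_pre, variant_pre)])
    if noise == 0:
        raise ValueError("pre-test data has no variation to estimate noise from")

    predicted = [ratio * c for c in control_test]
    daily_diff = [actual - p for actual, p in zip(variant_test, predicted)]
    uplift = sum(daily_diff) / sum(predicted)

    # Crude z-score that treats each day as independent (real traffic isn't).
    z = mean(daily_diff) / (noise / sqrt(len(daily_diff)))
    return {"uplift": uplift, "z": z, "significant": abs(z) > z_crit}


# Made-up daily organic sessions, for illustration only.
result = counterfactual_uplift(
    control_pre=[100, 110, 95, 105, 100, 98, 102, 107, 93, 101],
    variant_pre=[103, 108, 96, 104, 102, 95, 102, 108, 91, 102],
    control_test=[100, 104, 99, 101, 103],
    variant_test=[110, 114, 109, 111, 113],
)
print(result)  # uplift ≈ 0.099 (about +10%), significant=True
```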

The Bottom Line

Your CRO A/B testing tool is likely fantastic for optimising user journeys and conversions, so keep using it for that! But for testing changes intended to improve search engine performance, it's simply the wrong tool for the job. The way CRO tools bucket visitors, implement changes, and analyse results makes them unsuitable for SEO testing.

If you’re interested in how SEO and CRO testing can work together, here are some other resources:

- Optimize to convert: How CRO testing and SEO can work hand in hand

- What is Full-Funnel Testing? How we combine SEO and CRO testing

The Only Way to Truly Measure SEO Impact

Most SEO teams still rely on best practices, gut feel, or slow feedback loops to make decisions. But without controlled testing, it’s impossible to know which changes actually improve performance.

SearchPilot takes the guesswork out by running server-side, statistically robust SEO A/B tests that isolate the impact of your changes. It works at the page level (not user level), uses predictive modelling, and ensures search engines see exactly what you want them to.

It’s the most reliable way to prioritise what works and finally prove the value of SEO to your business.

See SearchPilot in Action with a Personalised Demo
