Start here: how our SEO split tests work

If you aren't familiar with how we run the controlled SEO experiments that form the basis of all our case studies, you may find it useful to read the explanation at the end of this article before digesting the details of the case study below. If you'd like to get a new case study by email every two weeks, just enter your email address here.

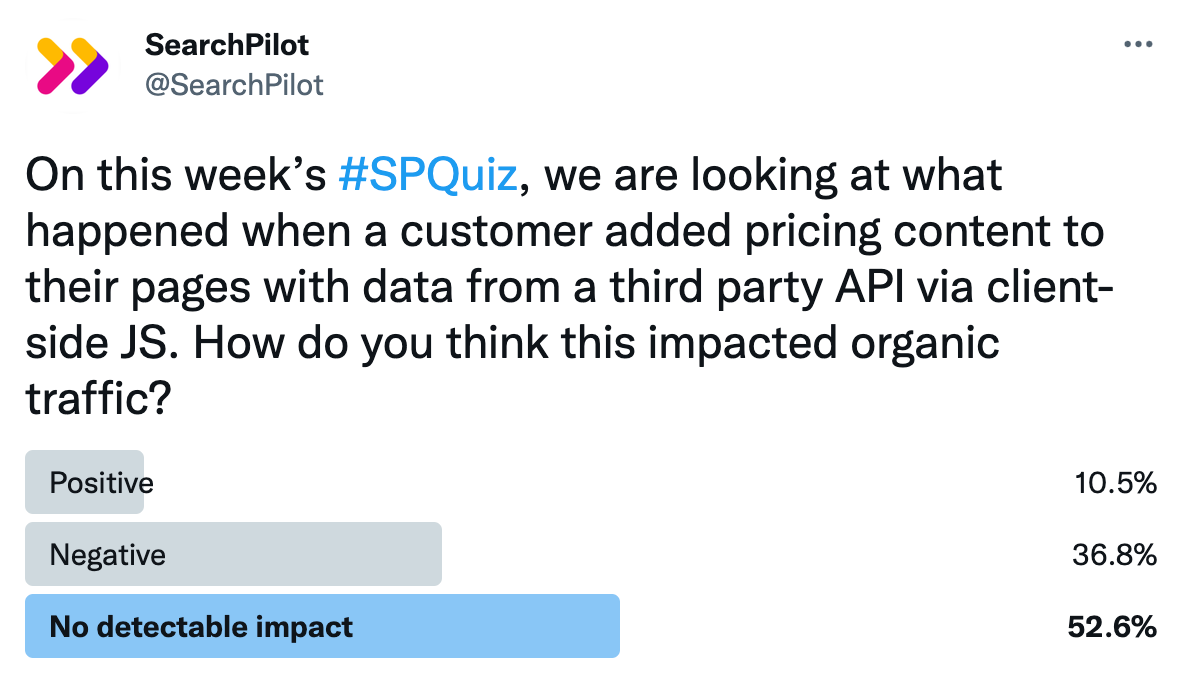

This week’s case study highlights a test that used a client-side script to request data from an API and inject it onto the page to enhance the page’s content. We asked our Twitter followers in our #SPQuiz poll what they suspected the impact on organic traffic would be:

Most of the respondents believed there wouldn’t be a detectable impact from this test. Read on to see if they were correct.

The Case Study

Everyone should strive to build content pages that are a one-stop shop for any question that searchers may have. While such content is high-value and a competitive advantage, a common obstacle is that collecting the data requires resources not everyone can afford.

For our case study this week, we’re highlighting a customer in the travel industry who tested a more practical way to accomplish this. Our customer pulled in data from a third-party airline price aggregator and placed the content on their site. Using SearchPilot, they injected a client-side script onto the page that requested data from the third-party API and placed the returned values onto the page. The risk of this approach was that we didn’t know whether Google would render the JavaScript and see the data – we always recommend server-side tests when feasible! At minimum, Googlebot would see the additional container and heading on the page intended to house the requested data.
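
As a rough illustration of this kind of client-side injection, here is a minimal TypeScript sketch. It is not the customer's actual script: the `Deal` shape, the function names, and the example URL are all hypothetical, and it assumes the container and its heading are already present in the served HTML so that only the list of prices depends on the API call.

```typescript
// Hypothetical shape of one record returned by the third-party price API.
interface Deal {
  route: string;
  price: number;
  currency: string;
}

// Minimal view of a DOM element, so the sketch does not depend on browser typings.
interface Container {
  innerHTML: string;
}

// Escape text before placing it in HTML, because third-party data is untrusted.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build the list markup that goes inside the existing container.
function renderDeals(deals: Deal[]): string {
  const items = deals
    .map((d) => `<li>${escapeHtml(d.route)}: ${d.price.toFixed(2)} ${escapeHtml(d.currency)}</li>`)
    .join("");
  return `<ul>${items}</ul>`;
}

// Request the data and place the returned values into the container.
// If the request fails, the container is left untouched (heading only).
async function injectDeals(
  container: Container,
  apiUrl: string,
  fetchJson: (url: string) => Promise<Deal[]>
): Promise<void> {
  try {
    const deals = await fetchJson(apiUrl);
    container.innerHTML = renderDeals(deals);
  } catch {
    // Nothing to inject; crawlers and users still see the container and heading.
  }
}

// In a browser this might be wired up as follows (placeholder selector and URL):
// injectDeals(
//   document.querySelector("#deals-list")!,
//   "https://api.example.com/deals",
//   (url) => fetch(url).then((r) => r.json())
// );
```

Because the prices only appear after the request completes, a crawler that does not execute the script would see just the container and heading, which is exactly the uncertainty this test set out to resolve.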

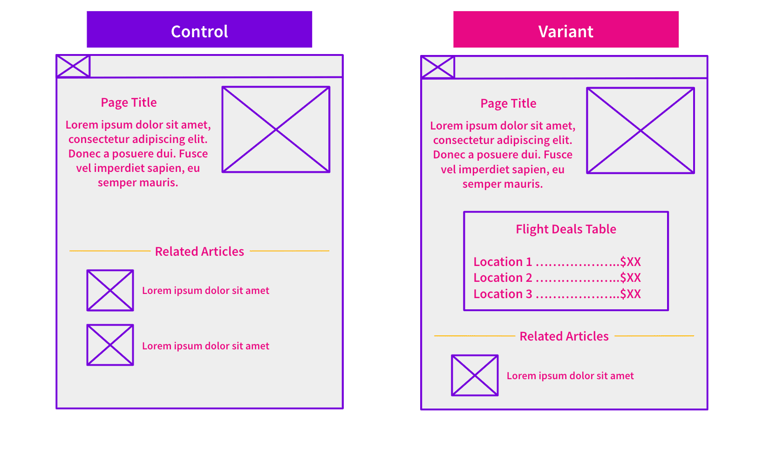

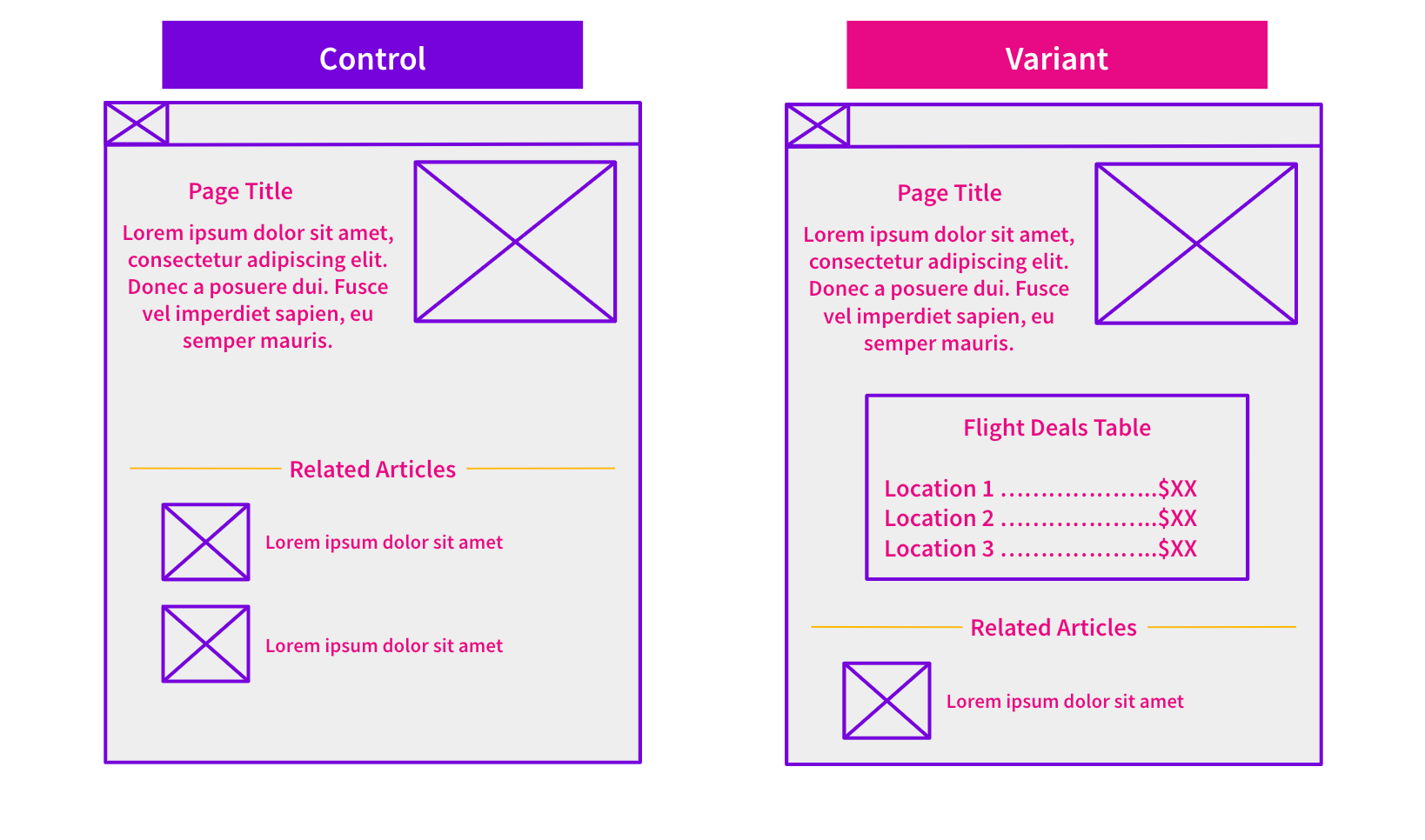

The hypothesis was that including third-party data on their page would improve relevancy signals for deal-related queries, which could improve rankings and lead to increased organic traffic. Additionally, providing more useful and accurate content to users could help improve user signals. On the flip side, we also understood that Google might not pick up on the content, since it is injected after the initial page load. In that case, we would expect Google to discount the content and give it no value. The resulting test build looked similar to this image:

Test Results

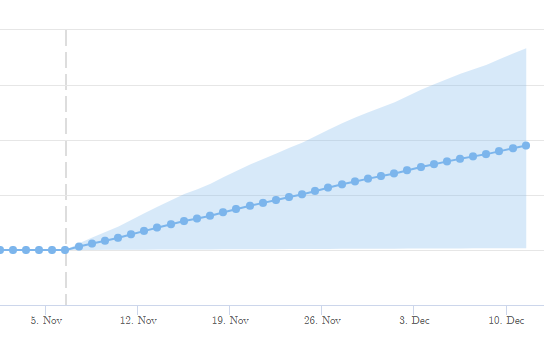

This was the impact on organic traffic:

The test result was positive at 95% confidence with an estimated uplift of 7%!

We see that Googlebot discovered this change swiftly and the resulting impact was immediate. Running the variant pages through Google’s Mobile-Friendly Test tool also revealed that Google was rendering and seeing the returned API data on the page. This change was rolled out to the customer’s production site and served as inspiration for additional tests. Whether or not Google renders the content from an API call that you place on your website will affect the impact you see from a change like this, but we think the strategy behind this test is relevant for a lot of companies. It’s always worth researching how you can use what is publicly available to enhance your own content and improve the organic visitor’s experience.

How our SEO split tests work

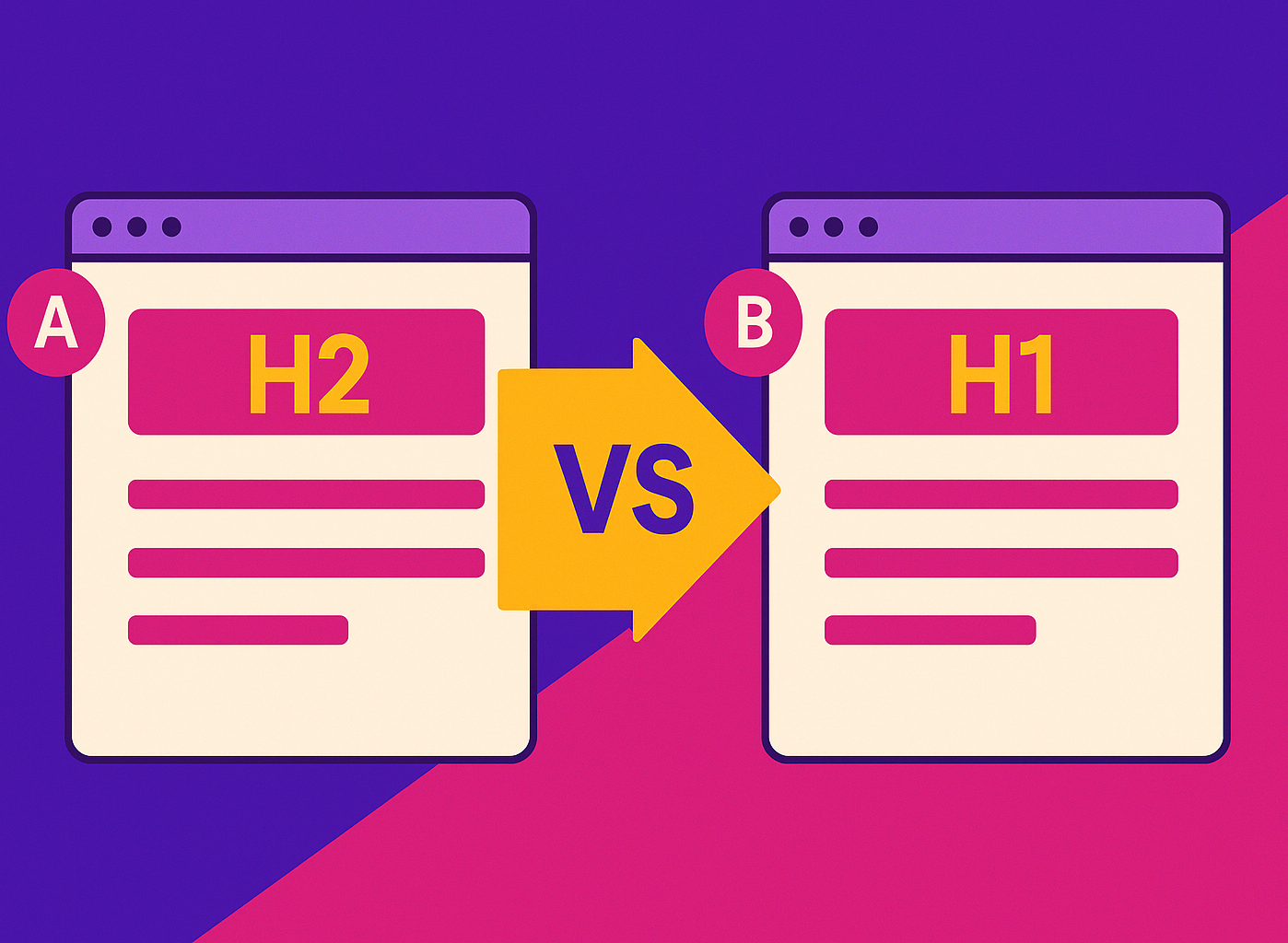

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in performance of the variant pages compared to the control, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome to a forecast, and comes with a confidence interval so we know how certain we are that the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to clickthrough rate (more here).
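
To make the idea of comparing an actual outcome to a forecast more concrete, here is a deliberately simplified TypeScript sketch. It is not the model SearchPilot uses: it assumes a forecast of variant traffic is already available (for example, one derived from the control pages), treats each day's relative difference as an independent sample, and uses a normal approximation for the 95% interval. The function name `estimateUplift` and all the numbers are hypothetical.

```typescript
interface UpliftEstimate {
  uplift: number; // mean relative difference between actual and forecast
  lower: number; // lower bound of the confidence interval
  upper: number; // upper bound of the confidence interval
  significant: boolean; // true when the interval excludes zero
}

// Estimate uplift from daily actual vs. forecast organic sessions.
// z = 1.96 corresponds to a 95% interval under a normal approximation.
function estimateUplift(actual: number[], forecast: number[], z: number = 1.96): UpliftEstimate {
  if (actual.length !== forecast.length || actual.length < 2) {
    throw new Error("need two series of equal length with at least two days");
  }
  // Relative difference per day: 0.07 means actual was 7% above forecast.
  const rel = actual.map((a, i) => (a - forecast[i]) / forecast[i]);
  const n = rel.length;
  const mean = rel.reduce((sum, x) => sum + x, 0) / n;
  // Sample variance and the standard error of the mean.
  const variance = rel.reduce((sum, x) => sum + (x - mean) * (x - mean), 0) / (n - 1);
  const se = Math.sqrt(variance / n);
  const lower = mean - z * se;
  const upper = mean + z * se;
  return { uplift: mean, lower, upper, significant: lower > 0 || upper < 0 };
}

// Made-up example: four days of variant traffic against its forecast.
const result = estimateUplift([110, 208, 315, 424], [100, 200, 300, 400]);
console.log(result);
```

A result counts as positive at the chosen confidence level when the whole interval sits above zero. A real analysis would run over far more days and account for trends and day-to-day correlation, which this sketch ignores.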

Read more about how SEO testing works or get a demo of the SearchPilot platform.