Start here: how our SEO split tests work

If you aren't familiar with how we run the controlled SEO experiments behind all our case studies, you may find it useful to read the explanation at the end of this article before digesting the details of the case study below.

For this week's #SPQuiz, we asked our followers on X/Twitter and LinkedIn what they thought the impact on organic traffic was when we added three-letter airport codes to the title tags and H1s on the flight detail pages for a travel customer.

Poll Results

Most of our followers believed that adding this information to title tags would boost organic traffic, while a smaller group of 20% felt the change was too minor to drive a meaningful impact.

This change actually had a negative impact on organic traffic!

The Case Study

Title tags and heading tags play a key role in SEO by helping search engines understand the contents of a page. One of our customers in the travel industry has been exploring various H1 and title tag combinations to determine which signals might help boost organic performance. This particular test focused on whether including city or airport codes in brackets could enhance search visibility, specifically on the Flights to City/Airport pages of their Italian domain.

The hypothesis was that adding 4-letter city codes (e.g., LOND for London) or 3-letter International Air Transport Association (IATA) airport codes (e.g., LHR for London Heathrow) to the H1 and title tag would signal greater relevance and authority to search engines. It could also improve visibility for users who search by airport code, and potentially capture more targeted traffic.

What was changed

This test added 4-letter city codes or 3-letter IATA airport codes in brackets after the destination name in both the title tag and H1 on the Italian domain’s Flights to City/Airport pages.
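As a rough illustration of the variant treatment, the sketch below appends a code in brackets after the destination name. The function name `add_destination_code` and the Italian title strings are hypothetical, since the customer's actual title and H1 templates are not given here, and round brackets are assumed.

```python
def add_destination_code(text: str, destination: str, code: str) -> str:
    """Append `code` in round brackets after the first mention of `destination`.

    Hypothetical helper: the real page templates are not shown in the case study.
    """
    if f"({code})" in text:
        return text  # code already present, leave the text unchanged
    return text.replace(destination, f"{destination} ({code})", 1)


# Made-up example strings for a city page and an airport page.
city_h1 = add_destination_code("Voli per Londra", "Londra", "LOND")
airport_h1 = add_destination_code(
    "Voli per Londra Heathrow", "Londra Heathrow", "LHR"
)
print(city_h1)
print(airport_h1)
```

The same transformation would be applied to both the title tag and the H1 on variant pages, while control pages keep their original text.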

Results

The test produced a negative result that was statistically significant at the 95% confidence level: an estimated 16% drop in organic traffic. Despite the initial hypothesis that adding city or IATA codes might strengthen SEO signals, the data indicates that this change harmed performance.

There are several possible reasons for the negative outcome. The addition of airport codes may have made the titles and H1s feel less natural or misaligned with typical user search behavior, potentially affecting how Google interpreted the relevance of the pages. It’s also possible that the codes didn’t add meaningful value from a content perspective, offering no new information that wasn’t already available through the page’s content or URL.

Sometimes, changes like this can also come across as over-optimizing, which might lead Google to give less weight to pages that include extra elements that don’t benefit users or improve their experience. While this change was reflected in SERPs, it’s possible that the unfamiliar or less user-friendly appearance of the codes made the listings less appealing to general users, potentially leading to a lower click-through rate.

Ahrefs data shows that users are significantly more likely to search for airport codes than city codes. That is consistent with what we saw when we segmented the data: the evidence suggests the negative impact was driven primarily by the flights-to-city pages rather than the flights-to-airport pages. Including a code like "LOND" not only offers no ranking benefit in this case but may also hurt CTR, as it doesn’t align with how users search.

While adding structured elements, such as destination codes, can sometimes strengthen E-E-A-T signals, this test suggests that Google may not reward these changes if they do not add meaningful value to the content or improve clarity. For this reason, we didn't recommend rolling out the change.

As always, testing remains crucial. Future experiments could explore alternative placements or formats for destination codes, as well as other methods to enhance SEO signals without compromising relevance or readability.

To receive more insights from our testing, sign up for our case study mailing list, and please feel free to get in touch if you want to learn more about this test or our split testing platform more generally.

How our SEO split tests work

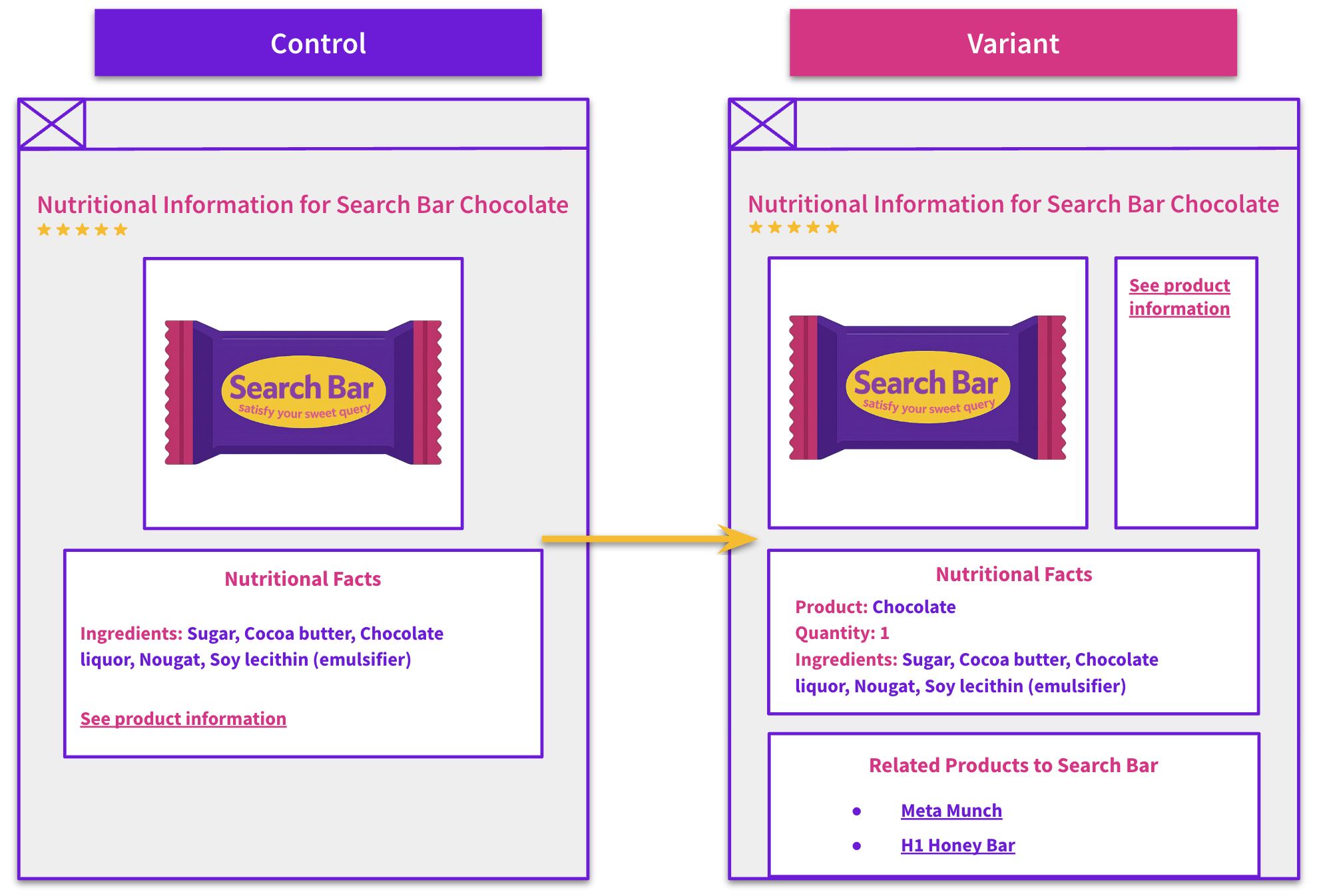

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in performance of the variant pages compared to the control, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome to a forecast, and comes with a confidence interval so we know how certain we are the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to click-through rate.
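The idea of comparing the actual outcome to a forecast can be sketched in a few lines. This is a deliberately simplified illustration, not the model our platform uses: it assumes variant traffic can be forecast from control traffic using the pre-test ratio between the two groups, and it uses a basic bootstrap for the confidence interval. All function names and numbers below are invented for the example.

```python
import random
from statistics import mean


def forecast_variant(pre_control, pre_variant, test_control):
    """Forecast variant traffic in the test period from control traffic,
    assuming the pre-test variant/control ratio would otherwise hold."""
    ratio = sum(pre_variant) / sum(pre_control)
    return [ratio * c for c in test_control]


def estimate_effect(pre_control, pre_variant, test_control, test_variant,
                    n_boot=2000, level=0.95, seed=0):
    """Return (mean relative effect, lower bound, upper bound)."""
    forecast = forecast_variant(pre_control, pre_variant, test_control)
    # Daily relative gap between actual and forecast variant traffic.
    daily = [(a - f) / f for a, f in zip(test_variant, forecast)]
    # Percentile bootstrap: resample days with replacement, recompute the mean.
    rng = random.Random(seed)
    boots = sorted(
        mean(rng.choices(daily, k=len(daily))) for _ in range(n_boot)
    )
    lo_idx = int((1 - level) / 2 * n_boot)
    hi_idx = int((1 + level) / 2 * n_boot) - 1
    return mean(daily), boots[lo_idx], boots[hi_idx]


# Invented daily organic sessions, for illustration only.
pre_control = [1000, 1100, 950, 1050, 1000, 980, 1020]
pre_variant = [500, 550, 475, 525, 500, 490, 510]
test_control = [1000, 1040, 990, 1010, 1060, 970, 1030]
test_variant = [420, 440, 410, 430, 450, 405, 428]

effect, low, high = estimate_effect(
    pre_control, pre_variant, test_control, test_variant
)
print(f"Estimated effect: {effect:.1%} (95% interval {low:.1%} to {high:.1%})")
```

Because the forecast is built from the control pages, anything that affects both groups equally (seasonality, algorithm updates) moves the forecast too and is not counted as an effect. If the whole interval sits below zero, as it does for these made-up numbers, the result would be read as negative at the chosen confidence level.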

Read more about how SEO A/B testing works or get a demo of the SearchPilot platform.