Start here: how our SEO split tests work

If you aren't familiar with how we run the controlled SEO experiments behind all our case studies, you might find it useful to read the explanation at the end of this article before digging into the details of the case study below.

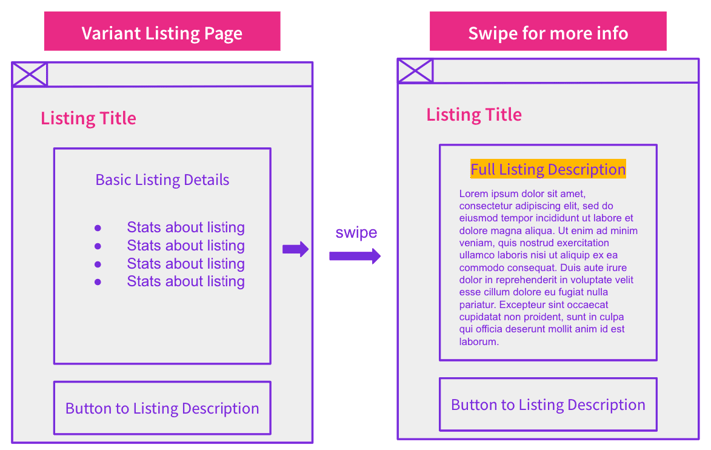

In this week’s #SPQuiz, a listings website upgraded its category pages with a “swipe for more info” function that showed extra information for each listing. Previously, users had to click through to a separate page to see a listing’s details.

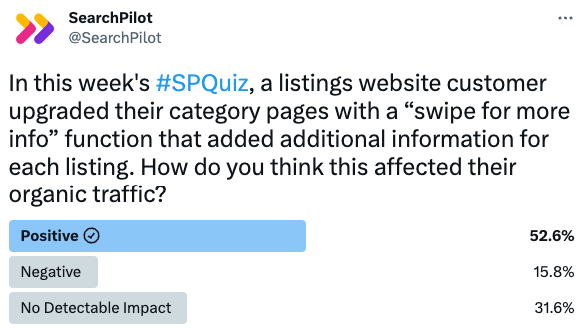

We asked our followers on Twitter and LinkedIn what they thought the impact would be on organic traffic.

Here is what they thought:

Twitter Poll

The majority of our Twitter followers thought this change would have a positive impact on organic traffic while 31% thought it would have no detectable impact.

LinkedIn Poll

Similar to the results on our Twitter poll, more than half of our LinkedIn followers believed this change would have a positive impact on organic traffic, with 38% believing there would be no detectable impact.

Let’s dig into this case study to find out who was right!

The Case Study

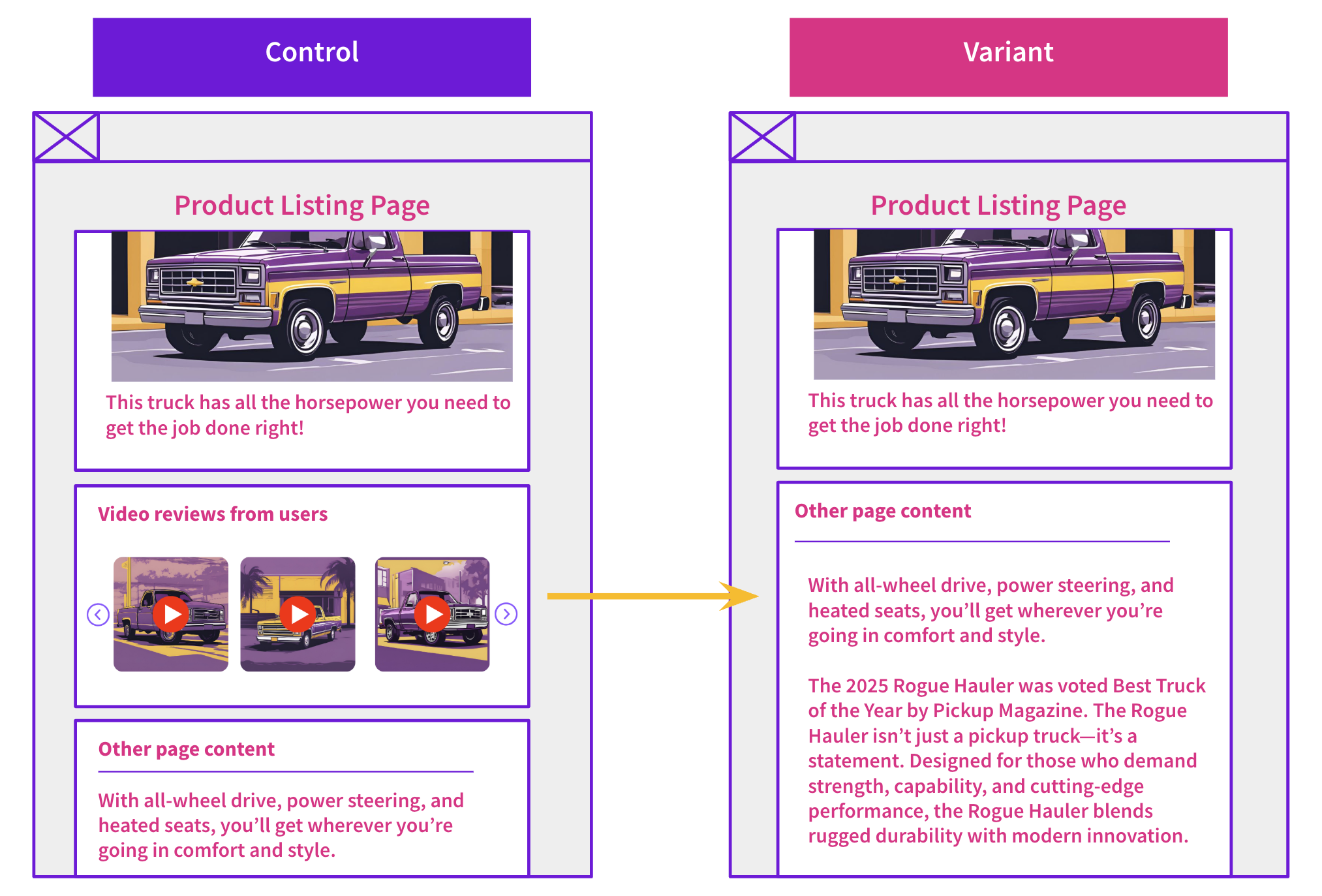

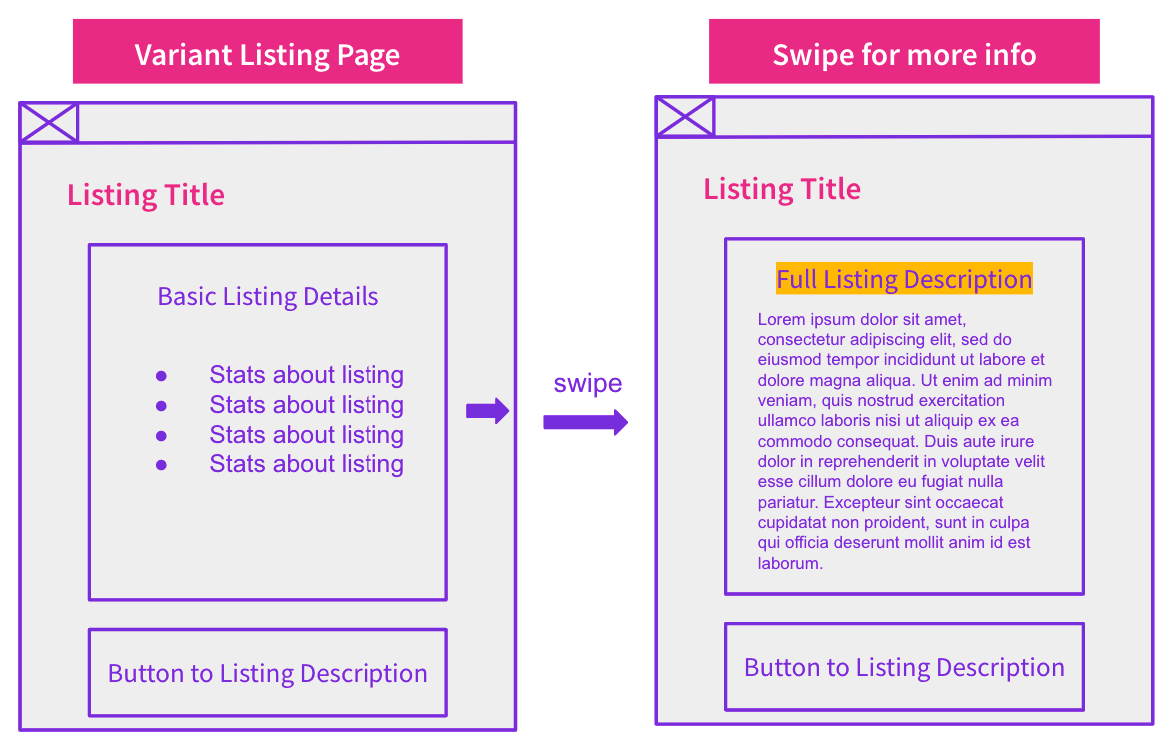

In this case study, we’re highlighting a customer that implemented a ‘swipe for more info’ feature on the category pages of a listings website, allowing users to preview additional information about each posting. The hypothesis for this test was that the description text, previously only available on the details page, would be crawled on the category page, and that the extra page content would help improve rankings. A second expected benefit was that users would get a brief overview of each listing without having to click into the details page, improving the user experience and potentially further benefiting organic traffic.

This test utilized SearchPilot’s request modification feature to implement the changes. Request modification tests are perfect for testing complicated updates to a website, such as large UI layout changes or new components that require pulling additional data. Unlike CRO testing, this test is designed to measure the impact on search performance.

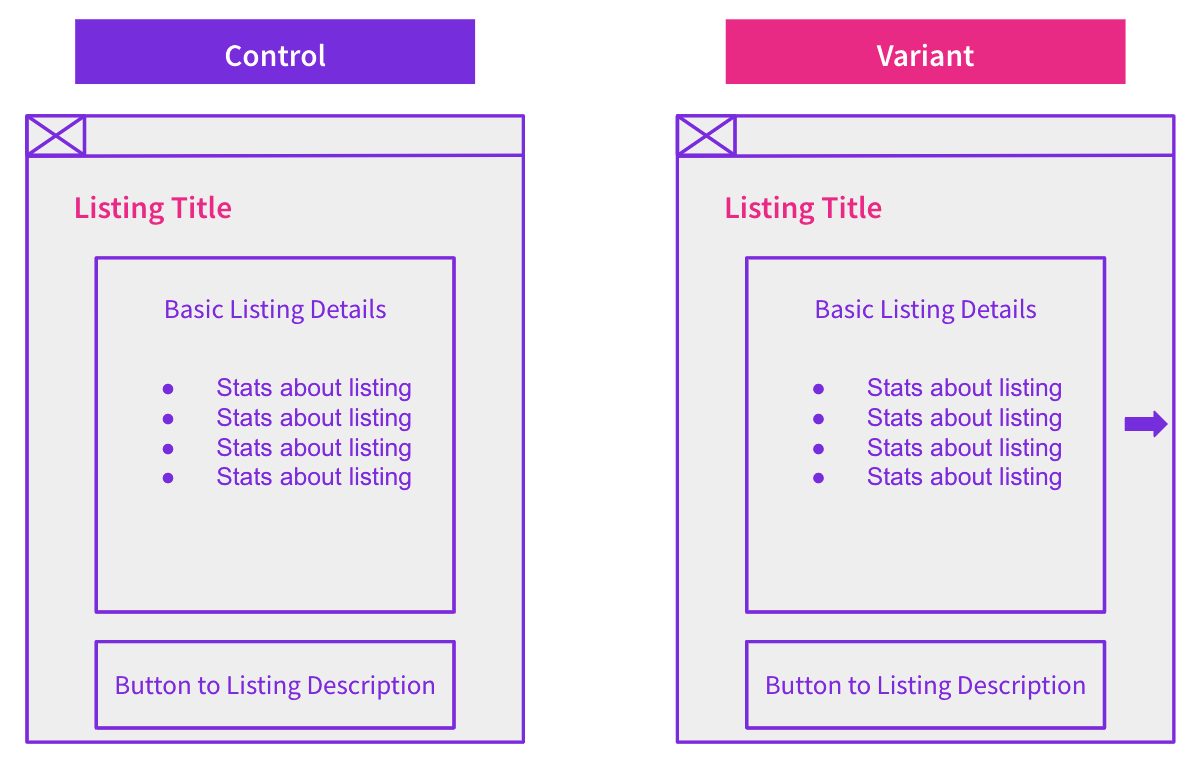

Visually there weren’t significant changes to the control vs. variant pages initially:

However, once a user swipes to reveal the description, the new portion of the page is shown with the additional information. This description content was already in the HTML, and so visible to search engines, before the user swiped to see it.
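If you want to sanity-check a setup like this on your own site, one rough approach is to confirm that the hidden text is present in the raw HTML response rather than being loaded only after the interaction. The snippet below is a minimal sketch using Python's standard library. The sample markup, class names, and the `text_in_raw_html` helper are all made up for illustration and are not taken from the customer's site or from SearchPilot.

```python
from html.parser import HTMLParser


class TextCollector(HTMLParser):
    """Collects text nodes from an HTML document, skipping script and style."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text that is not inside script/style.
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def text_in_raw_html(raw_html, phrase):
    """Return True if `phrase` appears in the text of the raw HTML."""
    parser = TextCollector()
    parser.feed(raw_html)
    parser.close()
    page_text = " ".join(parser.chunks)
    return phrase.lower() in page_text.lower()


# Hypothetical category-page markup: the description is in the HTML
# but would be hidden by CSS until the user swipes.
SAMPLE_HTML = """
<ul class="listings">
  <li class="listing">
    <h3>Two-bedroom flat</h3>
    <div class="listing-description" aria-hidden="true">
      Bright flat close to the station with a private garden.
    </div>
  </li>
</ul>
"""

print(text_in_raw_html(SAMPLE_HTML, "private garden"))
print(text_in_raw_html(SAMPLE_HTML, "swimming pool"))
```

This only tells you whether the text is in the HTML you feed it. It says nothing about how a search engine will weight content that is hidden on first load.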

So how did this experiment perform?

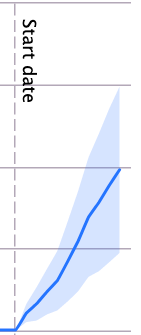

The following chart shows the impact these changes had on the variant pages:

The test had a positive impact on organic traffic, leading to a +5% uplift!

The variant pages, where we implemented the “swipe for more info” feature, saw a +5% uplift in organic traffic, an estimated 24,000+ additional sessions per month. This result supports our hypothesis that including additional content on the listing page would improve rankings. Since this was a statistically significant increase in sessions, we recommended that the change be deployed site-wide.

It’s interesting to note that the additional content was beneficial here even though it wasn’t visible to the user on first page load, before they interacted with the page. In other tests, we have found that text that isn’t visible can be less effective than visible text, even when the visible text needs to be scrolled into view. That suggests a possible follow-up test: incorporate the new text into the body of the page, visible on a simple scroll without any interaction, and see whether there is an incremental uplift.

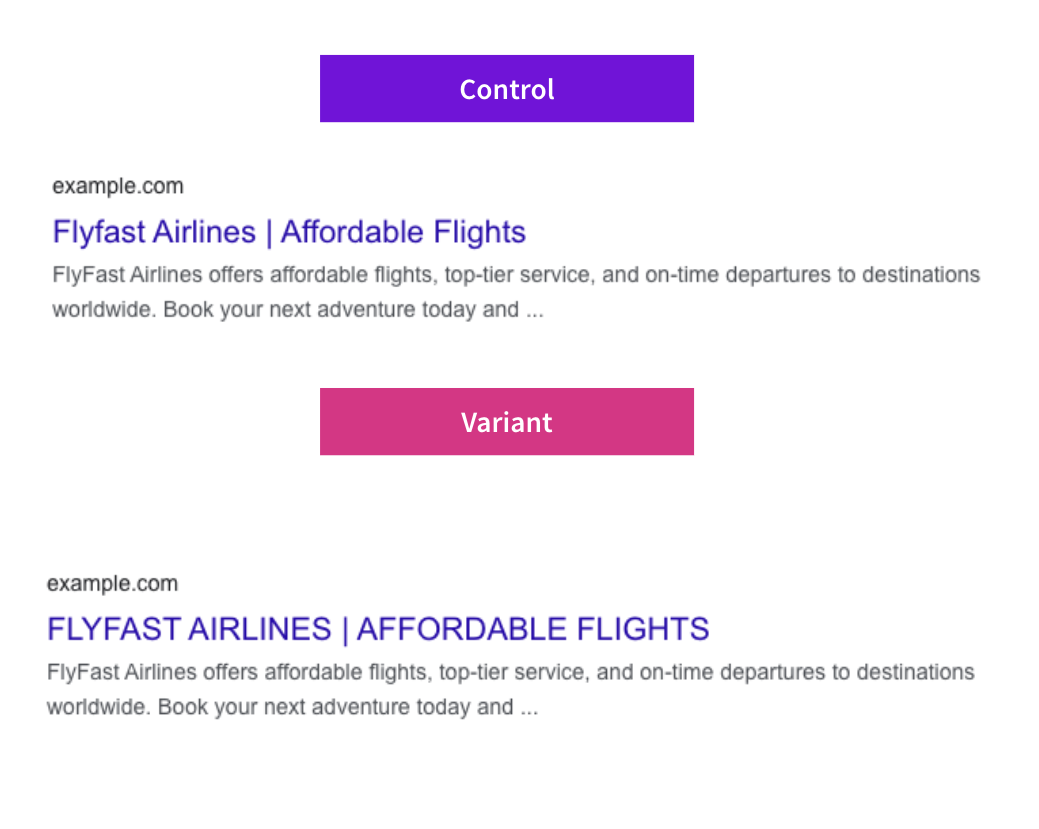

How our SEO split tests work

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in performance of the variant pages compared to the control, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome to a forecast, and comes with a confidence interval so we know how certain we are that the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to clickthrough rate.
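To make the "actual versus forecast" idea above more concrete, here is a deliberately simplified sketch in Python. It is not SearchPilot's model. It assumes the variant group's traffic can be forecast by scaling the control group's traffic by the ratio the two groups had before the test, and it uses a basic bootstrap over test-period days for the interval. All numbers are invented for illustration and are not the data from this case study.

```python
import random


def estimate_uplift(pre_control, pre_variant, test_control, test_variant,
                    n_boot=2000, seed=0):
    """Estimate relative uplift of variant pages versus a forecast.

    The forecast is the control group's test-period traffic scaled by
    the variant/control ratio observed before the test started.
    Returns (point_estimate, lower_95, upper_95).
    """
    # How the two groups related to each other before the change.
    ratio = sum(pre_variant) / sum(pre_control)

    # Forecast: what the variant pages would likely have received
    # on each test day if nothing had been changed.
    forecast = [c * ratio for c in test_control]
    days = list(zip(test_variant, forecast))

    def uplift(sample):
        actual = sum(a for a, _ in sample)
        expected = sum(f for _, f in sample)
        return actual / expected - 1

    point = uplift(days)

    # Bootstrap: resample test days with replacement to get a rough
    # 95% interval. This ignores uncertainty in the pre-period ratio.
    rng = random.Random(seed)
    boots = sorted(
        uplift(rng.choices(days, k=len(days))) for _ in range(n_boot)
    )
    lower = boots[int(0.025 * n_boot)]
    upper = boots[int(0.975 * n_boot) - 1]
    return point, lower, upper


# Made-up daily organic sessions for each group of pages.
pre_control = [1000, 1020, 980, 1010, 990, 1005, 995]
pre_variant = [1000, 1015, 985, 1005, 995, 1000, 1000]
test_control = [1010, 990, 1000, 1020, 980, 1005, 995]
test_variant = [1065, 1035, 1050, 1075, 1025, 1055, 1045]

point, lower, upper = estimate_uplift(
    pre_control, pre_variant, test_control, test_variant
)
print(f"uplift: {point:+.1%} (95% interval {lower:+.1%} to {upper:+.1%})")
```

Because the control pages experience the same seasonality and algorithm updates as the variant pages, those effects are already baked into the forecast. If the whole interval sits above zero, as it does with these invented numbers, that is the kind of result we would call a detectable positive impact. A production analysis would use a more robust forecasting model than a single ratio.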

Read more about how SEO A/B testing works or get a demo of the SearchPilot platform.