Start here: how our SEO split tests work

If you aren't familiar with how we run the controlled SEO experiments behind all our case studies, you may find it useful to read the explanation at the end of this article before digesting the details of the case study below.

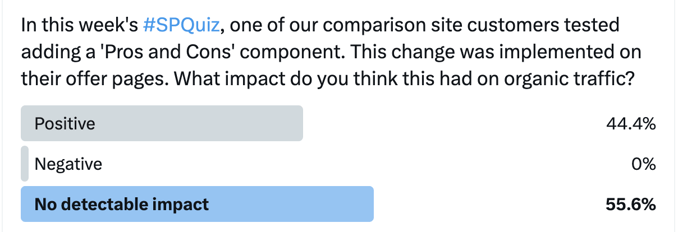

In this week’s #SPQuiz, we looked at the impact on organic traffic when we add Pros and Cons to our customer’s offer pages.

Across Twitter and LinkedIn, voters were split between positive and inconclusive, and few people thought this test would have negative consequences. There was a slight disparity between the two social networks: our LinkedIn followers were slightly more optimistic that this test would be positive. Who was right? Read on to find out!

The Case Study

Adding relevant content to pages is a key SEO recommendation that can yield favourable results across almost all industries. This is especially true when the content is helpful to users, as it should then positively impact Google’s perception of the pages’ “experience, expertise, authoritativeness, and trustworthiness”, or “EEAT”.

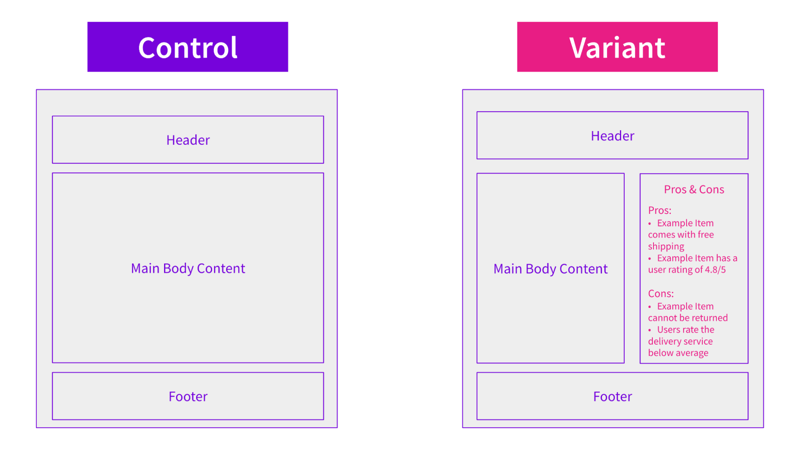

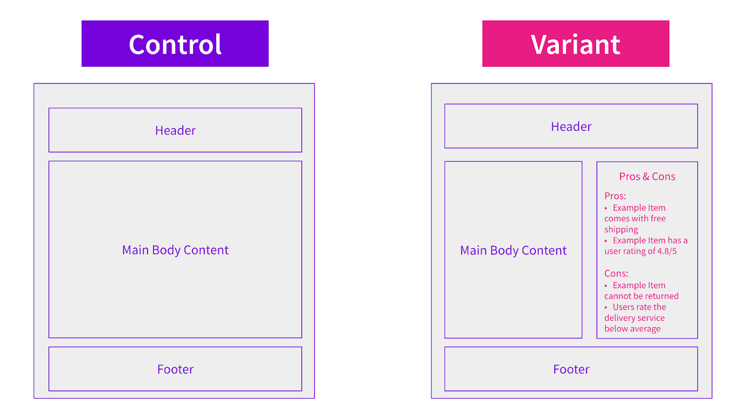

With this in mind, one of our comparison site customers tested adding a “pros and cons” content table to their offer pages. This gave users extra context and information to make an informed choice about clicking on the link through to the product offer being described.

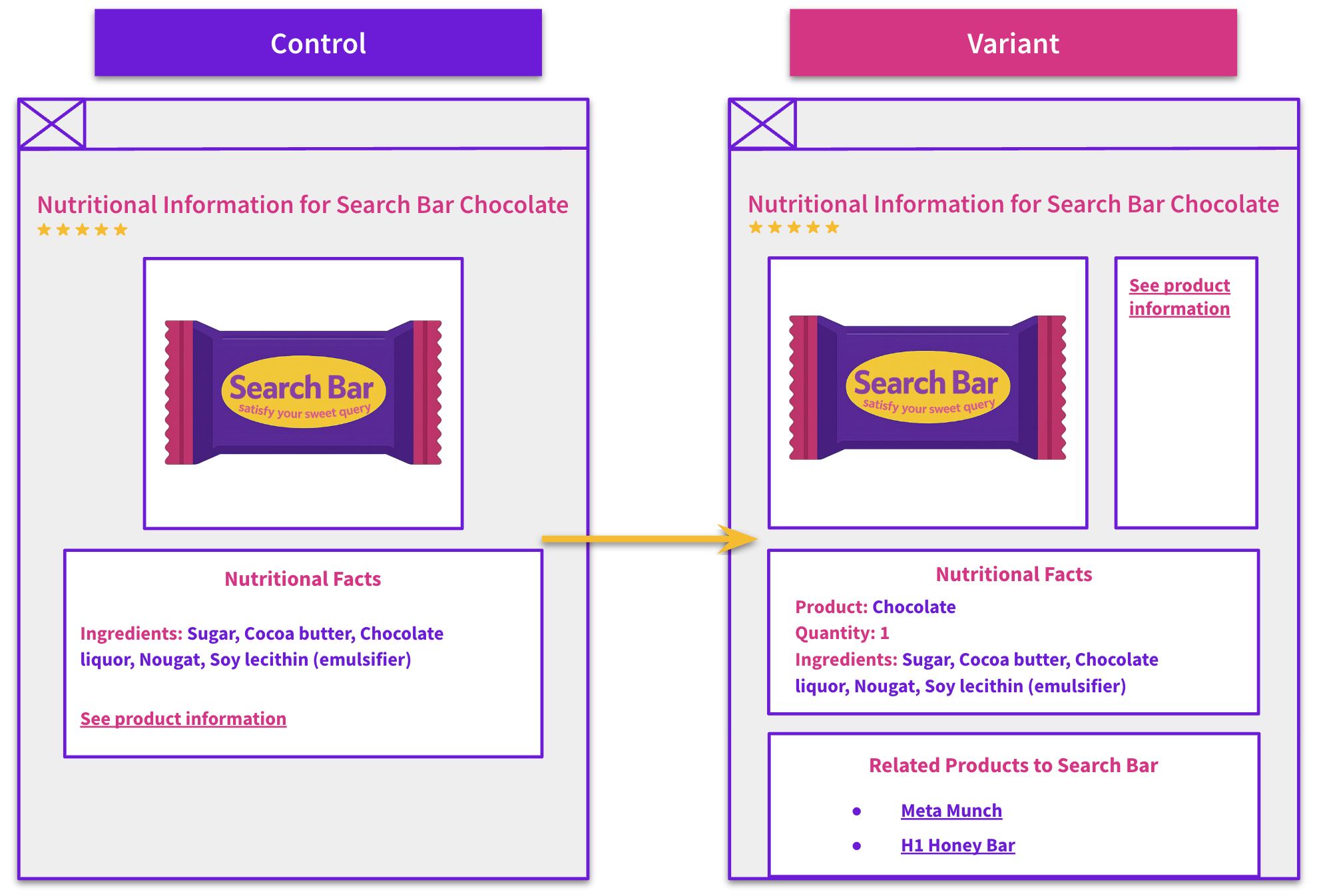

The hypothesis was that adding this content would improve Google’s perception of the pages’ EEAT, and that this would have a beneficial effect on rankings. It might also allow the pages to rank for more long-tail keywords that appear within the pros and cons table. The customer also hoped that the table layout would lead to table-format featured snippets, which would bring a clickthrough rate benefit.

It should be noted that this change did not involve adding “positiveNotes” / “negativeNotes” pros and cons schema markup, as the customer initially decided to just test the content’s impact alone.

What Was Changed

Results

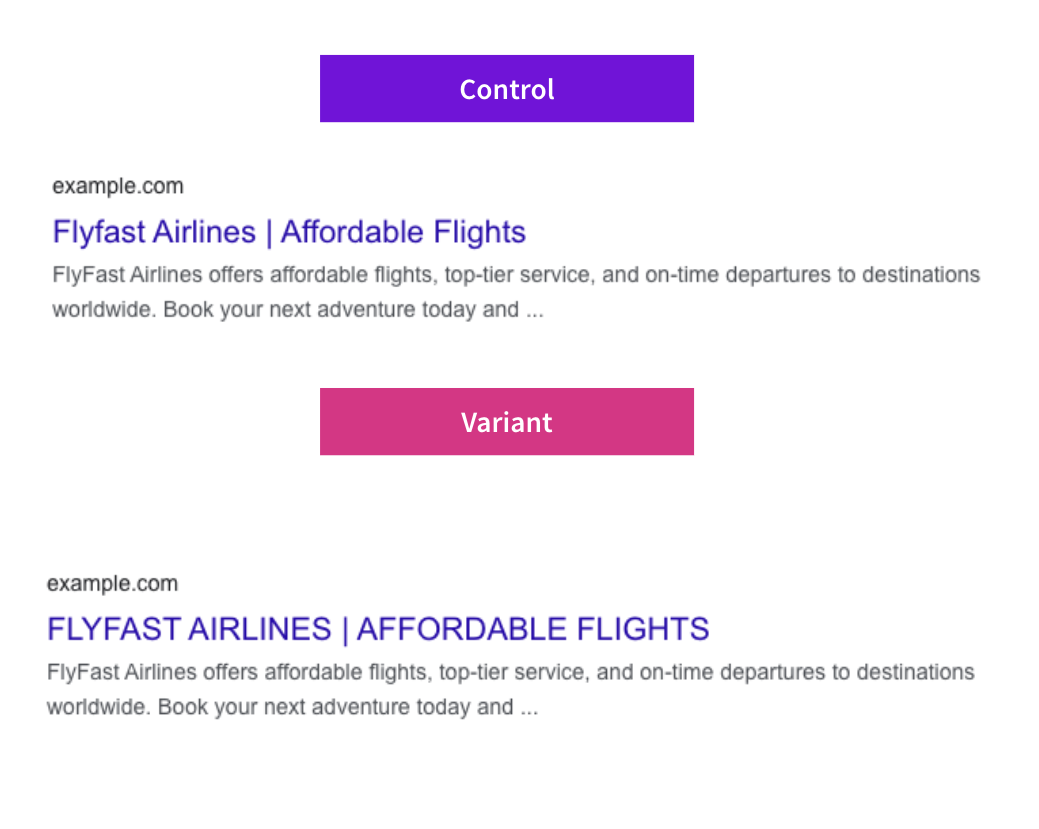

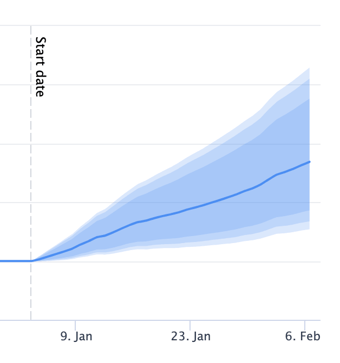

This test was a big winner! In percentage terms, it stands as one of SearchPilot’s biggest-ever winning tests, delivering a 50% uplift in organic traffic to the pages that had the change applied. No featured snippets were gained, so the uplift can be attributed to ranking improvements alone.

Note that the site section (the group of pages to which the change was applied) was relatively small, so the absolute impact on sessions wasn’t as big as in some other tests. Even so, this test clearly showed the benefit of adding content that is helpful to users.

How our SEO split tests work

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in performance of the variant pages compared to the control, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome to a forecast, and comes with a confidence interval so we know how certain we are that the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to clickthrough rate (more here).
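
To make the idea in the points above concrete, here is a minimal Python sketch of comparing variant traffic against a control-based forecast. It is an illustration only and not SearchPilot's actual model, which this article does not describe. It assumes a very simple forecast (the pre-test ratio of variant to control sessions, applied to control traffic during the test) and a bootstrap confidence interval. The function name `estimate_uplift` and all the daily session numbers are hypothetical, made up for the example.

```python
import random
import statistics


def estimate_uplift(pre_control, pre_variant, test_control, test_variant,
                    n_boot=2000, seed=0):
    """Return (uplift, (low, high)) for variant pages vs. a control-based forecast.

    Simplified stand-in for a real forecasting model: the forecast for the
    variant pages is the pre-test variant/control ratio times control traffic.
    """
    # Pre-test relationship between groups: variant sessions per control session.
    ratio = sum(pre_variant) / sum(pre_control)

    # Daily uplift: actual variant traffic relative to its forecast.
    # External effects (seasonality, algorithm updates) move control traffic
    # too, so they are already reflected in the forecast.
    daily = [v / (ratio * c) - 1 for c, v in zip(test_control, test_variant)]
    point = statistics.mean(daily)

    # Bootstrap: resample test days with replacement for a rough 95% interval.
    rng = random.Random(seed)
    means = sorted(
        statistics.mean(rng.choices(daily, k=len(daily))) for _ in range(n_boot)
    )
    low = means[n_boot * 25 // 1000]
    high = means[n_boot * 975 // 1000 - 1]
    return point, (low, high)


# Hypothetical daily organic sessions (invented for illustration).
pre_control = [100, 110, 95, 105, 100, 98, 102]
pre_variant = [50, 54, 48, 53, 50, 49, 51]
test_control = [120, 90, 100, 110, 105, 95, 100]
test_variant = [74, 52, 61, 65, 64, 56, 60]

uplift, (low, high) = estimate_uplift(
    pre_control, pre_variant, test_control, test_variant
)
print(f"Estimated uplift: {uplift:.1%} (95% interval {low:.1%} to {high:.1%})")
```

If the whole interval sits above zero, as it does with these made-up numbers, the result would be read as positive; an interval that straddles zero would be read as inconclusive.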

Read more about how SEO A/B testing works or get a demo of the SearchPilot platform.