Start here: how our SEO split tests work

If you aren't familiar with how we run the controlled SEO experiments that form the basis of all our case studies, you might find it useful to read the explanation at the end of this article before digesting the details of the case study below.

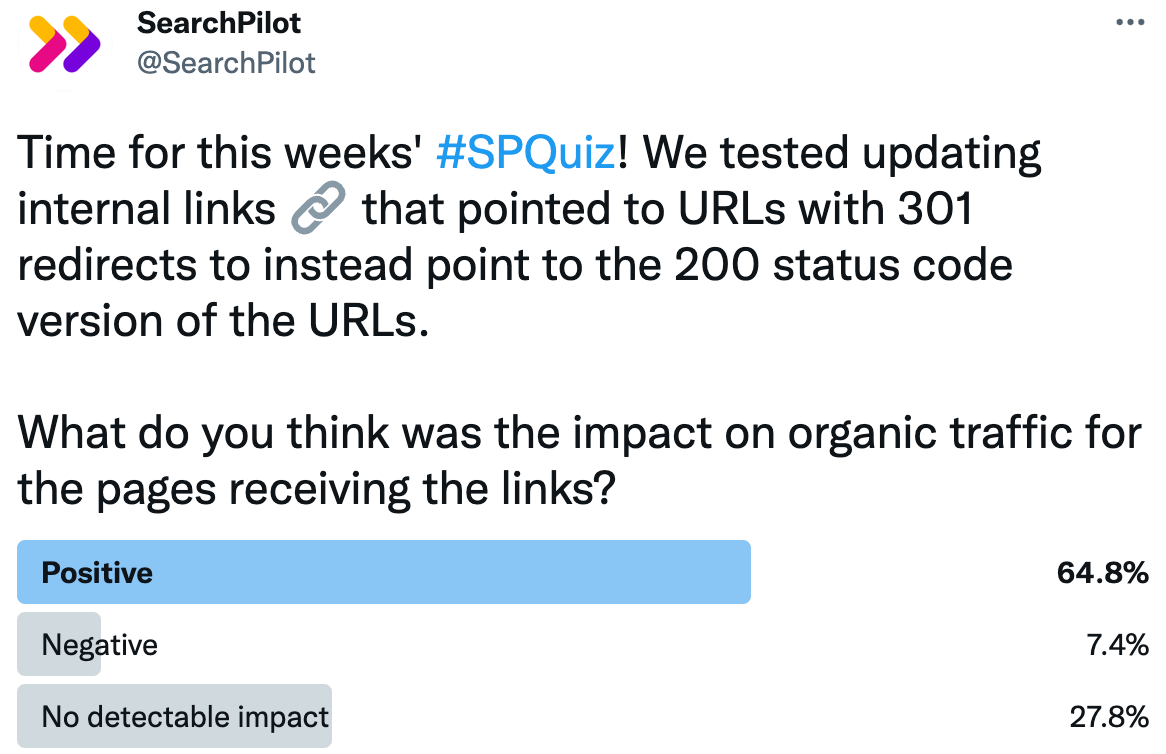

This week we asked our Twitter followers what they thought would happen when we updated internal links that pointed to URLs returning a 301 redirect so that they pointed directly to the destination URLs returning a 200 status code. More than half of respondents thought this would have a positive impact on organic traffic.

In fact, this change had no statistically significant impact on organic traffic. How could that be? Read the full case study below.

The Case Study

A typical technical SEO audit includes looking for internal links that point to URLs that redirect (return 3XX status codes). We are often asked whether we have any evidence that updating these internal links to point directly to the destination URLs (which return 200 status codes) improves SEO performance.

Every website and situation is different, but SearchPilot customers can test linking changes like this to quantify their impact.

A customer with pages for their retail locations changed the URL structure of their store pages. However, the city pages that linked to these stores still referenced the former version of the URL. This meant that there were thousands of internal links pointing to 301 redirects.
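To illustrate what finding and fixing these links involves, here is a minimal Python sketch. It assumes a hypothetical redirect map (old path to redirect target) and root-relative `href` values; the URLs, `REDIRECTS`, and the function names are illustrative only, not the customer's actual setup. In practice the fix belongs in the templates that generate the city pages; the string replacement below only demonstrates the idea.

```python
from html.parser import HTMLParser

# Hypothetical redirect map: old path -> target of its 301.
# In practice this would come from a crawl or the server's redirect config.
REDIRECTS = {
    "/stores/houston-main-st": "/locations/tx/houston/main-st",
    # A two-hop chain, to show that chains are followed to the end.
    "/stores/houston-galleria": "/store/houston-galleria",
    "/store/houston-galleria": "/locations/tx/houston/galleria",
}


class LinkCollector(HTMLParser):
    """Collects href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def resolve(path, redirects):
    """Follow the redirect map to the final URL; return (final_path, hops)."""
    hops = 0
    seen = {path}
    while path in redirects:
        path = redirects[path]
        hops += 1
        if path in seen:
            raise ValueError(f"redirect loop at {path}")
        seen.add(path)
    return path, hops


def redirected_links(html, redirects):
    """Return {href: (final_target, hops)} for every link that hits a redirect."""
    parser = LinkCollector()
    parser.feed(html)
    found = {}
    for href in parser.hrefs:
        final, hops = resolve(href, redirects)
        if hops > 0:
            found[href] = (final, hops)
    return found


def rewrite_links(html, redirects):
    """Naive rewrite: swap each redirected href for its final destination."""
    for href, (final, _) in redirected_links(html, redirects).items():
        html = html.replace(f'href="{href}"', f'href="{final}"')
    return html


city_page = (
    '<a href="/stores/houston-main-st">Main St</a> '
    '<a href="/locations/tx/houston/galleria">Galleria</a>'
)
print(redirected_links(city_page, REDIRECTS))
# {'/stores/houston-main-st': ('/locations/tx/houston/main-st', 1)}
```

Each hop counted by `resolve` is an extra request a crawler has to make before reaching the page the link was meant for.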

Google's documentation states that no PageRank is lost when a URL is 301 or 302 redirected. However, making search engine crawlers follow redirected internal links, rather than linking directly to the destination URLs, adds an extra hop that can affect how easily content is crawled and discovered. A large number of redirected internal links could also affect user experience, including perceived page load times. Any impact in these areas could, in turn, affect rankings.

Our customer was very likely to roll out this fix anyway, but with limited development resources they wanted a better sense of its impact in order to get the change prioritized in the dev queue.

In this case, the hypothesis was that replacing links to redirects with links to the 200 status code URLs would benefit the store pages, and potentially the city-level pages as well.

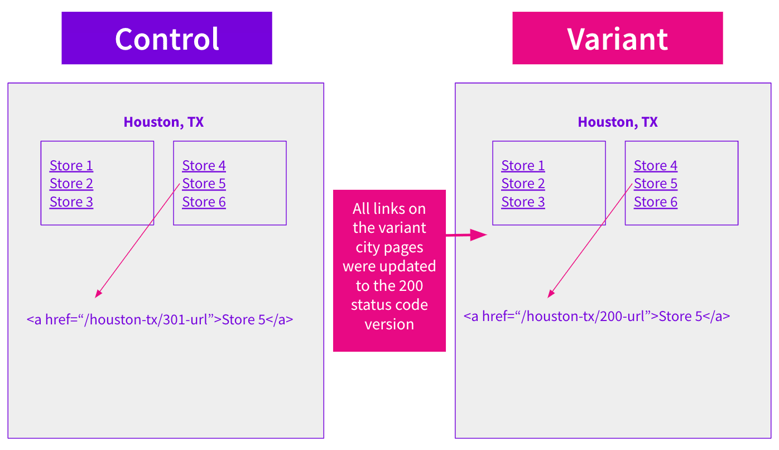

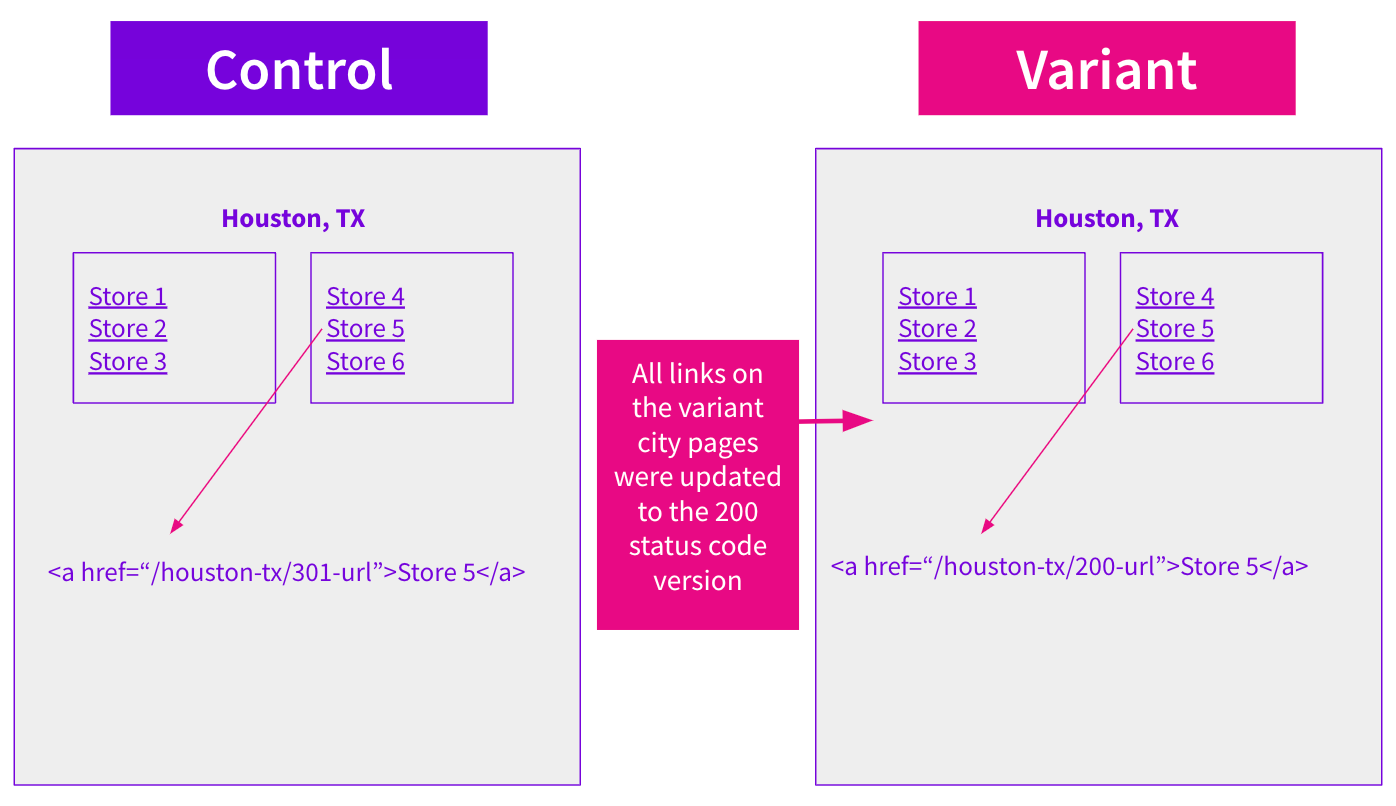

To measure the impact on both the store pages and the city pages, we set up this experiment by creating control and variant groups of pages based on randomized city groups. For example, all of the links on the Houston, Texas page were changed to the 200 status code URLs, while all of the links on the Miami, Florida page remained unchanged.

This setup put roughly half of the city pages, and roughly half of the store pages, in each of the control and variant groups.
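A minimal Python sketch of this city-level randomization, assuming a hypothetical mapping from each city page to the store pages it links to (the city and store names are made up). Whole cities are assigned to a group, and every store inherits its city's group, because the change is made on the city page that links to the store. Since cities have different numbers of stores, the store split is only roughly even.

```python
import random


def assign_groups(city_to_stores, seed=0):
    """Randomly split cities into control/variant; each store follows its city.

    city_to_stores: hypothetical dict of city page -> list of store pages it links to.
    Returns (city_group, store_group), each mapping a page to "control" or "variant".
    """
    cities = sorted(city_to_stores)  # sort first so a given seed is reproducible
    rng = random.Random(seed)
    rng.shuffle(cities)
    half = len(cities) // 2  # with an odd count, control gets the extra city
    city_group = {c: ("variant" if i < half else "control") for i, c in enumerate(cities)}
    store_group = {
        store: city_group[city]
        for city, stores in city_to_stores.items()
        for store in stores
    }
    return city_group, store_group


cities = {
    "houston-tx": ["houston-main-st", "houston-galleria"],
    "miami-fl": ["miami-brickell"],
    "austin-tx": ["austin-downtown"],
    "tampa-fl": ["tampa-westshore", "tampa-ybor"],
}
city_group, store_group = assign_groups(cities, seed=42)
print(city_group)
print(store_group)
```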

The change looked like this:

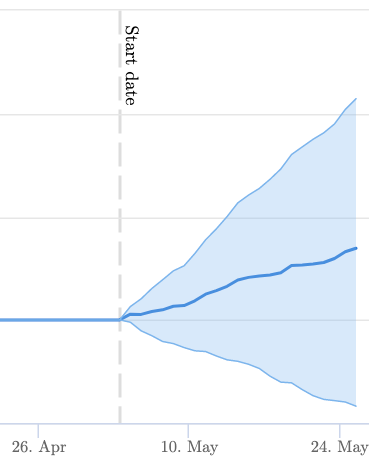

Here is the impact this change had on both city and store pages in aggregate:

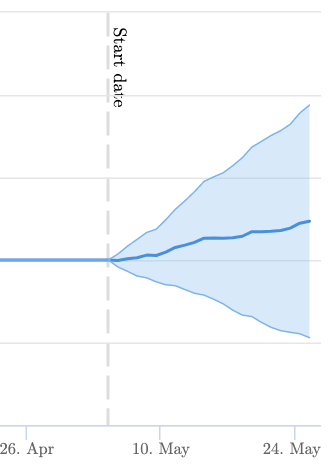

The impact on just city pages:

And the impact on just store pages:

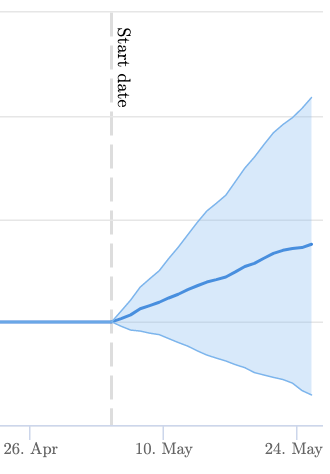

This change showed a good, but not conclusive, chance of a positive impact on store pages (the link targets), and was more likely to be positive than negative on the city pages as well. We estimate that the change had an impact of between -1% and +4% on the store pages. Because the test rested on a strong hypothesis, our customer decided to deploy the change despite not achieving a statistically significant result. You can read more about SearchPilot’s methodology for evaluating inconclusive test results here.

While the result of this particular test doesn’t prove or disprove whether this common technical SEO recommendation is universally beneficial, it does suggest that updating redirected internal links to point to their final destinations may have a slight positive effect. It’s worth noting that this type of fix could also have a beneficial sitewide impact that we wouldn’t be able to measure with a split test. As always, results vary between industries and websites, so if you are able to test this change, we recommend doing so.

The benefit of testing best-practice recommendations like this lies in understanding how to prioritize their implementation. Updating a large number of links can require development work. In this case, we might conclude that this change is less urgent than others in the queue, but still needs to be done. A testing-focused SEO strategy can help get high-impact changes shipped more quickly.

How our SEO split tests work

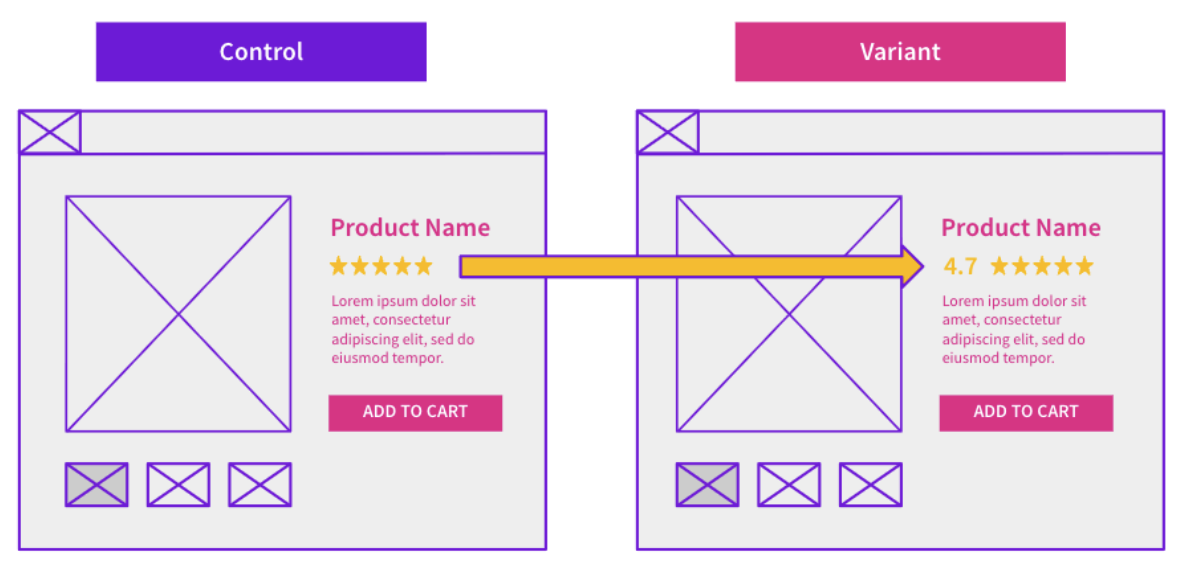

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in performance of the variant pages compared to the control, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome to a forecast, and comes with a confidence interval so we know how confident we can be that the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to clickthrough rate (more here).
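The forecast-versus-actual comparison in the list above can be illustrated with a deliberately simplified Python sketch. This is not SearchPilot's model: it assumes a hypothetical forecast that scales same-day control traffic by the variant/control traffic ratio observed before the change, and a naive normal-approximation interval that ignores day-to-day autocorrelation. The function names and sample numbers are illustrative only.

```python
import math
import statistics


def estimate_uplift(control_pre, variant_pre, control_post, variant_post, z=1.96):
    """Compare variant traffic after the change to a control-based forecast.

    Simplified assumption: forecast = same-day control traffic * the
    variant/control ratio observed before the change.
    Returns (mean_uplift, (low, high)) as fractions, e.g. 0.02 == +2%.
    """
    ratio = sum(variant_pre) / sum(control_pre)
    forecast = [c * ratio for c in control_post]
    daily = [a / f - 1 for a, f in zip(variant_post, forecast)]
    mean = statistics.mean(daily)
    # Naive standard error of the mean daily uplift (treats days as independent).
    se = statistics.stdev(daily) / math.sqrt(len(daily))
    return mean, (mean - z * se, mean + z * se)


def verdict(interval):
    """Label a result by whether its interval excludes zero."""
    low, high = interval
    if low > 0:
        return "positive"
    if high < 0:
        return "negative"
    return "inconclusive"


mean, interval = estimate_uplift(
    control_pre=[100, 100, 100, 100],
    variant_pre=[50, 50, 50, 50],
    control_post=[100, 100, 100, 100],
    variant_post=[48, 52, 49, 53],
)
print(verdict(interval))  # inconclusive
```

Because the control pages share the same seasonality and algorithm updates, they absorb those effects in the forecast. An interval that straddles zero, like the -1% to +4% store-page estimate in this case study, is what makes a result inconclusive.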

Read more about how SEO testing works or get a demo of the SearchPilot platform.