Start here: how our SEO split tests work

If you aren't familiar with the fundamentals of how we run controlled SEO experiments that form the basis of all our case studies, then you might find it useful to start by reading the explanation at the end of this article before digesting the details of the case study below.

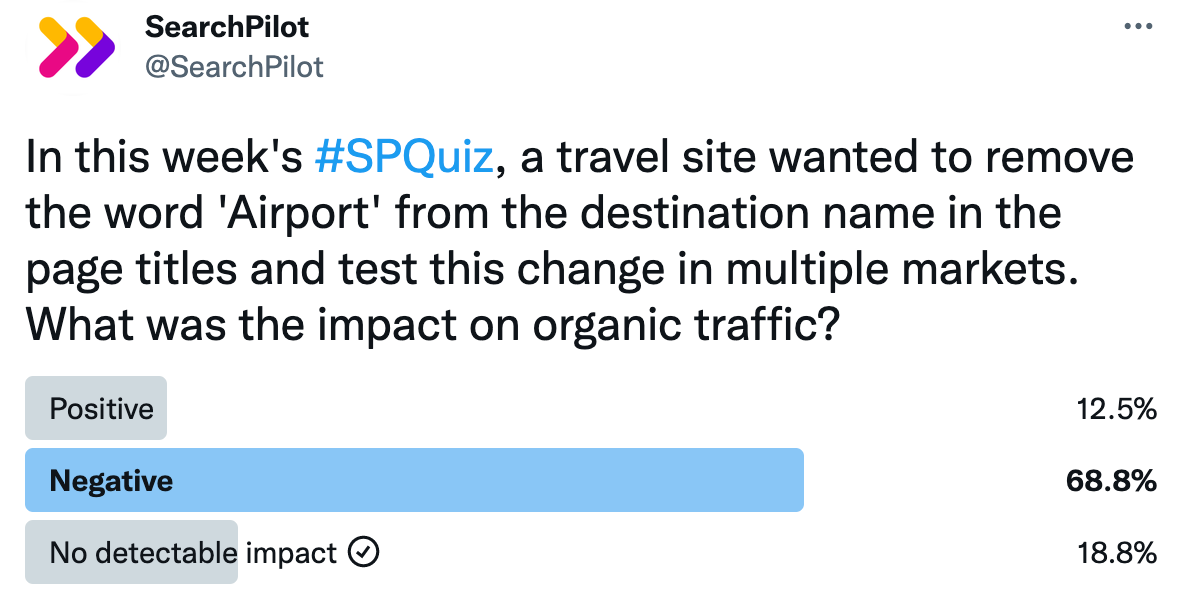

In this week’s #SPQuiz, we asked our followers what they thought would happen to organic traffic if we removed the word ‘Airport’ from the destination name in page titles on a travel website. This might seem like a strange thing to do, but it was based on an interesting hypothesis prompted by Google’s increased tendency to rewrite titles in the search results. We tested this change in multiple markets.

Here is what they thought:

About two-thirds of our followers thought this change would have a negative impact on organic traffic, and the remaining votes were split almost evenly between a positive impact and no detectable impact. In this case, the majority was wrong: in most markets the change had no detectable impact, and in one market it had a positive impact.

To learn more, read the full case study below.

The Case Study

Since Google began rewriting page titles en masse, title tag tests have had a new variable. Google has always rewritten some title tags, but the frequency increased significantly in the latter half of 2021.

Historically, title tag tests primarily measured how a new title tag affected rankings and click-through rates (CTRs) compared with the original title tag. Today, we’re testing something more convoluted: how the change affects rankings, and how the way Google chooses to display the new title in search results affects CTRs compared with how it displayed the title before the change.

This additional variable makes title tag tests harder to unpick, but it also makes testing them that much more important. In our experience, title tag tests are the type of test most likely to reach statistical significance, and they tend to produce the largest impacts on organic traffic, though those impacts can swing in either direction. For SEO, title tag changes tend to fall into the high-risk, high-reward category.

You should test any change you plan to make to your title tags to limit downside risk, but you should also regularly test new title tag formats: if you find the right one, it’s an easy source of organic traffic gains.

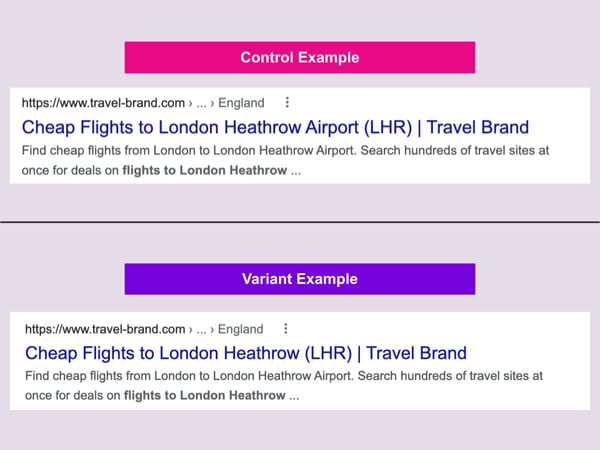

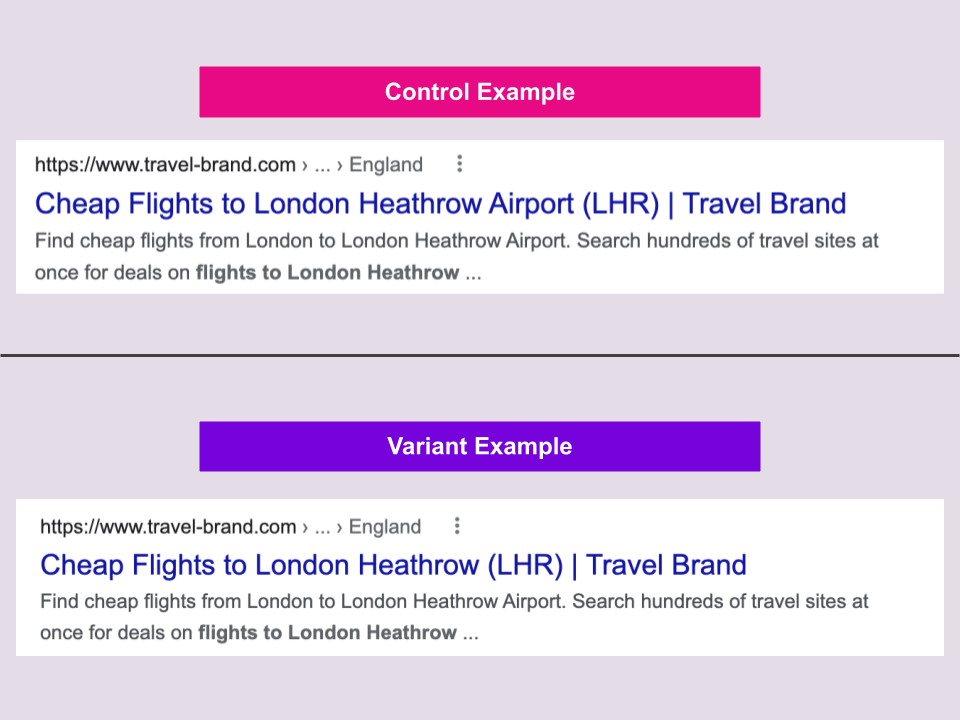

One of our travel customers wanted to test removing the word “Airport” from the destination name in the title tags of their flights-to-airport pages. For example, instead of “Flights to London Heathrow Airport”, the title tag would read “Flights to London Heathrow”.

The hypothesis was that, since Google’s rewrites show a preference for shorter titles, shortening the title tags could improve CTRs. The primary aim was to reach a shorter baseline title format without harming organic traffic, which they could then iterate on with further testing. They tested this in three markets: the United Kingdom, the United States, and France.
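To make the tested change concrete, here is a minimal Python sketch of the control and variant title formats. It assumes a hypothetical `Flights to {destination}` template and a hypothetical helper, `strip_airport_suffix`, that drops a trailing “Airport” from the destination name; it is an illustration, not the customer’s actual implementation. It also prints each title’s length, since the hypothesis rests on the variant being shorter.

```python
def strip_airport_suffix(destination: str) -> str:
    """Drop a trailing ' Airport' from a destination name (hypothetical helper)."""
    suffix = " Airport"
    if destination.endswith(suffix):
        return destination[: -len(suffix)]
    return destination


def build_title(destination: str, drop_airport: bool = False) -> str:
    """Build a page title from an assumed 'Flights to {destination}' template."""
    if drop_airport:
        destination = strip_airport_suffix(destination)
    return f"Flights to {destination}"


if __name__ == "__main__":
    control = build_title("London Heathrow Airport")
    variant = build_title("London Heathrow Airport", drop_airport=True)
    print(control, len(control))  # Flights to London Heathrow Airport 34
    print(variant, len(variant))  # Flights to London Heathrow 26
```

Destinations without the suffix pass through unchanged, so the same template can be applied to every page in the test group.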

When they ran the experiments, however, none of them had a detectable impact.

They would of course have liked a positive uplift, but because their aim was a shorter title format to iterate on with further testing, the lack of a clear impact meant they could move forward with this format as their baseline.

They still wanted to check whether the new format changed how the titles were displayed in search results. On closer inspection, they found that in the United States the change caused Google to start rewriting titles it had previously displayed as written, and Google’s rewritten versions still included the word “Airport.” Only a handful of pages showed the updated title, without “Airport,” in the search results.

When we ended the test, Google stopped rewriting the title tags. This did not happen in the other markets. To be more confident that the test itself had caused Google to start rewriting the titles, the customer decided to re-run the experiment in the United States. The follow-up test was identical, just run three weeks later.

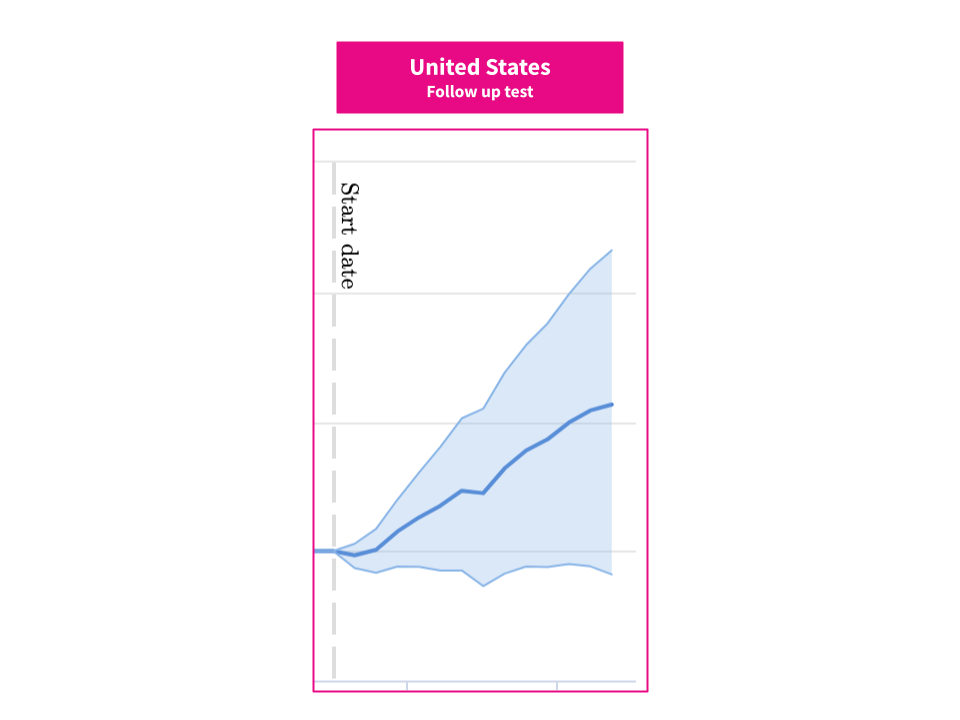

This was the result:

Although not statistically significant at the 95% confidence level, this change had a positive impact at the 90% confidence level, with an uplift of 5%.

As with any test, it’s possible this was a false positive. At a 95% confidence level, we expect about 1 in 20 experiments where the change has no real effect to come out as false positives, and that rate rises as the confidence level falls (to about 1 in 10 at 90%). However, our customer found evidence that this time Google displayed the updated title on a higher percentage of pages than in the first test, although still not on all of them.
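The false-positive arithmetic can be sketched in a few lines of Python. This assumes the simplest case, where the change under test has no real effect, so the expected false-positive rate is just one minus the confidence level (the significance level α). It is a back-of-the-envelope illustration, not a description of how the SearchPilot analysis computes anything.

```python
def false_positive_rate(confidence_level: float) -> float:
    """Expected share of no-effect experiments that still look significant.

    Assumes the significance threshold alpha = 1 - confidence_level.
    """
    if not 0 < confidence_level < 1:
        raise ValueError("confidence_level must be between 0 and 1")
    return 1 - confidence_level


for level in (0.95, 0.90):
    rate = false_positive_rate(level)
    # Express the rate as "1 in N" experiments.
    print(f"{level:.0%} confidence: {rate:.0%} false positives, about 1 in {round(1 / rate)}")
```

Running it prints roughly 1 in 20 at 95% and 1 in 10 at 90%, which is why a result that only clears the 90% bar deserves extra corroboration, such as the evidence from the search results described above.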

The main difference between the two experiments was timing. Google’s algorithms are constantly being updated, and changes between the two tests to how Google decides which title to display may have made it more likely to respect the new title the second time around. That’s a good reminder to retest changes at a later date, especially when we have reason to believe Google has significantly changed how it handles that specific factor.

A final takeaway: if you’re running title tag tests, check how Google is displaying your new title tags in the search results. A practical approach is to track the displayed titles for the head terms that a sample of your variant pages rank for. Knowing whether, and how, Google rewrote the title tags will help inform future tests and follow-ups.
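Here is a minimal Python sketch of that check. It assumes you have already collected, for each sampled variant page, its title tag and the title Google displayed for a head term; how you collect displayed titles is up to you. The `TitleObservation` record, its field names, and the sample values are all hypothetical. Each page is labeled as shown as-is, truncated (Google cut the title short with an ellipsis), or rewritten, and the share of rewritten pages is reported.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class TitleObservation:
    """Hypothetical record: a variant page's title tag vs. the title Google displayed."""
    url: str
    title_tag: str
    displayed_title: str


def classify(obs: TitleObservation) -> str:
    """Label how Google treated the title tag: 'as_is', 'truncated', or 'rewritten'."""
    tag = obs.title_tag.strip()
    shown = obs.displayed_title.strip()
    if shown == tag:
        return "as_is"
    # Treat "<prefix of the title tag>..." as truncation rather than a rewrite.
    for ellipsis in ("...", "\u2026"):  # ASCII dots or the Unicode ellipsis character
        prefix = shown[: -len(ellipsis)].rstrip()
        if shown.endswith(ellipsis) and prefix and tag.startswith(prefix):
            return "truncated"
    return "rewritten"


def rewrite_rate(observations: list[TitleObservation]) -> float:
    """Share of sampled pages whose displayed title was rewritten."""
    if not observations:
        return 0.0
    rewritten = sum(1 for obs in observations if classify(obs) == "rewritten")
    return rewritten / len(observations)


if __name__ == "__main__":
    # Hypothetical sample: one title shown as written, one rewritten to add "Airport" back.
    sample = [
        TitleObservation("/flights-to-lhr", "Flights to London Heathrow", "Flights to London Heathrow"),
        TitleObservation("/flights-to-jfk", "Flights to New York JFK", "Cheap Flights to New York JFK Airport"),
    ]
    for obs in sample:
        print(obs.url, classify(obs))
    print(rewrite_rate(sample))  # 0.5
```

Comparing the rewrite rate for the same sample of pages before, during, and after a test is one way to spot the kind of change described in this case study.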

Our customer took these positive indications to mean that the new title format in the United States would serve as a good baseline for further testing, even though it was triggering rewrites (read here to learn more about how we decide whether to deploy tests that aren’t statistically significant at the 95% confidence level). The test also showed just how unpredictable Google’s title rewrites are, and suggests that the algorithm Google uses to decide how titles are displayed is likely to change regularly.

How our SEO split tests work

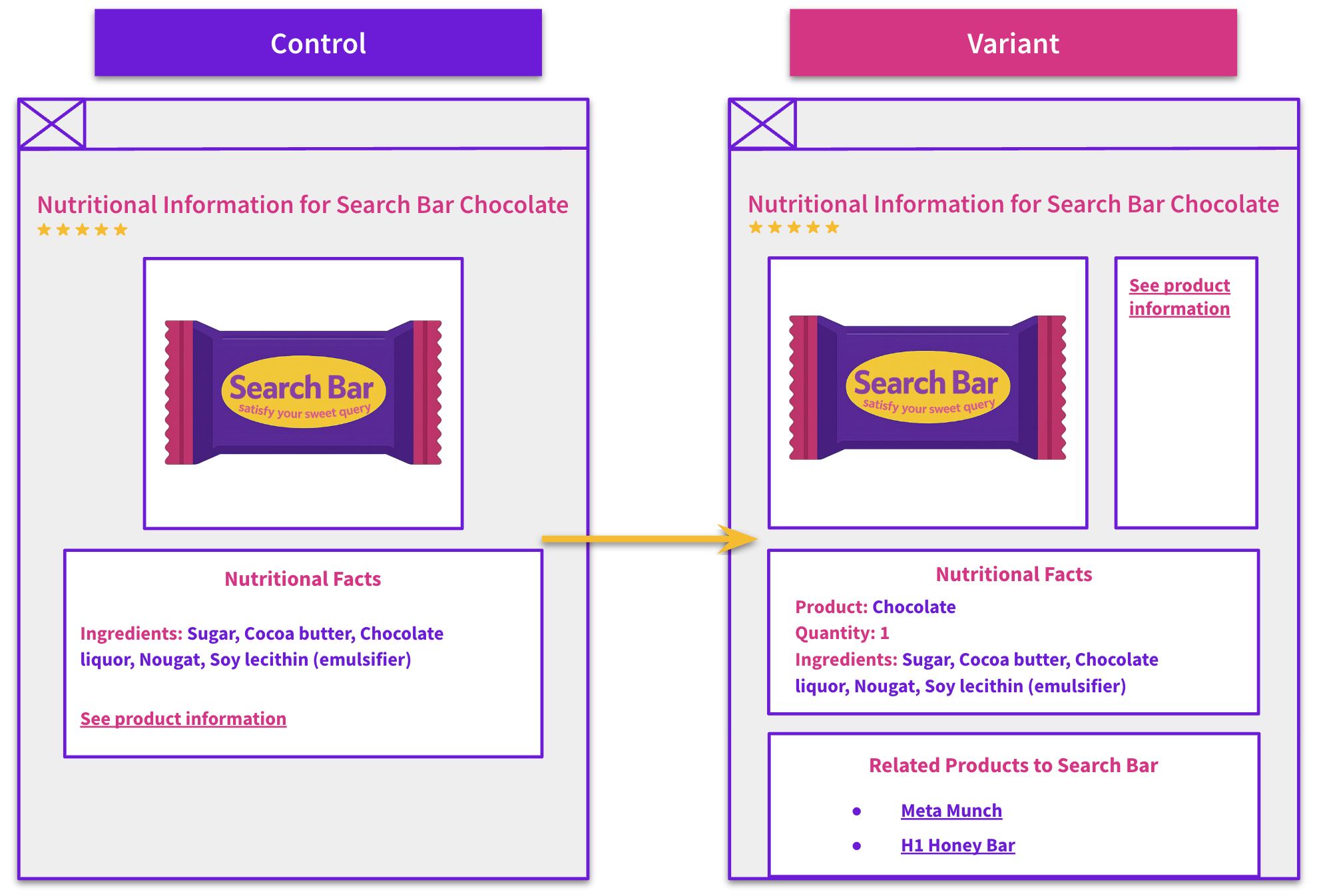

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- Because we measure the performance of the variant pages relative to the control pages, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome with a forecast and reports a confidence interval, so we know how certain we can be that the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to click-through rate (more here).
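As a rough illustration of the forecast comparison in the list above, the Python sketch below uses a simple ratio-based forecast. It is not SearchPilot’s actual model, which isn’t described here. It assumes that the variant/control traffic ratio observed before the test would have held during the test had nothing changed, forecasts variant traffic from control traffic on that basis, and reports the difference between actual and forecast variant traffic as a percentage uplift. The function names and traffic figures are hypothetical, and the sketch omits the confidence interval that the real analysis reports.

```python
from __future__ import annotations


def forecast_variant(pre_variant: list[float], pre_control: list[float],
                     test_control: list[float]) -> list[float]:
    """Forecast variant traffic during the test by scaling control traffic.

    Assumes the pre-test variant/control ratio would have held without the change.
    """
    ratio = sum(pre_variant) / sum(pre_control)
    return [ratio * c for c in test_control]


def uplift(actual_variant: list[float], forecast: list[float]) -> float:
    """Percentage difference between actual and forecast variant traffic."""
    expected = sum(forecast)
    return 100 * (sum(actual_variant) - expected) / expected


if __name__ == "__main__":
    # Hypothetical daily organic sessions, not data from this case study.
    forecast = forecast_variant([100, 110, 90], [200, 220, 180], [210, 190, 200])
    print(forecast)  # [105.0, 95.0, 100.0]
    print(f"{uplift([106, 99, 104], forecast):.1f}%")  # 3.0%
```

Because the forecast is driven by the control pages, anything that moves both groups equally, such as seasonality or a sitewide change, shifts the forecast along with the actual traffic and cancels out of the uplift.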

Read more about how SEO A/B testing works or get a demo of the SearchPilot platform.