The Case Study

Search engines rely primarily on whatever content they can find on a page to understand its subject and determine which search queries will be relevant. PLPs (product listing pages) serving as category hubs for ecommerce sites often just contain long lists of links, with minimal actual content of their own, and some SEOs have expressed concerns that these pages could appear “thin” to search engines.

Historically, many have considered it an SEO best practice to add “SEO content” to category pages to improve their rankings. Others have disputed this, citing low user engagement with this type of content and Google’s instructions to create content for humans, not bots.

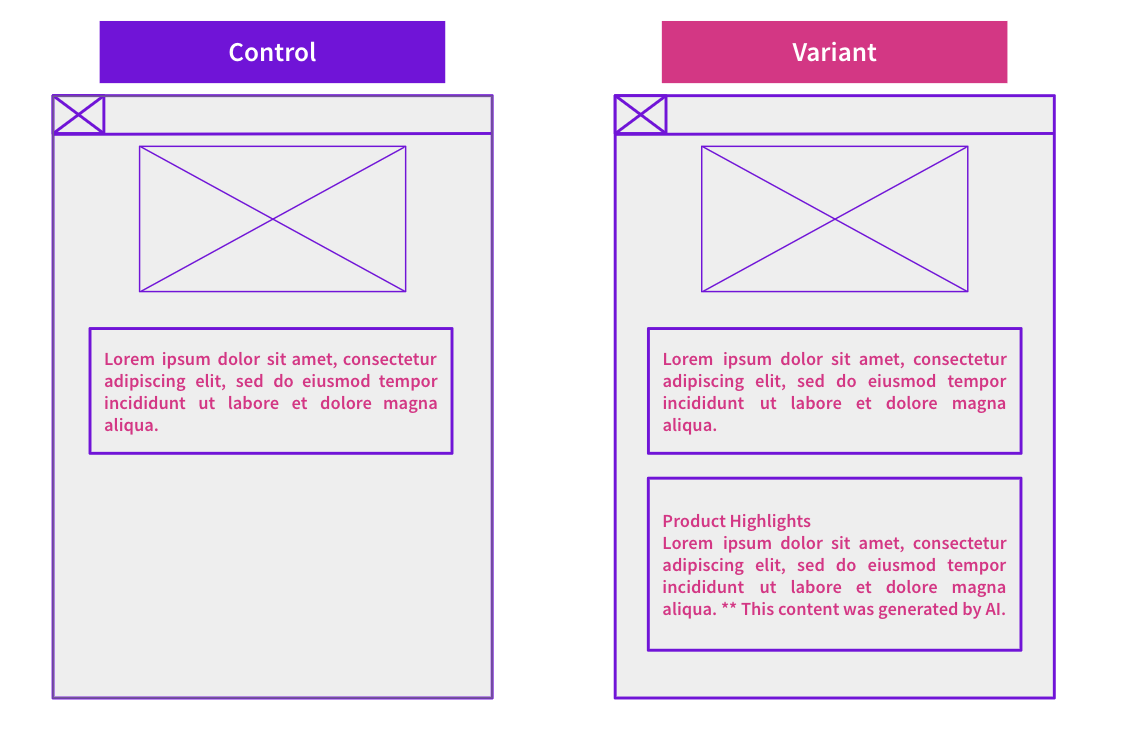

We hypothesized that the SEO content on category pages was irrelevant and doing more harm than good, and that removing it would increase our customer’s rankings for the more relevant keywords remaining on the pages.

What Was Changed

We deleted the SEO content at the bottom of the customer’s category pages.

Results

Our test analysis revealed that removing SEO content from category pages did lead to a statistically significant increase in organic traffic from mobile devices, while the influence on organic traffic from desktop devices was negligible:

Mobile devices

Desktop devices

Our best theory for why this impact was limited to mobile traffic is that the SEO content added to the scroll depth of the page and was perhaps negatively impacting user experience.

We followed up this experiment by retesting the hypothesis on another part of their site that hosted bot-focused SEO content, and saw an uplift to mobile traffic there as well! Seeing the same positive impact on multiple parts of their site helps reaffirm our experiment results.

This week's experiment is a good example of why it's important to A/B test SEO changes rather than relying on the promise of SEO best practices.