Start here: how our SEO split tests work

If you aren't familiar with the fundamentals of how we run controlled SEO experiments that form the basis of all our case studies, then you might find it useful to start by reading the explanation at the end of this article before digesting the details of the case study below.

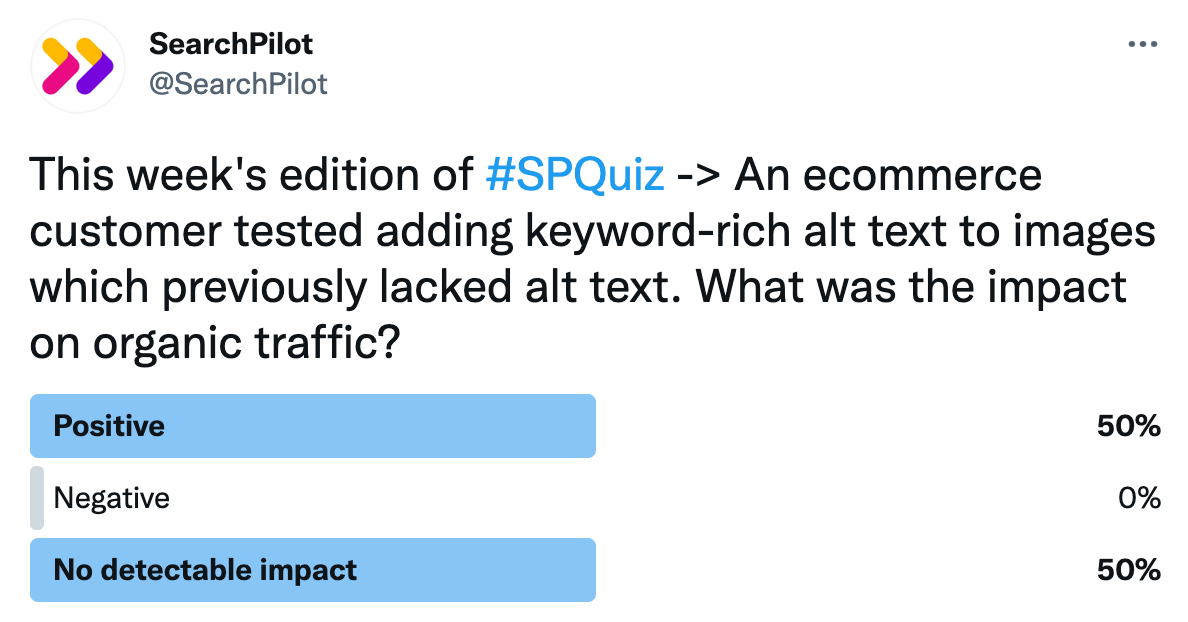

For this week’s #SPQuiz on Twitter, we asked our followers what they thought would happen when we added keyword-rich alt text to the images on an ecommerce website that previously didn’t have any.

The vote was a true 50/50 split between voters who thought the impact would be positive and those who thought it would have no detectable impact. Not one voter thought that adding alt text would have a negative impact.

50% of our voters were right! Adding the keyword-rich alt text had no detectable impact on organic traffic to these pages. You can read more about the details below.

The Case Study

SEOs commonly recommend including and/or optimising the alt text on images as a way to improve the organic performance of the pages that contain them. Google uses alt text to inform rankings for Google Image search, and John Mueller has previously said (2017) that it can be used to inform desktop rankings like any other on-page text. Google’s documentation also recommends alt text for its benefits beyond SEO: the alt text of an image provides useful anchor text if the image is used as a link, and it improves accessibility, since it enables screen readers and other assistive technologies to describe the page more completely and accurately.

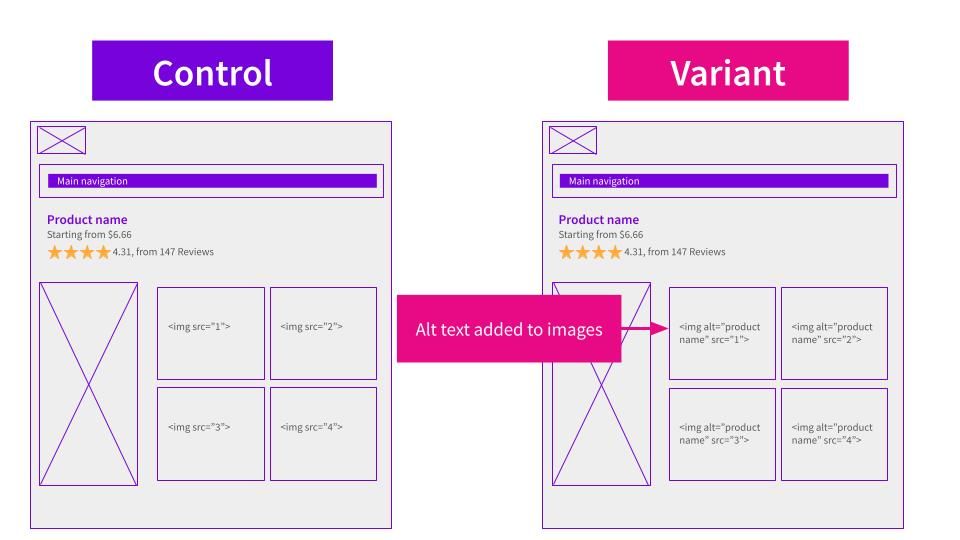

An ecommerce customer decided to test adding alt text to the product images on their category pages. Each page included images for all the different products, with most pages showing 24 products in the default view. Previously, none of these images had alt text. To produce reliable results, we run our A/B tests on groups of pages with over 1,000 sessions per day, and these groups often include thousands of pages. We therefore often face the challenge of building website changes at scale with limited manual input.

Because of that difficulty, we ended up using the same alt text for every product image on a page in this test. For example, on the “men’s t-shirts” page, every image would have the alt text “men’s t-shirt”, instead of each image having unique alt text like “white men’s t-shirt”.
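To make the difference between the two approaches concrete, here is a minimal Python sketch. It is not the customer's implementation: the `Product` record, its field names, and the helper functions are hypothetical and exist only for illustration. `uniform_alt` mirrors what was tested (one keyword repeated on every image), while `unique_alt` shows the kind of per-image text that was not feasible to build at scale.

```python
import html
from dataclasses import dataclass


@dataclass
class Product:
    """Hypothetical product record; field names are illustrative only."""
    colour: str
    image_url: str


def uniform_alt(category_keyword: str) -> str:
    """Approach used in the test: the same keyword for every image on the page."""
    return category_keyword


def unique_alt(category_keyword: str, product: Product) -> str:
    """Approach not tested: prefix a product attribute so each alt text differs."""
    return f"{product.colour} {category_keyword}"


def render_img(src: str, alt: str) -> str:
    """Render an <img> tag, escaping both attribute values."""
    return f'<img src="{html.escape(src)}" alt="{html.escape(alt)}">'


# Illustrative data only.
products = [Product("white", "/img/tee-1.jpg"), Product("black", "/img/tee-2.jpg")]
keyword = "men's t-shirt"

variant_tags = [render_img(p.image_url, uniform_alt(keyword)) for p in products]
unique_tags = [render_img(p.image_url, unique_alt(keyword, p)) for p in products]
```

The uniform version needs only the category keyword, which is why it scales to thousands of pages without manual input. The unique version depends on structured product attributes being available for every image.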

While we knew this approach risked Google perceiving the alt text as keyword stuffing or spam, we still felt the alt text accurately described the images and that the test was worth running. Our hypothesis was that we could improve relevance signals for the primary keyword on each page and increase organic search traffic, including image search traffic. Here’s a mock-up of control and variant:

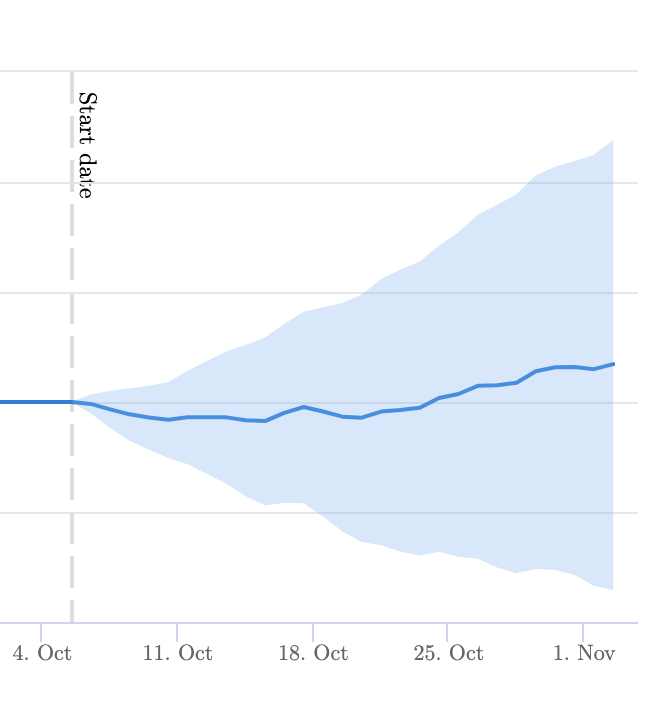

These were the results:

This experiment was inconclusive, meaning we were not able to detect any impact on organic traffic with statistical confidence.

The fact that we were unable to make the alt text unique to each image may have contributed to this result, although we would need to run a follow-up experiment to test that hypothesis. Also, while Google Image search traffic was included in the results, it did not make up a significant portion of the organic traffic to these pages. Pure image search performance may therefore have improved, but by too little for our model to detect as an effect on total search traffic. For a website that gets a more significant portion of its traffic from image search, alt text might have a greater impact.

Following the default-to-deploy mentality, the customer decided to roll this change out anyway. The reasoning was that the change improved accessibility, it was in line with the official guidance on image search, and the test did not show a negative impact.

How our SEO split tests work

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in the performance of the variant pages compared to the control pages, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external factor.

- The statistical analysis compares the actual outcome to a forecast, and comes with a confidence interval so we know how certain we are that the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to clickthrough rate.
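The idea of comparing an actual outcome to a forecast, and calling a result inconclusive when the confidence interval includes zero, can be sketched in a few lines of Python. This is a deliberately simplified illustration and not the model used for our case studies: it assumes the forecast is just control traffic scaled by the pre-test variant/control ratio, and it uses a plain bootstrap for the interval. All function names and the toy numbers are hypothetical.

```python
import random
import statistics


def forecast_variant(control_pre, variant_pre, control_post):
    """Forecast variant traffic during the test by scaling control traffic
    by the variant/control ratio observed before the test (an assumption)."""
    ratio = sum(variant_pre) / sum(control_pre)
    return [c * ratio for c in control_post]


def uplift_interval(actual, forecast, n_boot=2000, level=0.95, seed=0):
    """Bootstrap a confidence interval for the mean daily relative uplift."""
    diffs = [(a - f) / f for a, f in zip(actual, forecast)]
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    means = sorted(
        statistics.fmean(rng.choices(diffs, k=len(diffs))) for _ in range(n_boot)
    )
    lo = means[int((1 - level) / 2 * n_boot)]
    hi = means[int((1 + level) / 2 * n_boot) - 1]
    return statistics.fmean(diffs), lo, hi


def verdict(lo, hi):
    """A result is only called positive or negative if the interval excludes zero."""
    if lo > 0:
        return "positive"
    if hi < 0:
        return "negative"
    return "inconclusive"


# Toy daily session counts, for illustration only.
forecast = forecast_variant([100, 100], [50, 50], [200, 100])
mean, lo, hi = uplift_interval([110, 55], forecast)
result = verdict(lo, hi)
```

Because the forecast is derived from the control pages, anything that affects both groups equally (seasonality, algorithm updates) moves the forecast as well as the actual traffic, and so does not show up as uplift.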

Read more about how SEO testing works or get a demo of the SearchPilot platform.