Start here: how our SEO split tests work

If you aren't familiar with the fundamentals of how we run controlled SEO experiments that form the basis of all our case studies, then you might find it useful to start by reading the explanation at the end of this article before digesting the details of the case study below.

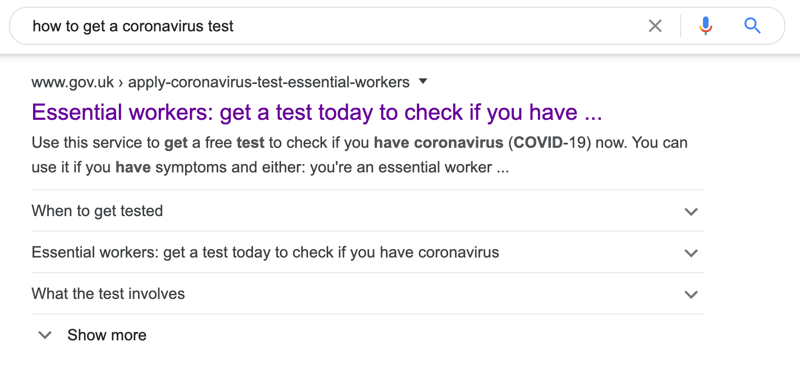

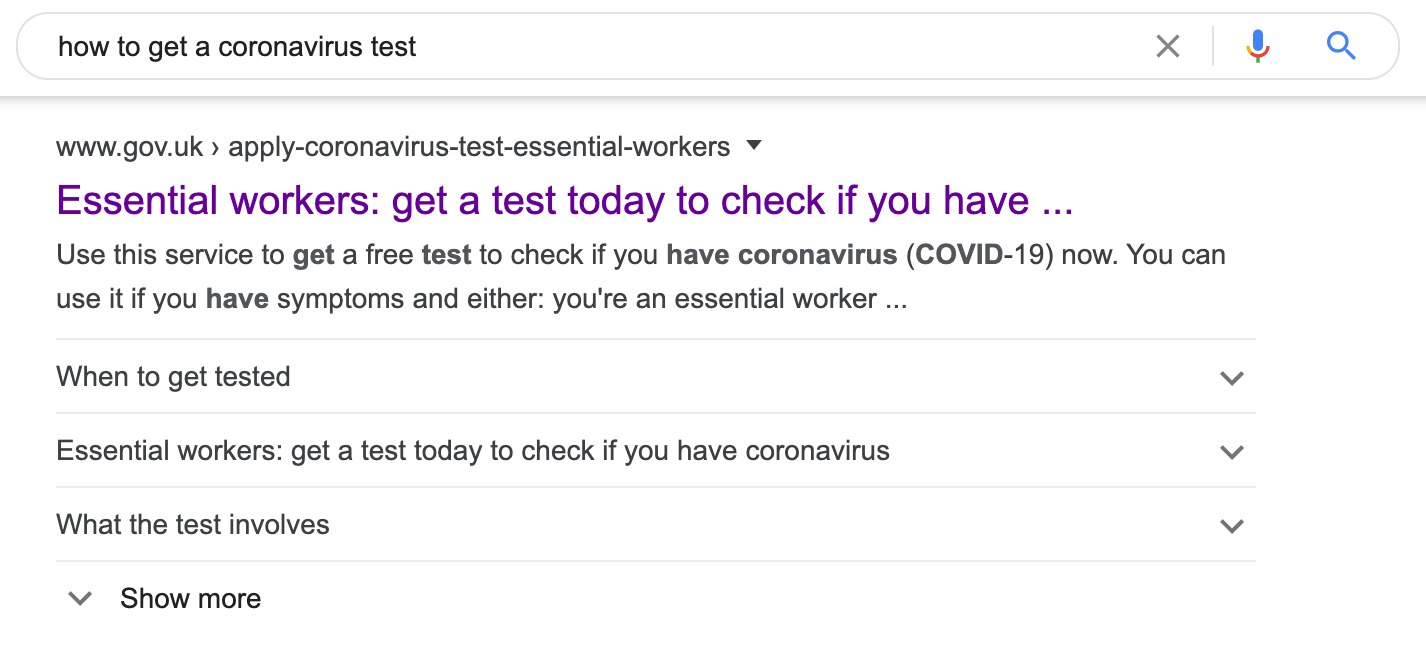

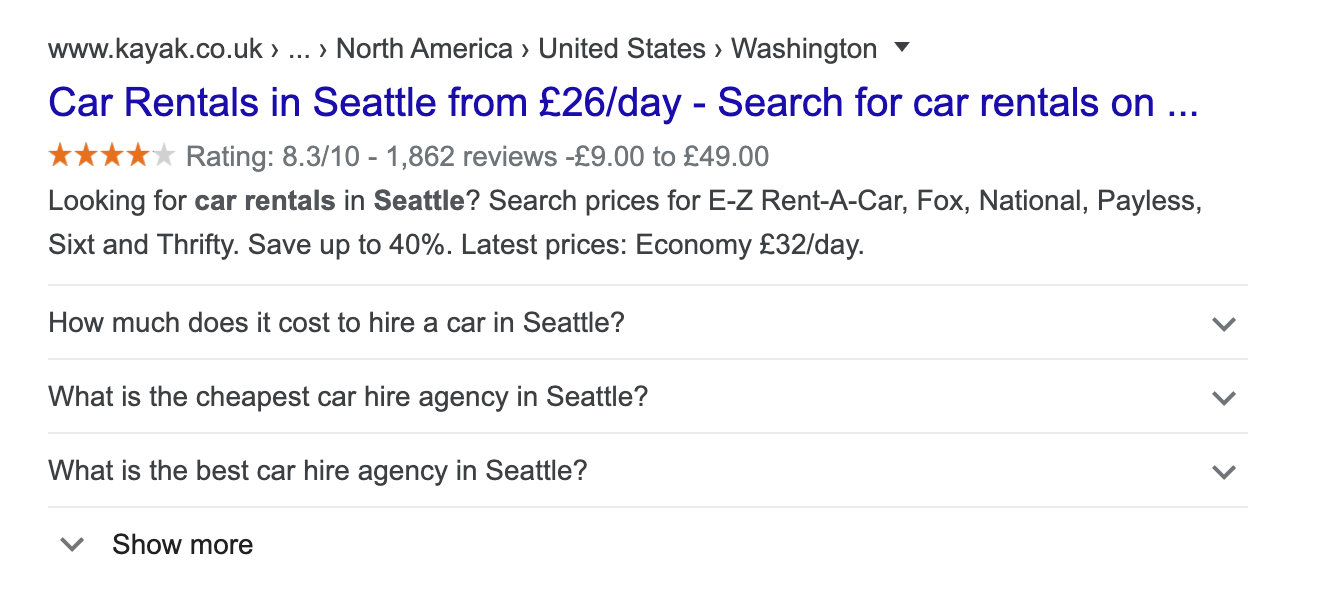

FAQ schema is a form of structured data markup that can earn rich snippets in search results. It was released in 2019 and lets sites mark up the FAQ content on their pages so that it appears for users directly in the SERP (Search Engine Results Page). When implemented, it can transform your web page’s search result to look like this:

Not only do these rich snippets give users informative content directly in the results, they also created quite a buzz in the industry when SEOs discovered they could be used to take up more real estate on the SERP, pushing competitors further down the page.
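For reference, FAQ markup is commonly added as a schema.org `FAQPage` object inside a JSON-LD `<script>` tag. The sketch below builds that markup from question/answer pairs. The helper names `faq_jsonld` and `faq_script_tag` and the sample question are hypothetical, and any real markup should be checked against Google’s FAQ structured-data documentation before it ships.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage JSON-LD string from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    # Escape "</" as "<\/" (still valid JSON) so answer text cannot close the script tag early.
    return json.dumps(data, indent=2).replace("</", "<\\/")


def faq_script_tag(pairs):
    """Wrap the JSON-LD in the <script> tag that is placed in the page HTML."""
    return '<script type="application/ld+json">\n' + faq_jsonld(pairs) + "\n</script>"


if __name__ == "__main__":
    # Hypothetical content; the markup should mirror the FAQ text that is visible on the page.
    print(faq_script_tag([("Can I cancel my booking?", "Yes, free of charge up to 24 hours before pickup.")]))
```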

In August last year, we shared our first case studies from testing FAQ schema. We reported uplifts in organic traffic ranging from 3% to 8%, despite concerns (our own and others’) that this new search feature would hurt organic click-through rates.

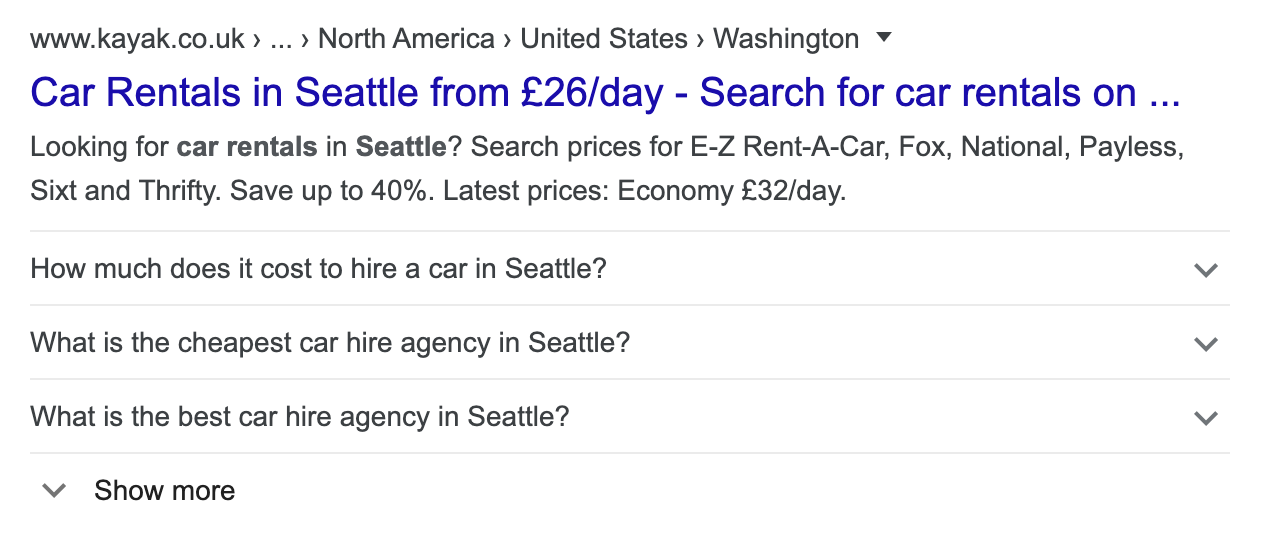

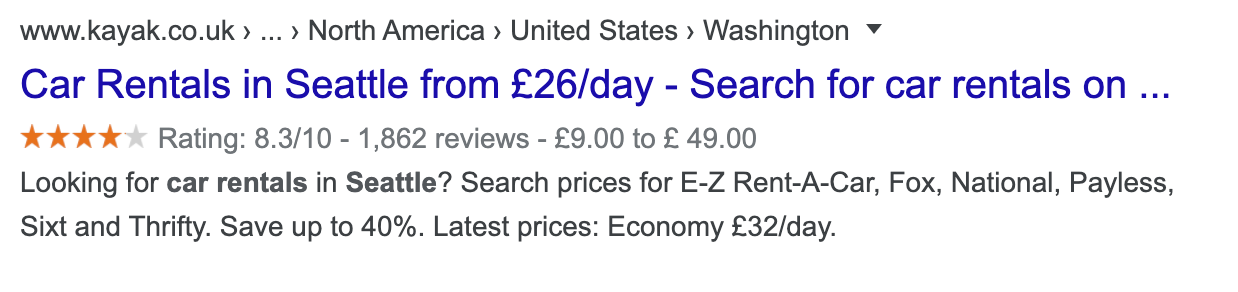

They were exciting results, but a lot has changed since then. First, since we published that case study, Google has changed how it handles FAQ schema. Gone are the days when you could go wild on the SERP with price, review, and FAQ schema and get rich snippets for all of them. Today, aside from some rare edge cases, Google will only show either price and review snippets or FAQ snippets:

FAQ only

Review and price only (you can also have just review or price markup)

But if you include review or price markup alongside FAQ schema, Google won’t award you both rich snippets in the SERP; we’ve found that it drops the FAQ snippet in favour of the rating and price snippets. In other words, this combination is no longer allowed:

The second development since last summer is that we have tested FAQ schema a lot more, for many clients across a range of industries. We are often asked whether we’ve discovered any silver bullets over time. The short answer is no, there aren’t any, but adding FAQ schema has been the most consistently positive change across all our test types: 67% of the FAQ schema tests we’ve run have been positive. These tests typically show uplifts in organic traffic of 4% to 15% (a slightly wider range than we reported before), and in one instance we saw a 25% uplift from simply adding FAQ schema to existing FAQ content!

Sadly, it’s still not the silver bullet we’ve all been waiting for. Not only do we have our own caveats, but there is also evidence that Google may be starting to prune FAQ schema from search results (along with other rich snippets). No two websites are ever the same, and the ever-changing nature of Google’s algorithm means nothing lasts forever, so use our data to suggest hypotheses and run your own tests where you can. Here’s a summary of what we’ve learned from testing FAQ schema over the past year:

Review and price schema may still be more valuable

Our sole negative FAQ schema test came when we removed review and price schema in order to make our FAQ schema appear in the SERP. This change resulted in a 5% decline in organic traffic. Our hypothesis is that in industries where review data is useful to users, such as ecommerce products, car rentals, and hotels, review schema is likely to be more valuable.

For pages where review schema is either redundant or not relevant at all, FAQ schema seems to win. However, we need to do more testing to confirm this hypothesis and we would definitely still recommend testing which is more valuable for your own website first.

Uplifts tend to be higher on mobile than on desktop

For positive FAQ schema tests, when we split the results by device, the uplift in organic traffic tends to be higher on mobile than on desktop, although we still see uplifts on both. This outcome isn’t particularly surprising, given that FAQ snippets take up even more of the SERP on mobile, but it is still good to confirm.

Impact on conversions

We now have the capability to do full funnel testing, which means we can measure the impact on both SEO and CRO in tandem.

Where we’ve been able to measure conversions, the results have been mixed. In some tests we’ve seen a positive impact on both organic traffic and conversion rates; in others we’ve seen an uplift in organic traffic but no impact, or a small negative impact, on conversion rates.

It’s a good reminder that what’s good for SEO is not always good for CRO, or vice versa, so when testing anything, be sure to weigh up both parts of the funnel.

What questions you include does matter

With one of our clients, after seeing a positive uplift from adding FAQ schema, we later tested changing the first question to one that included higher-search-volume keywords. This resulted in a further small but statistically significant uplift of 2% in organic traffic.

This change also had a statistically significant positive impact on conversion rate (0.2 percentage points), so the quality of your FAQ content still matters.
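Because conversion rate is itself a percentage, it helps to separate absolute from relative change. A 0.2 percentage-point gain is an absolute difference between two rates; its relative size depends on the baseline, which isn’t stated here. Assuming, purely for illustration, an expected conversion rate of 2.0% without the change:

```latex
\Delta_{\text{abs}} = p_1 - p_0 = 2.2\% - 2.0\% = 0.2\ \text{pp},
\qquad
\Delta_{\text{rel}} = \frac{p_1 - p_0}{p_0} = \frac{0.2}{2.0} = 10\%
```

Here $p_0$ is the (hypothetical) expected rate and $p_1$ the observed rate. With a hypothetical 5% baseline instead, the same 0.2 pp would be a 4% relative uplift.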

In summary, here are our takeaways:

- FAQ schema is the most consistently positive test we run, although still not a silver bullet

- Review and price schema may still be more valuable, or at least you should test which is more valuable for your website

- Uplifts tend to be higher on mobile than on desktop

- This change can have an impact on conversions in either direction, depending on the other markup you’re using

- The questions you include do make a difference

That’s all we have for you today! Stay tuned for our next case study. As always, feel free to get in touch via Twitter to learn more about this test, or fill in this form to sign up for a demo of the SearchPilot SEO A/B testing platform.

How our SEO split tests work

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in performance of the variant pages compared to the control, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome to a forecast and comes with a confidence interval, so we know how confident we can be that the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to click-through rate (more here).
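The forecast-and-interval comparison in the bullets above can be sketched in a few lines. This is a minimal, hypothetical illustration, not SearchPilot’s actual model: it assumes that, before the change, daily organic sessions on the variant pages are a linear function of sessions on the control pages. It fits that line by ordinary least squares, forecasts what the variant pages would have received during the test, and reports the relative gap with a rough normal-approximation interval. The function names and the 1.96 multiplier (about 95% under a normal assumption) are our own choices.

```python
import math
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class UpliftEstimate:
    uplift: float  # relative change of actual vs forecast, e.g. 0.05 means +5%
    low: float     # lower bound of the approximate interval
    high: float    # upper bound of the approximate interval


def fit_line(x, y):
    """Ordinary least-squares fit y ~ a + b*x; x must not be constant. Returns (a, b)."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return my - b * mx, b


def estimate_uplift(pre_control, pre_variant, test_control, test_variant, z=1.96):
    """Compare actual variant traffic in the test period with a forecast from control traffic.

    Hypothetical assumption: variant sessions track control sessions linearly,
    with independent, roughly normal daily noise.
    """
    a, b = fit_line(pre_control, pre_variant)
    # Residual spread in the pre-period estimates how noisy a single day is.
    residuals = [v - (a + b * c) for c, v in zip(pre_control, pre_variant)]
    sigma = stdev(residuals)

    forecast = sum(a + b * c for c in test_control)
    actual = sum(test_variant)
    # Spread of a sum of independent daily errors; ignores uncertainty in a and b.
    se = sigma * math.sqrt(len(test_control))

    uplift = (actual - forecast) / forecast
    margin = z * se / forecast
    return UpliftEstimate(uplift, uplift - margin, uplift + margin)
```

An `uplift` of 0.05 would mean the variant pages received about 5% more organic traffic than forecast. Real traffic is autocorrelated and the fitted line is itself uncertain, so this sketch understates the interval width; treat it as intuition rather than a substitute for a proper model.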

Read more about how SEO testing works or get a demo of the SearchPilot platform.