Start here: how our SEO split tests work

If you aren't familiar with the fundamentals of how we run controlled SEO experiments that form the basis of all our case studies, then you might find it useful to start by reading the explanation at the end of this article before digesting the details of the case study below.

The ongoing dialogue between webmasters and Google's representatives has always been full of SEOs sharing case studies where what they saw out in the wild didn't match what the powers-that-be at Google said should happen with its algorithm.

Single examples, however, are rarely sufficient evidence to confidently dispute information that comes straight from the horse’s mouth. Many factors affect organic traffic. A chart of organic traffic over time, with an arrow pointing to the date we changed our website, can make a strong case for cause and effect, but it still lacks the backing of a controlled experiment.

Being able to test SEO changes in a more controlled way through SearchPilot has given us a new source of authoritative evidence on what does or doesn’t improve your organic traffic, regardless of what comes from the horse’s mouth or what best practice says.

This case study is one of many examples where our test results have challenged what we heard from Google. In this case, the question was what happens when you bring content that was previously hidden behind tabs and accordions onto the page.

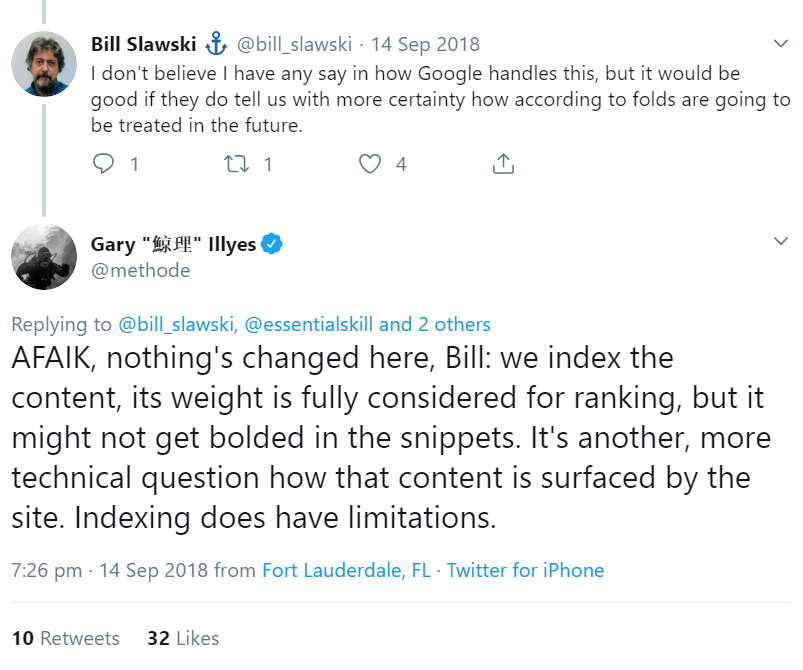

When Gary Illyes was asked about this type of content on Twitter in September 2018, this was his response:

Another user replied to Gary saying he had authoritative tests proving otherwise. We now have further evidence to support him from a split test we ran on Iceland Groceries.

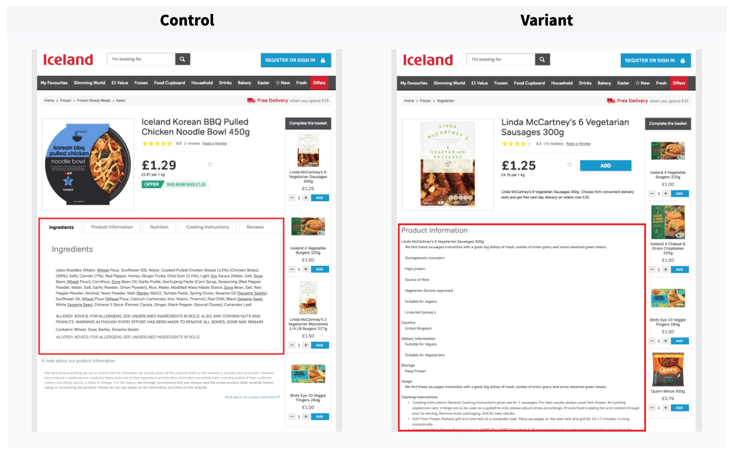

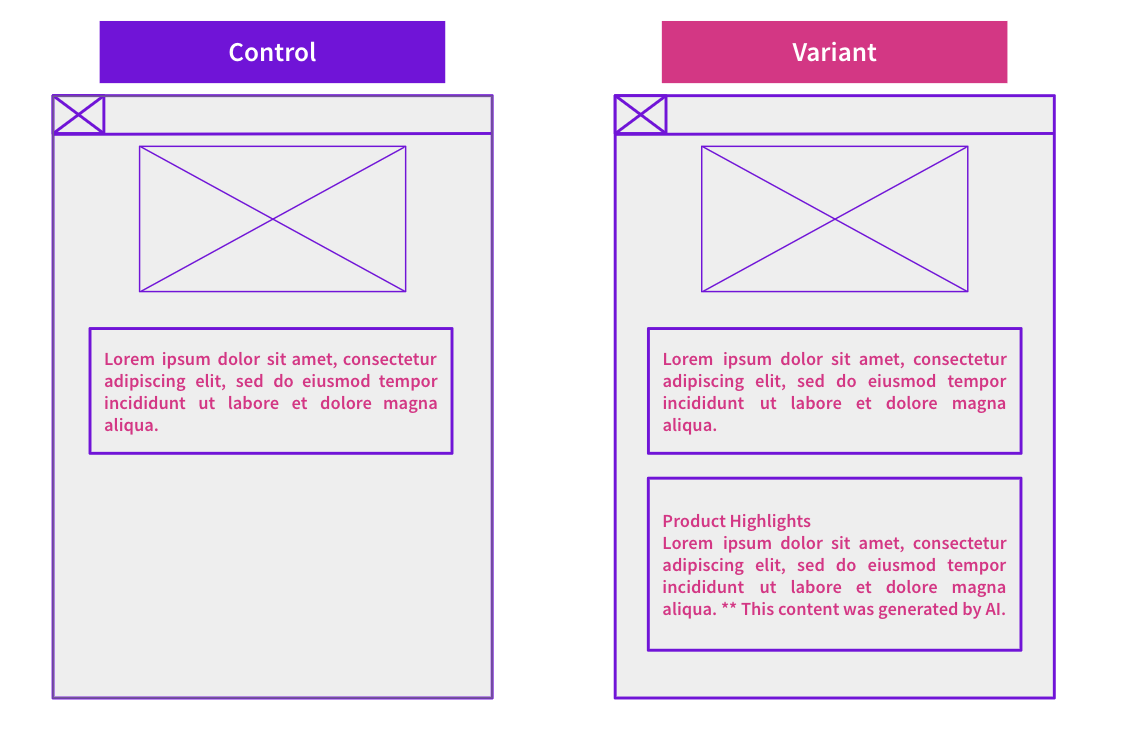

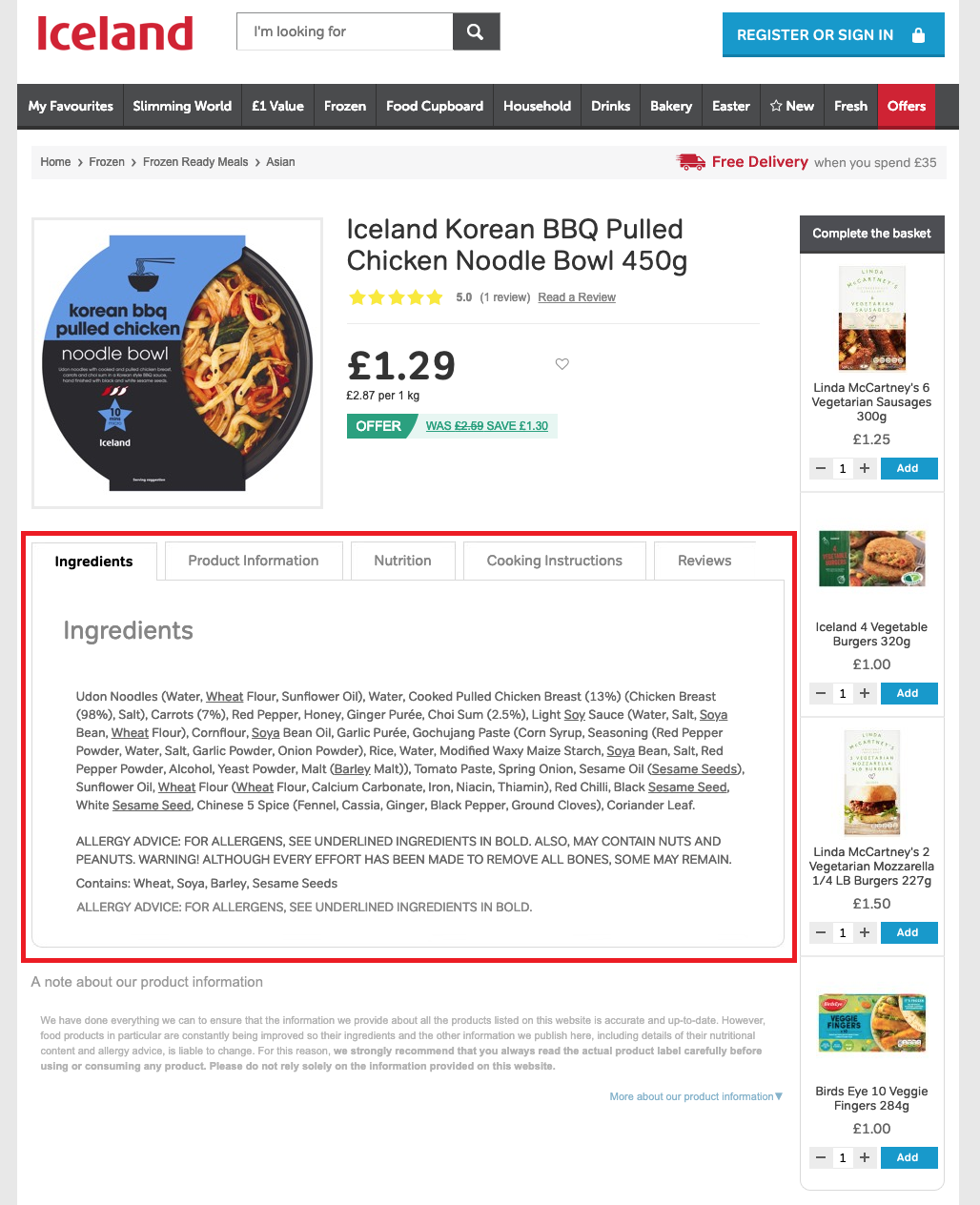

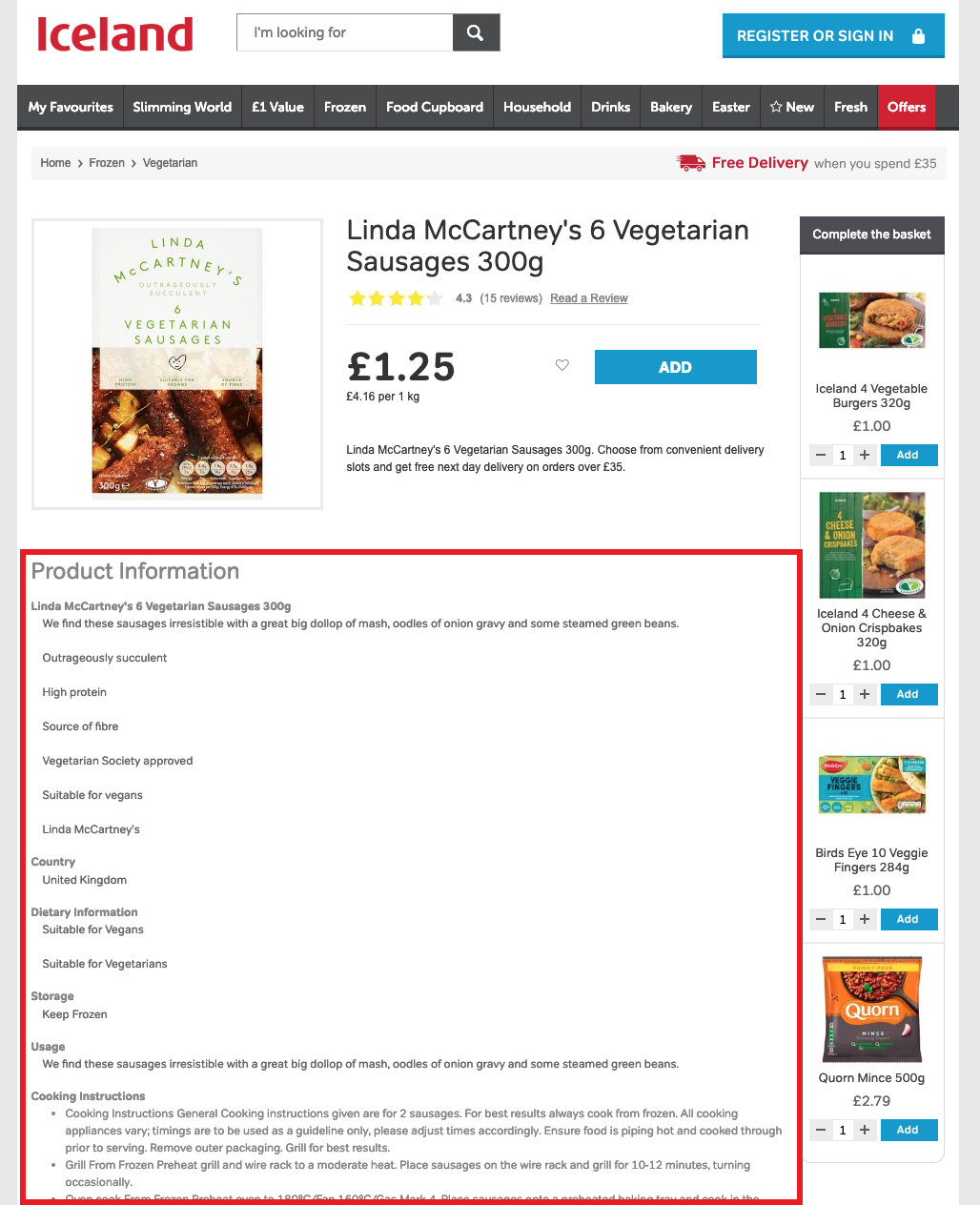

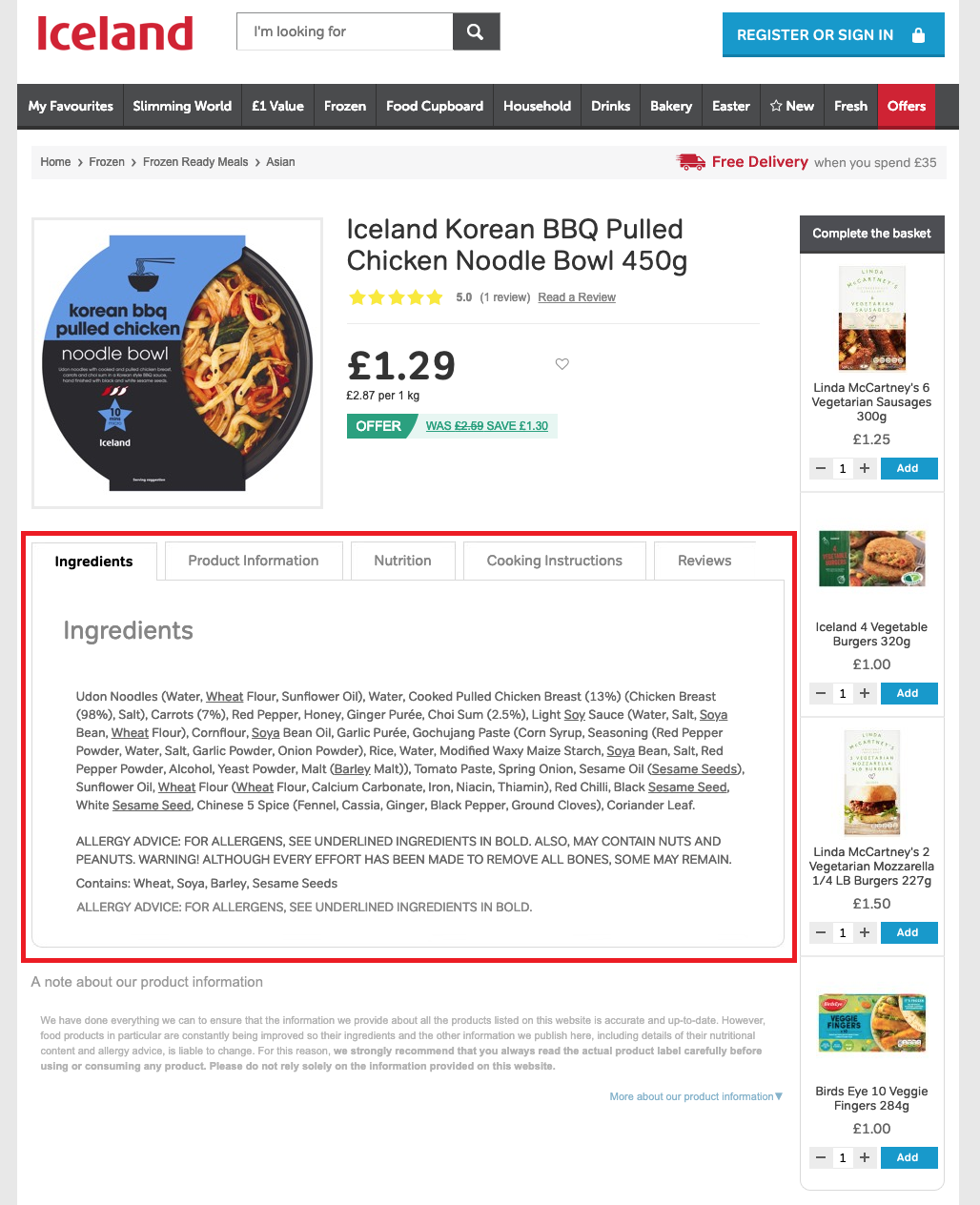

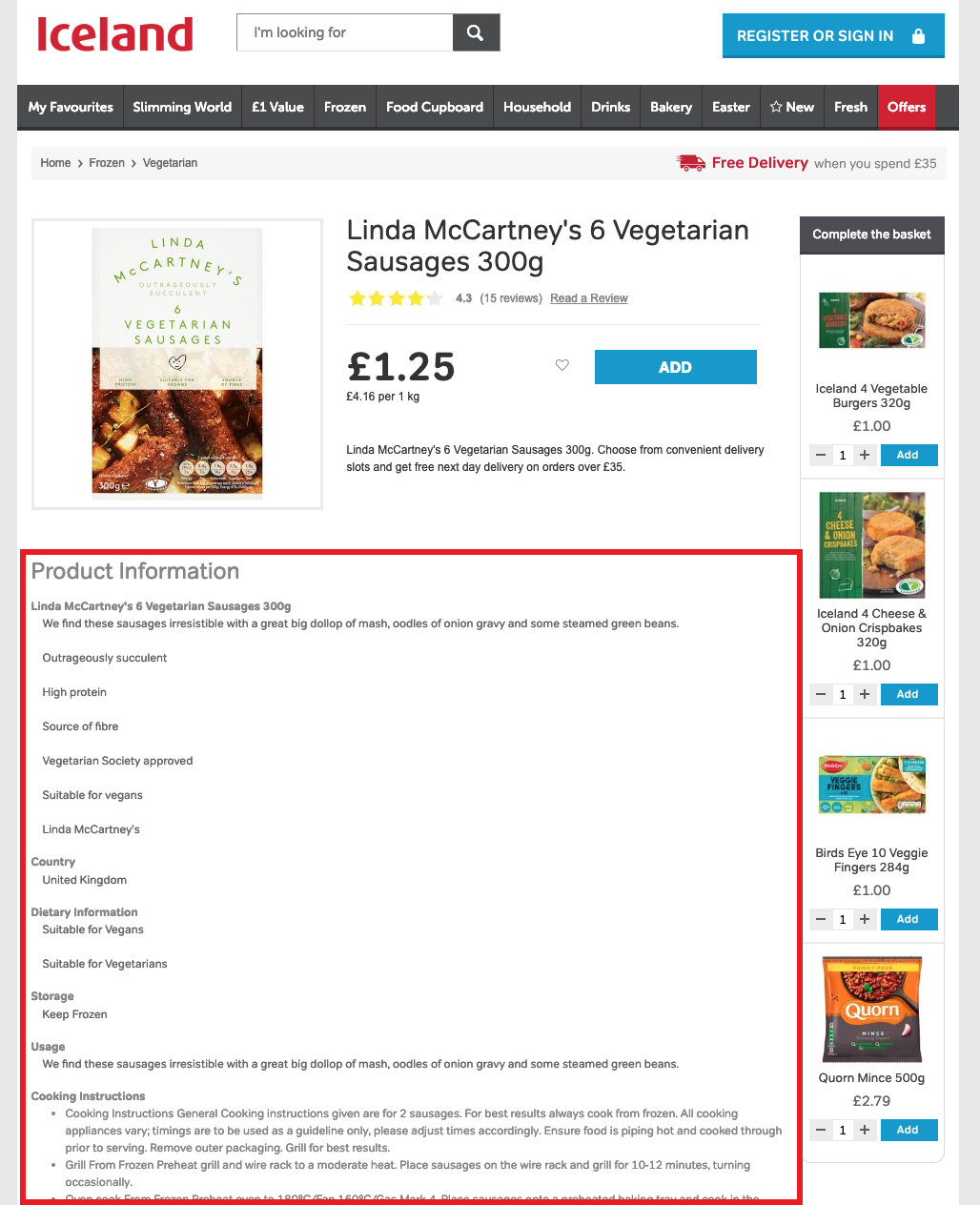

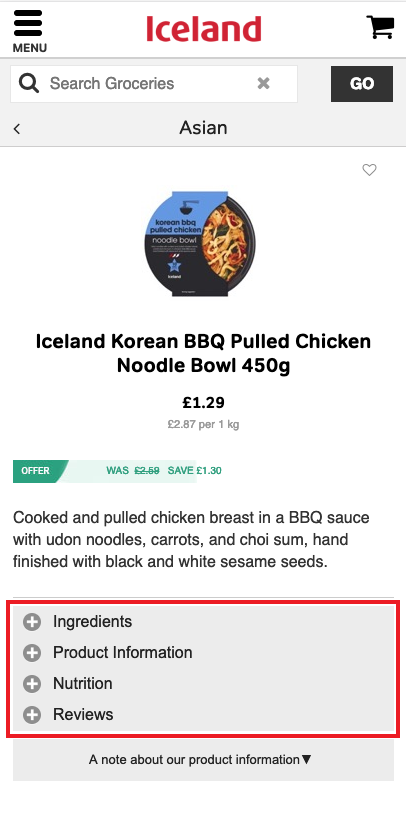

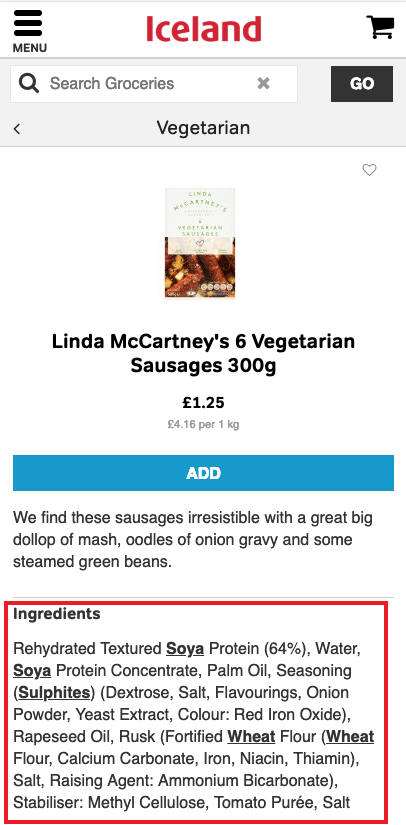

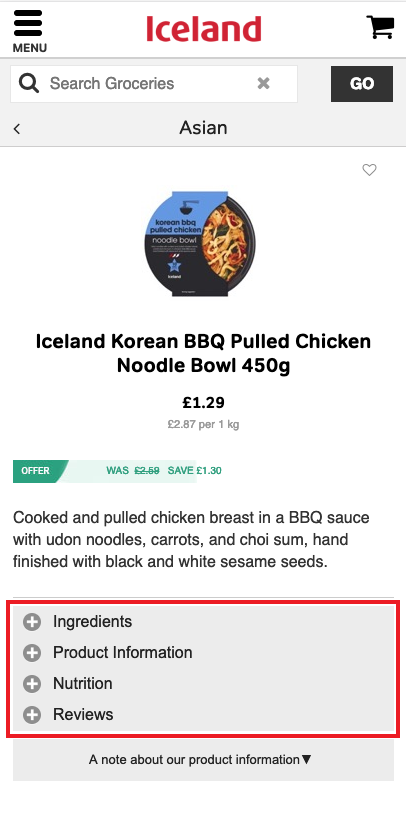

In this test, we removed the tabs and accordions that hid product information, such as ingredients and nutrition facts, when the page loaded. Instead, we made this text visible as soon as the page loaded. Here are our control and variant pages on desktop and mobile:

*Control vs. variant pages, desktop and mobile*

As it turns out, this test resulted in a 12% uplift in organic sessions. More evidence to counter Gary’s claim!

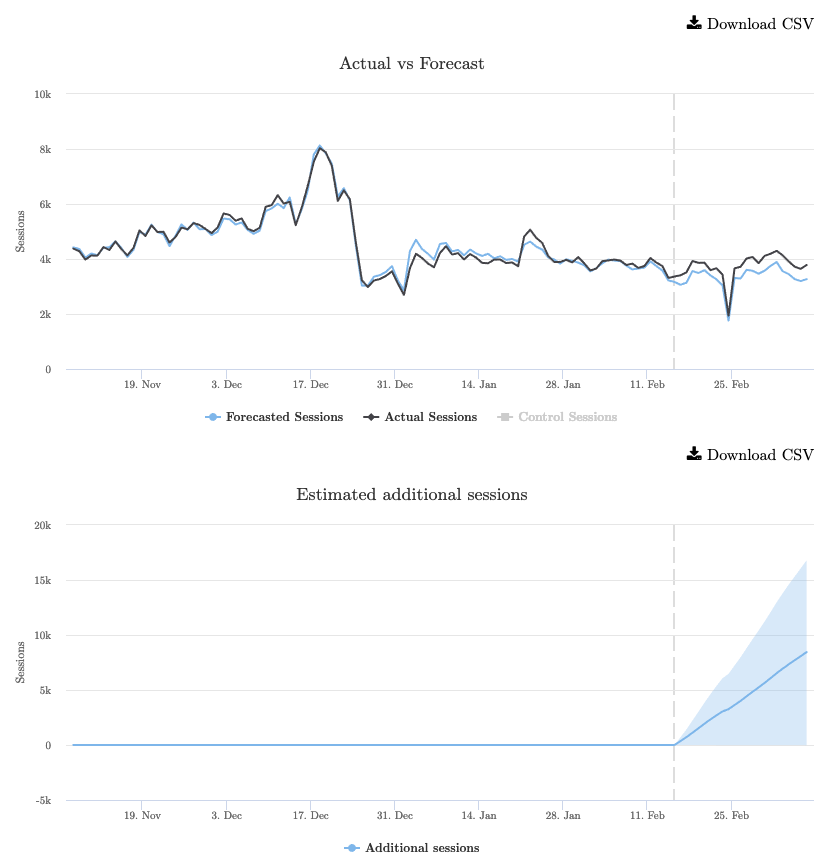

You can see the model and performance graph here:

This chart shows the cumulative impact of the test on organic traffic. The central blue line is our best estimate of how the variant pages performed with the change applied, compared with how we would have expected them to perform without it. The blue shaded region is our 95% confidence interval: there is a 95% probability that the actual outcome lies somewhere in this region. If this region sits wholly above or wholly below the horizontal axis, the test result is statistically significant.
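The reading rule above (significant when the whole shaded region is on one side of zero) can be illustrated with a deliberately simplified calculation. This is not SearchPilot's model, which this article doesn't describe. The sketch assumes we already have a daily forecast of variant-page sessions, plus a standard deviation for each day's forecast, and that daily forecast errors are independent and roughly normal. The names `cumulative_effect` and `CumulativeEffect` are hypothetical, and the numbers in the usage lines are made up.

```python
import math
from typing import NamedTuple, Sequence


class CumulativeEffect(NamedTuple):
    estimate: float    # cumulative sessions above (+) or below (-) the forecast
    lower: float       # lower bound of the 95% interval
    upper: float       # upper bound of the 95% interval
    uplift_pct: float  # estimate as a percentage of forecast sessions

    @property
    def significant(self) -> bool:
        # Significant when the whole interval lies above or below zero,
        # i.e. wholly above or below the chart's horizontal axis.
        return self.lower > 0 or self.upper < 0


def cumulative_effect(
    actual: Sequence[float],
    forecast: Sequence[float],
    forecast_sd: Sequence[float],
    z: float = 1.96,  # two-sided 95% quantile of the normal distribution
) -> CumulativeEffect:
    """Hypothetical sketch: cumulative actual-minus-forecast with a normal interval."""
    if not (len(actual) == len(forecast) == len(forecast_sd)):
        raise ValueError("inputs must have the same length")
    estimate = sum(a - f for a, f in zip(actual, forecast))
    # Assumes independent daily errors, so the variances simply add up.
    se = math.sqrt(sum(sd * sd for sd in forecast_sd))
    uplift = 100.0 * estimate / sum(forecast)
    return CumulativeEffect(estimate, estimate - z * se, estimate + z * se, uplift)


# Illustrative, made-up daily sessions (not data from this test).
result = cumulative_effect(
    actual=[112.0, 110.0, 115.0, 109.0],
    forecast=[100.0, 100.0, 100.0, 100.0],
    forecast_sd=[5.0, 5.0, 5.0, 5.0],
)
print(result.significant)  # True: the interval (about 26 to 66 extra sessions) is wholly above zero
```

Summing variances keeps the example short. Real daily traffic is autocorrelated, though, so a production model would need a more careful error structure than this.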

Interestingly, when we split the results between desktop and mobile, the positive effect was even more pronounced on mobile than on desktop. This also challenges Google’s assertion that having content in tabs on mobile should not be a problem. Granted, that assertion came with a caveat from John Mueller in 2016 that the content “is loaded when the page is loaded.”

Regardless, it seems that, for now, having your content visible on the page can be better for your organic traffic from an SEO perspective. No two websites are the same, which is why we’d recommend testing this on your own site. Stay tuned for more insights from SearchPilot.

Please feel free to get in touch via Twitter to learn more about this test. To sign up for a demo of the SearchPilot SEO A/B testing platform, fill in this form.

How our SEO split tests work

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in performance of the variant pages compared to the control, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome to a forecast, and comes with a confidence interval so we know how certain we are the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to clickthrough rate (more here).
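A deliberately simple model can show why comparing variant pages against control pages filters out seasonality and sitewide changes. This is not SearchPilot's forecasting method, which the article doesn't detail. It is a hypothetical ratio model, and it assumes that traffic to the two page groups moved in a stable proportion before the test. `ratio_forecast` and `relative_uplift` are illustrative names, and the numbers are made up.

```python
from typing import List, Sequence


def ratio_forecast(
    pre_control: Sequence[float],
    pre_variant: Sequence[float],
    test_control: Sequence[float],
) -> List[float]:
    """Hypothetical ratio model: forecast variant sessions from control sessions.

    Learn how large the variant group is relative to the control group before
    the test, then scale control traffic during the test by that ratio.
    Anything that moves both groups together (seasonality, sitewide changes,
    algorithm updates) moves the forecast too.
    """
    ratio = sum(pre_variant) / sum(pre_control)
    return [ratio * c for c in test_control]


def relative_uplift(actual_variant: Sequence[float], forecast: Sequence[float]) -> float:
    """Percentage difference between actual and forecast variant sessions."""
    return 100.0 * (sum(actual_variant) - sum(forecast)) / sum(forecast)


# Illustrative, made-up daily sessions (not data from this test).
forecast = ratio_forecast(
    pre_control=[200.0, 220.0, 210.0],
    pre_variant=[100.0, 110.0, 105.0],
    test_control=[180.0, 170.0, 190.0],  # a sitewide dip hits the control pages too
)
print(forecast)  # [90.0, 85.0, 95.0]
print(relative_uplift([99.0, 95.0, 103.0], forecast))  # 10.0
```

The forecast is driven by the control pages. A sitewide dip like the one in the example therefore lowers the forecast too, so the dip isn't mistaken for an effect of the change.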

Read more about how SEO testing works or get a demo of the SearchPilot platform.