Start here: how our SEO split tests work

If you aren't familiar with how we run the controlled SEO experiments that form the basis of all our case studies, you may find it useful to read the explanation at the end of this article before digesting the details of the case study below.

This week we asked our Twitter followers what they thought the result would be of adding ratings, reviews, and their associated structured data markup on two sets of local pages: some that were content-rich and some that were not.

Half of our followers thought this would be beneficial to both sets of pages. No one voted that it would benefit only the pages without pre-existing content.

In this case… only about 20% of our audience was correct. Both tests were inconclusive, with no detectable impact on organic traffic. How could that be? Read the full case study below.

The Case Study

Ratings and reviews of a business’s locations can be really valuable content for users. This kind of user-generated content may help users make more informed decisions about future visits or purchases. Additionally, when ratings and reviews are marked up with structured data, star ratings appearing in the search results can influence users’ decisions to click through.
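To make "marked up with structured data" concrete, here is a minimal sketch of how review markup could be generated as JSON-LD using the schema.org vocabulary. The function name, the input shape, the example values, and the choice of `LocalBusiness` as the type are assumptions for illustration; the markup used in the test itself is not shown in this case study.

```python
import json


def build_location_jsonld(name, url, reviews):
    """Return a JSON-LD <script> tag describing a location and its reviews.

    `reviews` is a hypothetical list of dicts with "author", "rating" and
    "body" keys. The schema.org type LocalBusiness is an assumption.
    """
    if not reviews:
        raise ValueError("at least one review is needed for an aggregate rating")

    ratings = [r["rating"] for r in reviews]
    data = {
        "@context": "https://schema.org",
        "@type": "LocalBusiness",
        "name": name,
        "url": url,
        # Average star rating and the number of reviews behind it.
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": round(sum(ratings) / len(ratings), 1),
            "reviewCount": len(ratings),
        },
        # The individual reviews that are also visible on the page.
        "review": [
            {
                "@type": "Review",
                "author": {"@type": "Person", "name": r["author"]},
                "reviewRating": {"@type": "Rating", "ratingValue": r["rating"]},
                "reviewBody": r["body"],
            }
            for r in reviews
        ],
    }
    # Escape "</" so review text cannot close the script tag early;
    # "\/" is a valid JSON escape for "/".
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">' + payload + "</script>"
```

Calling `build_location_jsonld("Example Store", "https://example.com/stores/1", reviews)` returns a tag that can be placed in the page HTML. Whether a search engine then shows star ratings for the page is its own decision.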

But when you have a lot of informative content on your pages already, how valuable is it to invest in developing a ratings and review feature?

One of our customers was going to invest the resources to implement ratings and reviews anyway, for reasons other than an organic traffic boost, but still wanted to test the SEO impact of this content and schema.

In particular, they wanted to learn whether adding review content to pages that had no other content about the location would have a bigger impact than adding reviews to pages that already had some content.

To find out, we tested this on two separate subsets of pages: one with pre-existing content about the location and one without.

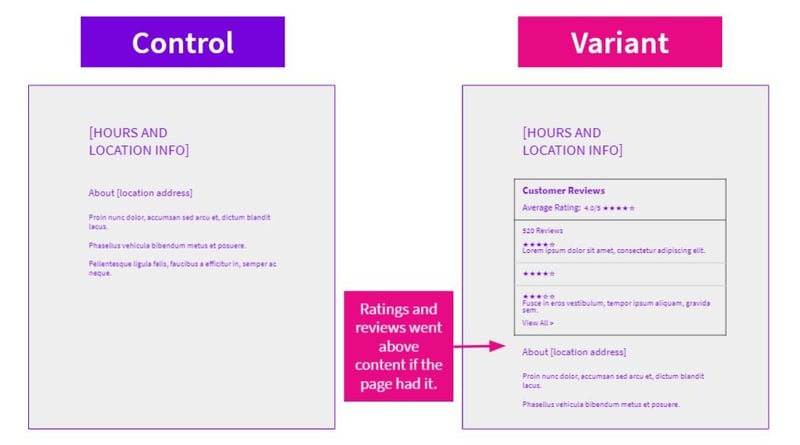

The new page component included well-populated reviews of each given location along with a snippet indicating the average star rating. On pages that had existing content, the reviews appeared above the content:

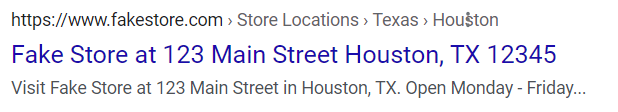

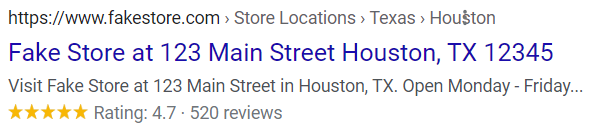

Many pages gained rich snippets in the search results:

[Figure: search result for a control page compared with a variant page]

The result was the same for pages that already had content and for pages without it: both tests had an inconclusive impact on organic traffic.

While we expected that having the star ratings in the search results would encourage more users to click through, it didn’t seem to have the anticipated effect. Perhaps users would have clicked through regardless of the store’s rating if they knew that was the store brand they were looking for anyway.

Based on the test result for pages with no existing content, it could be that Google doesn’t see user-generated content like this as any kind of substitute for other on-page content about the location. That makes it a good opportunity for more tests!

Adding useful content and structured data doesn’t always have as big or as immediate an impact on traffic as you might expect. However, we know how much search engine algorithms and the display of pages in the search results change over time. As Google incorporates more user-focused metrics into its algorithm and as snippets evolve, content like this may provide valuable gains in the long term or at the next big update.

You can read more about that reasoning and how we make decisions on inconclusive test results in our blog post “How to make sense of SEO A/B tests.”

If you want to learn more about this test or our split-testing platform more generally, please feel free to get in touch.

How our SEO split tests work

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in performance of the variant pages compared to the control, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome to a forecast, and comes with a confidence interval so we know how certain we are that the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to clickthrough rate (more here).
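The idea of comparing actual traffic to a forecast and reading the verdict from a confidence interval can be sketched in a few lines. This is a simplified illustration and not SearchPilot's actual model. The forecast here is a plain traffic ratio learned before the change, the interval is a percentile bootstrap, and the function name and parameters are hypothetical.

```python
import random
import statistics


def estimate_uplift(pre_control, pre_variant, post_control, post_variant,
                    n_boot=2000, seed=0):
    """Estimate the relative uplift of variant pages against a forecast.

    Each argument is a list of daily organic sessions. Returns the mean
    relative uplift, a 95% bootstrap interval, and a verdict string.
    """
    if len(pre_control) != len(pre_variant) or len(post_control) != len(post_variant):
        raise ValueError("control and variant series must cover the same days")

    # Before the change: variant traffic per unit of control traffic.
    ratio = sum(pre_variant) / sum(pre_control)

    # Forecast of variant traffic had nothing changed, driven by the control
    # group so that seasonality and sitewide effects cancel out.
    forecast = [ratio * c for c in post_control]

    # Daily relative difference between actual and forecast traffic.
    diffs = [(actual - f) / f for actual, f in zip(post_variant, forecast)]
    point = statistics.fmean(diffs)

    # Percentile bootstrap: resample days with replacement, recompute the mean.
    rng = random.Random(seed)
    boot = sorted(
        statistics.fmean(rng.choices(diffs, k=len(diffs))) for _ in range(n_boot)
    )
    low = boot[int(0.025 * n_boot)]
    high = boot[int(0.975 * n_boot) - 1]

    # The result is only conclusive when the whole interval is on one side of zero.
    if low > 0:
        verdict = "positive"
    elif high < 0:
        verdict = "negative"
    else:
        verdict = "inconclusive"
    return point, (low, high), verdict
```

In this sketch, a result is inconclusive when the interval includes zero: the data cannot rule out that the change did nothing.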

Read more about how SEO testing works or get a demo of the SearchPilot platform.