Start here: how our SEO split tests work

If you aren't familiar with how we run the controlled SEO experiments behind all our case studies, you may find it useful to read the explanation at the end of this article before digesting the details of the case study below. If you'd like to get a new case study by email every two weeks, just enter your email address here.

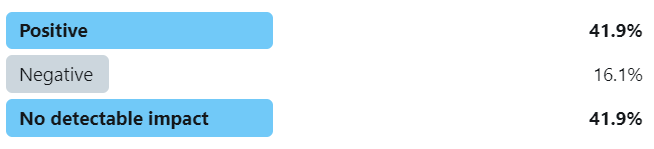

This week, we asked our Twitter followers what they thought happened to organic traffic when we moved a travel client’s flight search widget from the bottom of the hero image to the top.

This is what our followers thought:

55% believed that there was no detectable impact, followed by 35% who thought this change had a positive impact. In this test, though, moving the search widget from the bottom to the top of the hero image had a negative impact on organic traffic. I know, we were surprised too. Read the full case study below:

The Case Study

Before we released full funnel testing in SearchPilot, it was not possible to measure the impact of a change on organic traffic and conversions at the same time. Most conversion rate optimisation (CRO) experiments are single-page experiments that rely on JavaScript to make layout changes, which rules out testing the effect on Googlebot. For our SEO experiments, we work around this by splitting pages instead of users; while this enables testing for Googlebot, it doesn’t allow for measuring the impact on user behaviour.
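To make "splitting pages instead of users" concrete, here is a minimal sketch of page-level bucketing. It is an illustration only, not SearchPilot's actual assignment method: the function name `assign_bucket`, the salt string, and the example URL paths are all hypothetical. The point is that every visitor, including Googlebot, sees the same version of a given page.

```python
import hashlib


def assign_bucket(url_path: str, salt: str = "widget-position-test") -> str:
    """Deterministically assign a page (not a user) to control or variant.

    Hypothetical helper: hashes the URL path with a per-test salt so the
    same page always lands in the same bucket for every visitor and crawler.
    """
    digest = hashlib.sha256(f"{salt}:{url_path}".encode("utf-8")).hexdigest()
    return "variant" if int(digest, 16) % 2 else "control"


# Hypothetical flight landing pages from the same template.
pages = [
    "/flights/london-to-paris",
    "/flights/new-york-to-miami",
    "/flights/sydney-to-tokyo",
]
buckets = {page: assign_bucket(page) for page in pages}
```

A simple hash split like this ignores how much traffic each page receives; a real test would likely balance the two groups on historical traffic as well.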

Yet changes that impact CRO often impact SEO too and vice versa, meaning that we run the risk of rolling out a positive CRO test only to later find out it tanked our organic traffic. Similarly, we may have implemented a positive SEO test and unknowingly caused a drop in conversions. Over the years we’ve been A/B testing, we’ve seen both scenarios.

To illustrate the importance of full funnel testing, we wanted to share a case study from a client of ours in the travel sector.

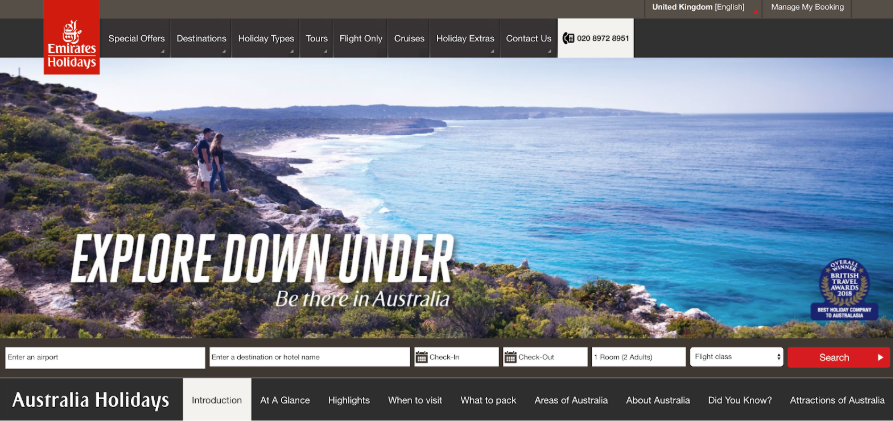

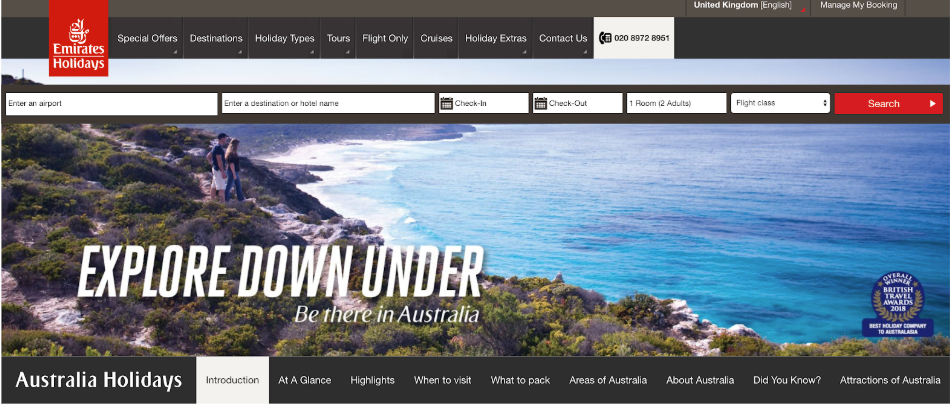

Our client had plans to make changes to their flight search widget, and wanted to confirm there was not going to be a negative impact on SEO before going ahead with the new widget.

To do this, we implemented the planned change with SearchPilot as an SEO split test before our client put it live. The test simply moved the widget up the page. We did not expect it to have much of an impact on organic traffic, but we decided we’d rather be safe than sorry.

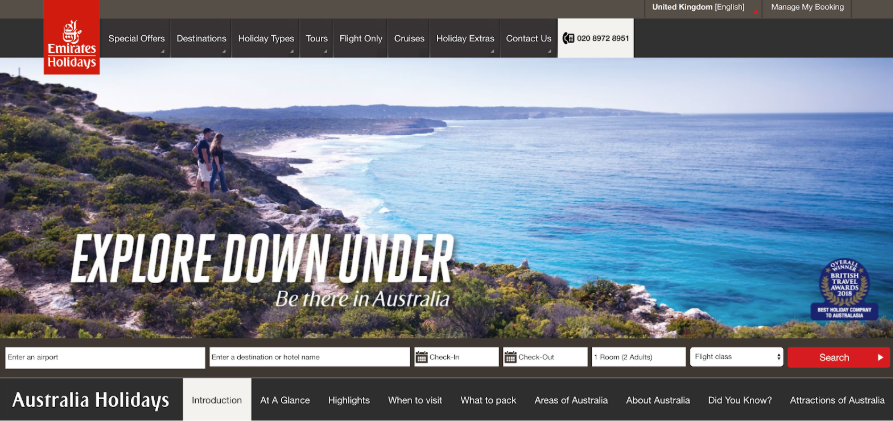

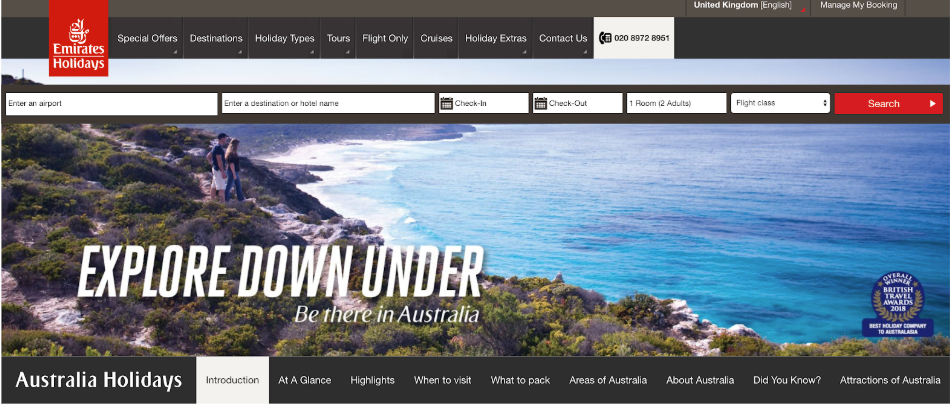

Here is what the change looked like:

| Control | Variant |
|---|---|
| (screenshot) | (screenshot) |

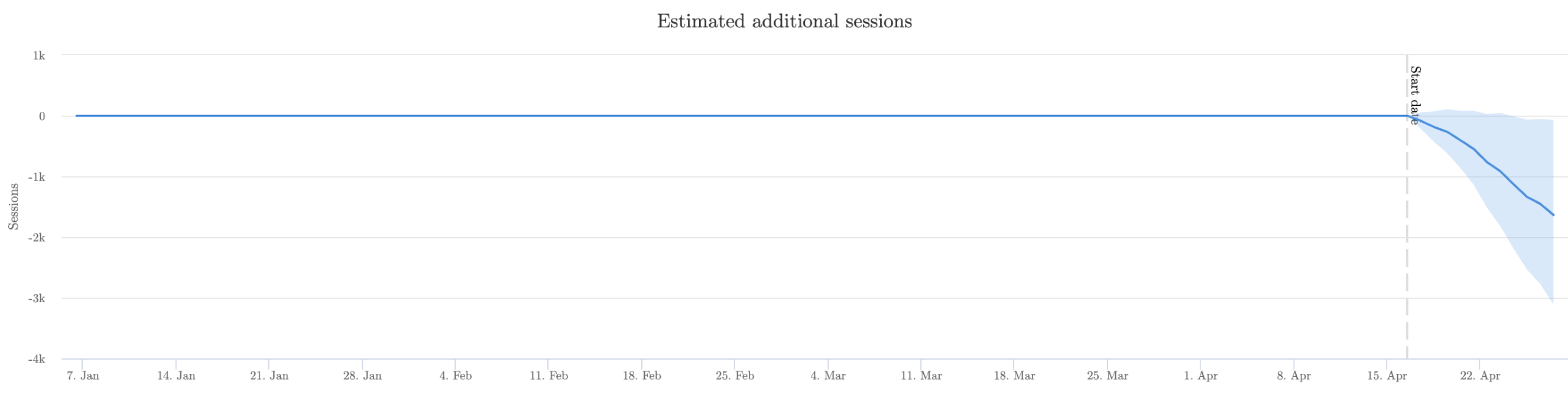

This test result surprised all of us. Not only did the change impact organic traffic, it caused a 7% drop in organic sessions, which would have translated to a whopping estimated loss of 10,000 organic sessions a month! Talk about a justification for full-funnel testing.
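As a quick sanity check on those two figures, the arithmetic can be sketched as follows. The baseline traffic is not stated in this article; the value below is only what the 7% and 10,000 figures imply if the loss is computed as baseline sessions multiplied by the relative drop, which is an assumption on our part.

```python
relative_drop = 0.07    # 7% drop in organic sessions, from the test result
monthly_loss = 10_000   # estimated monthly loss quoted above

# Implied baseline, assuming loss = baseline * relative drop.
implied_baseline = monthly_loss / relative_drop
print(round(implied_baseline))  # 142857


def estimated_monthly_loss(baseline_sessions: float, drop: float) -> float:
    """Estimated sessions lost per month for a given baseline and relative drop."""
    return baseline_sessions * drop
```

In other words, the quoted numbers are consistent with pages that receive roughly 143,000 organic sessions a month.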

Here’s what the result graph looked like:

This test was not a true full funnel test, as we were not able to implement the tracking on our client’s website needed to run it as one. Even so, it reaffirmed our belief in the importance of running tests as full funnel tests, and that was before Google had officially announced that page experience would be a ranking factor.

We’ve now seen a number of tests where changes that would traditionally be seen as the domain of UX/product teams, rather than SEO teams, influenced both organic traffic and conversions.

This trend and the page experience announcement, in addition to the unpredictability of users, are good reasons to begin working more closely with your product teams if you haven’t been already. As Google increasingly informs its algorithm with user signals, full funnel testing will only become more important.

Who would have thought moving a search widget up the page could have such a dramatic impact on organic traffic?

How our SEO split tests work

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in performance of the variant pages compared to the control, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome to a forecast, and comes with a confidence interval so we know how certain we are that the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to clickthrough rate (more here).
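The points above can be illustrated with a deliberately simplified sketch. This is not SearchPilot's actual statistical model: it assumes the variant pages would have kept the same traffic ratio to the control pages that they had before the test, uses that as the forecast, and estimates a confidence interval with a basic day-level bootstrap. All function names and the sample numbers are hypothetical.

```python
import random


def relative_effect(control_pre, variant_pre, control_test, variant_test):
    """Relative difference between actual variant sessions and a forecast.

    The forecast scales control traffic in the test period by the
    variant/control ratio observed before the test started.
    """
    ratio = sum(variant_pre) / sum(control_pre)
    forecast_total = sum(c * ratio for c in control_test)
    return (sum(variant_test) - forecast_total) / forecast_total


def bootstrap_interval(control_pre, variant_pre, control_test, variant_test,
                       n_resamples=2000, level=0.95, seed=0):
    """Percentile bootstrap interval for the relative effect, resampling days."""
    rng = random.Random(seed)
    pre_days = list(zip(control_pre, variant_pre))
    test_days = list(zip(control_test, variant_test))
    effects = []
    for _ in range(n_resamples):
        pre = rng.choices(pre_days, k=len(pre_days))
        test = rng.choices(test_days, k=len(test_days))
        effects.append(relative_effect(
            [c for c, v in pre], [v for c, v in pre],
            [c for c, v in test], [v for c, v in test],
        ))
    effects.sort()
    lower = effects[int((1 - level) / 2 * n_resamples)]
    upper = effects[int((1 + level) / 2 * n_resamples) - 1]
    return lower, upper


# Made-up daily organic sessions, for illustration only.
control_pre = [1000, 1020, 980, 1010, 990, 1005, 995]
variant_pre = [1010, 1015, 990, 1000, 1000, 1010, 985]
control_test = [1005, 990, 1015, 1000, 985, 1010, 995]
variant_test = [940, 925, 950, 930, 915, 945, 920]

effect = relative_effect(control_pre, variant_pre, control_test, variant_test)
low, high = bootstrap_interval(control_pre, variant_pre, control_test, variant_test)
print(f"effect: {effect:.1%}, 95% interval: [{low:.1%}, {high:.1%}]")
```

Because the forecast is built from the control pages, anything that affects both groups equally (seasonality, algorithm updates, sitewide changes) cancels out. If the whole interval sits below zero, the negative effect is unlikely to be noise.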

Read more about how SEO testing works or get a demo of the SearchPilot platform.