Start here: how our SEO split tests work

If you aren't familiar with the fundamentals of how we run controlled SEO experiments that form the basis of all our case studies, then you might find it useful to start by reading the explanation at the end of this article before digesting the details of the case study below.

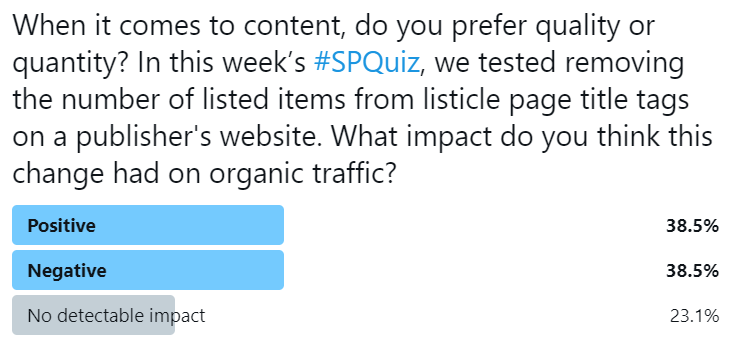

This week’s #SPQuiz on Twitter asked our followers what would happen to organic traffic if we removed the leading number from the title tags on a media brand’s article pages.

Our audience was split evenly on whether this test would have a positive or negative impact on organic traffic. There was no real consensus, and only about a third of respondents were correct: this test had a negative impact on organic traffic! Read below for the full case study:

We’ve previously run title tag tests to better understand the trade-offs between including relevant keywords in titles and including attention-grabbing words or numbers; one recent example was adding the year to title tags. Striking a balance between the two is particularly interesting in the media space, where there are a lot of competitors and a lot of copycats.

In this industry, quality content does a lot of the work as far as rankings are concerned, but once that challenge is met, the next obstacle is getting users to click through amid a sea of similarly targeted content.

You’ve almost certainly googled something like “best plants for home office” and seen a list of search results with a plethora of articles luring you to click through to find out the “X best plant species that you won’t kill at home.”

It often feels as though informational queries like this are expected to return a slew of articles, all displaying numbers in their titles to signal how much content they contain. Slight differences between these titles in the SERP may make or break a user’s decision to click through.

This made us wonder: what if including the number of listed items in the title tags of our article pages is harming our organic traffic? What if the specific number displayed for each article isn’t actually attractive and isn’t helping us stand out from our competitors in the SERP? What if having no number could be a differentiator that improves CTR?

We tested exactly this by removing the number of listed items from the title tags of an editorial site’s “listicle” article pages.
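For illustration only, here is a minimal Python sketch of the kind of text transformation the variant applied. The function name `remove_leading_number` and its regular expression are hypothetical, not the site's or SearchPilot's actual implementation. The sketch assumes the list count is a run of one to three digits at the very start of the title, followed by whitespace, so that a leading year such as "2024" is left alone.

```python
import re

# Hypothetical pattern: 1-3 digits at the very start of the title, then
# whitespace (e.g. "10 Best ..."). A four-digit year such as "2024 Guide"
# does not match, because the digits must be followed by whitespace.
LEADING_NUMBER = re.compile(r"^\s*\d{1,3}\s+")


def remove_leading_number(title: str) -> str:
    """Return the title with any leading list count stripped."""
    stripped = LEADING_NUMBER.sub("", title, count=1)
    # Capitalise the new first character, since it may have been
    # lower-case text such as "ways" in "7 ways to ...".
    return stripped[:1].upper() + stripped[1:]


if __name__ == "__main__":
    for t in ["10 Best Plants for a Home Office",
              "7 ways to keep houseplants alive",
              "Best Plants for a Home Office"]:
        print(remove_leading_number(t))
```

Capping the match at three digits is a design choice to avoid stripping years; a production setup might instead change the title template itself rather than post-process the generated text.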

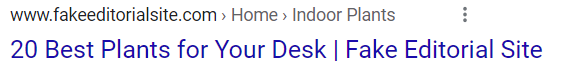

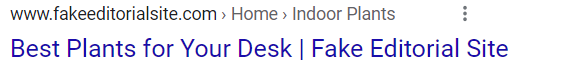

| Control | Variant |

|---|---|

|

|

| Control |

|---|

|

| Variant |

|

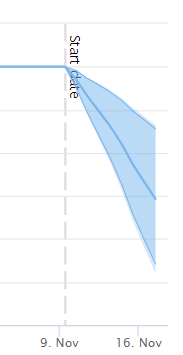

This was the impact on organic sessions:

This test ended in a conclusive negative result, with an estimated decrease in organic traffic of about 16%.

What’s the takeaway? Testing user-focused title changes can have big impacts: we’ve seen both positive and negative results in similar tests, and the results often surprise us. Because a title change can have a large effect, testing one can also mean taking a big risk, but compared with internal linking or content changes, title changes are among the most easily reversed. This means we can take risks that might result in a sizeable payoff or, as in this case, teach us that going with the grain of the competition was important for not losing existing traffic.

How our SEO split tests work

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in the performance of the variant pages relative to the control pages, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome to a forecast and comes with a confidence interval, so we know how certain we are that the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to clickthrough rate.
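To make "comparing the actual outcome to a forecast" concrete, here is a deliberately simplified Python sketch. It is not SearchPilot's model. It assumes the forecast is simply the variant pages' pre-test share of control-page traffic projected forward, and that a percentile bootstrap over days is an acceptable way to get a confidence interval. The function names `relative_effect` and `bootstrap_ci` and all of the data are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def relative_effect(variant_pre: Sequence[float], control_pre: Sequence[float],
                    variant_test: Sequence[float],
                    control_test: Sequence[float]) -> float:
    """Relative change of actual variant traffic versus a control-based forecast."""
    # Variant traffic per unit of control traffic before the change went live.
    ratio = sum(variant_pre) / sum(control_pre)
    # Forecast: the variant traffic we would expect had the variant pages kept
    # tracking the control pages. Anything that moves both groups alike
    # (seasonality, an algorithm update) is already reflected in the controls.
    forecast = ratio * sum(control_test)
    return sum(variant_test) / forecast - 1.0


def bootstrap_ci(variant_pre: Sequence[float], control_pre: Sequence[float],
                 variant_test: Sequence[float], control_test: Sequence[float],
                 n_boot: int = 2000, alpha: float = 0.05,
                 seed: int = 0) -> Tuple[float, float]:
    """Percentile bootstrap interval for relative_effect, resampling days."""
    rng = random.Random(seed)
    n_pre, n_test = len(variant_pre), len(variant_test)
    estimates: List[float] = []
    for _ in range(n_boot):
        # Resample whole days so each day's variant/control pair stays together.
        pre = rng.choices(range(n_pre), k=n_pre)
        test = rng.choices(range(n_test), k=n_test)
        estimates.append(relative_effect(
            [variant_pre[i] for i in pre], [control_pre[i] for i in pre],
            [variant_test[i] for i in test], [control_test[i] for i in test]))
    estimates.sort()
    lo = estimates[round(alpha / 2 * n_boot)]
    hi = estimates[round((1 - alpha / 2) * n_boot) - 1]
    return lo, hi


if __name__ == "__main__":
    # Entirely synthetic daily sessions: 28 pre-test days and 14 test days.
    control_pre = [100 + i % 5 for i in range(28)]
    variant_pre = [c + (1 if i % 2 else -1) for i, c in enumerate(control_pre)]
    control_test = [100 + i % 5 for i in range(14)]
    variant_test = [0.84 * c + (1 if i % 2 else -1) for i, c in enumerate(control_test)]
    effect = relative_effect(variant_pre, control_pre, variant_test, control_test)
    lo, hi = bootstrap_ci(variant_pre, control_pre, variant_test, control_test)
    print(f"effect {effect:+.1%}, 95% CI [{lo:+.1%}, {hi:+.1%}]")
```

The synthetic data builds in a 16% drop only to mirror the scale of this case study's result; it is not the test's data. Because the forecast is driven by the control pages, external changes that hit both groups equally cancel out of the ratio, which is the intuition behind the first point above.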

Read more about how SEO testing works or get a demo of the SearchPilot platform.