Start here: how our SEO split tests work

If you aren't familiar with the fundamentals of how we run controlled SEO experiments, which form the basis of all our case studies, you might find it useful to read the explanation at the end of this article before digesting the details of the case study below.

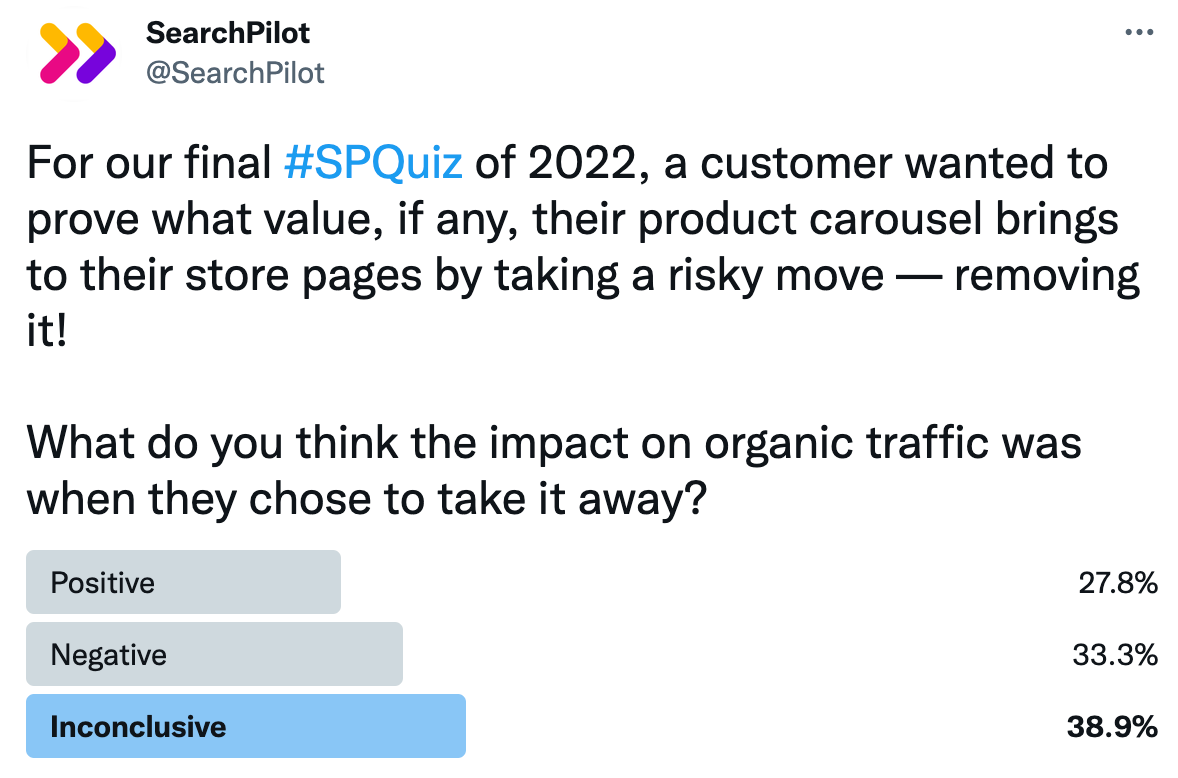

In this week’s #SPQuiz, we asked how removing the product carousel from a customer’s store pages affected their SEO performance. This change would remove the element completely, which could have either a positive or a negative impact on the pages’ organic traffic.

How did this impact organic traffic?

The votes were fairly evenly split, with "inconclusive" taking a narrow lead. In this case, though, the minority was correct: this change had a positive impact on organic traffic!

To learn more, read the full case study below.

The Case Study

One of our ecommerce customers was in the process of redesigning its category pages and wanted to test whether the carousel on those pages was harming its SEO rankings.

While carousels are a visually appealing way to showcase numerous products, they can also harm a website’s performance. In particular, carousels can slow down a page’s loading time: they often consist of multiple large images that take longer to load than other elements on the page. This leads to a poor user experience, since users frustrated by a slow-loading page may leave before engaging with the content.

Our customer had also recently run a similar test on another page type. In that test, removing the carousel had a positive impact, which they attributed to the carousel showing irrelevant content. To find out whether the carousel on the category pages was having a similar negative impact, they ran a test on those pages in which we removed the carousel element. Since the hypothesis was that the carousel was harming organic traffic, they expected removing it to improve organic traffic.

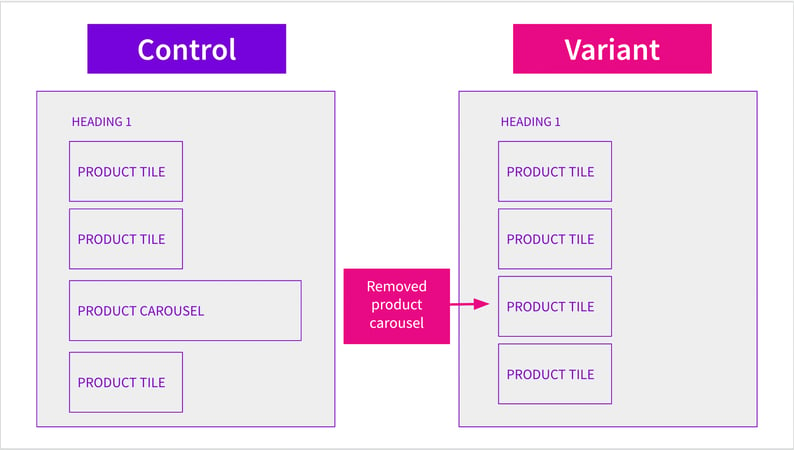

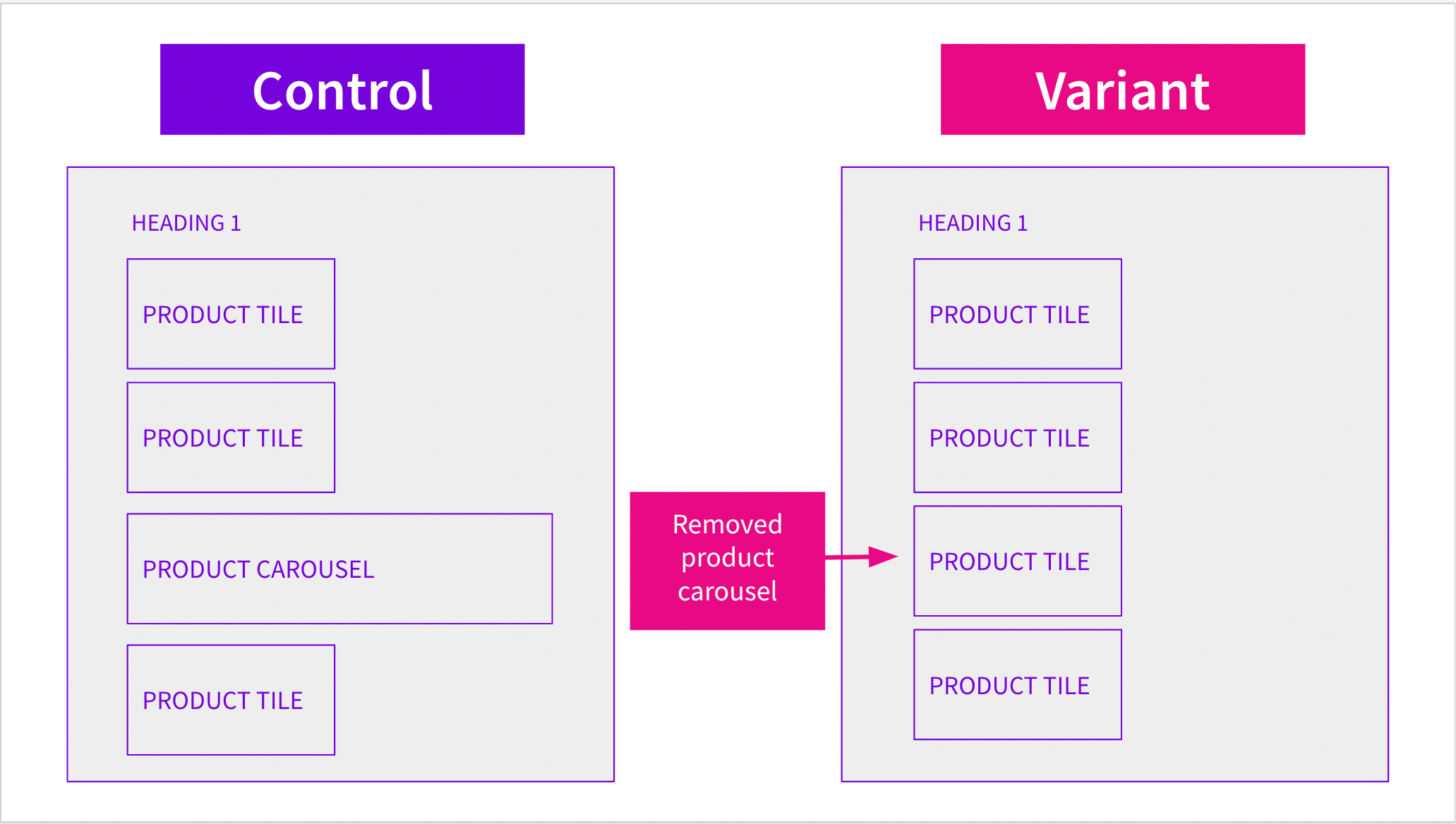

The products in the carousel already appeared elsewhere on the page, and the carousel itself was nestled between two other blocks of content, which gave another reason to believe it might not be adding value. Here’s a mockup of the change:

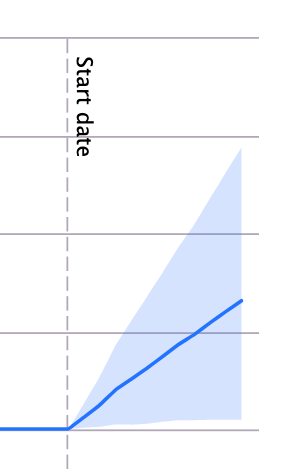

This was the result:

This result was striking: the website’s organic traffic increased by an additional 4,000 sessions on the first day after the carousel was removed! The increase continued throughout the 11-day testing period, reaching a total uplift of 29% by the end of the experiment.

The customer believed the increase in organic traffic could be attributed to a couple of factors. First, removing the carousel improved the website’s loading time, leading to a better user experience. This may have helped their search rankings and made it more likely that visitors would stay on the page, possibly sending positive user signals to Google.

Second, removing the carousel may have enhanced the page’s freshness signals to Google. The carousels were not updated as often as other elements on the site, so Google could have treated them as stale content when crawling and indexing the page. Without the carousel taking up valuable space, the products and content that users searched for were more prominently displayed and easier to access. This may have made visitors more likely to stay on the page and make a purchase, possibly contributing to the increase in organic traffic.

This test is an excellent example of why UX and SEO need to work together. Although the carousel was appealing to users, it negatively impacted the page’s SEO performance and was perhaps not the most effective way to display content. Sometimes less is more, and we recommend weighing the UX and SEO pros and cons before adding a new element to your website.

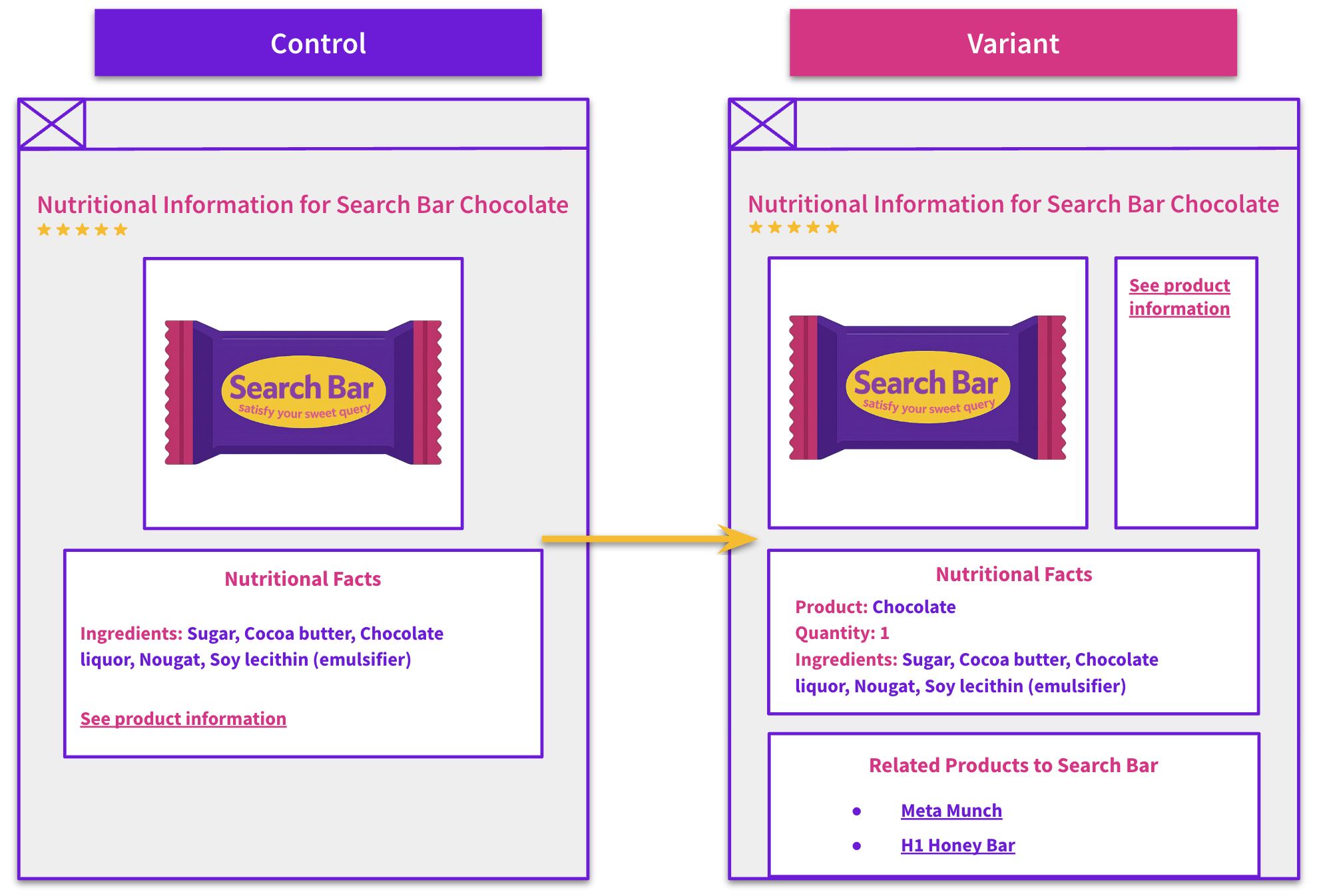

How our SEO split tests work

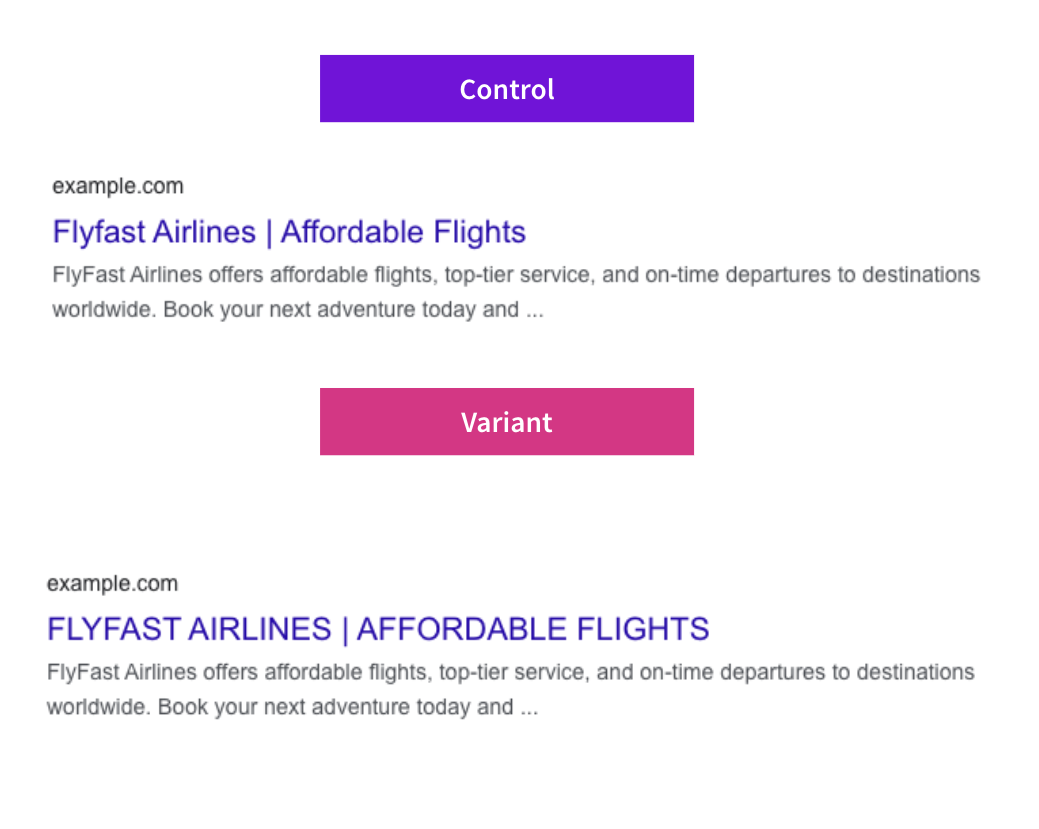

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in performance of the variant pages compared to the control, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome to a forecast and comes with a confidence interval, so we know how confident we can be that the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to clickthrough rate.
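To make the idea in the bullets above more concrete, here is a minimal Python sketch of comparing variant pages against a forecast built from control pages, with a confidence interval on the difference. It rests on deliberately simple assumptions: the "forecast" is just the pre-test ratio of variant to control sessions applied to control sessions during the test, and the interval comes from a bootstrap over days. SearchPilot's actual model is not described in this article; the function names (`fit_ratio`, `uplift_pct`, `bootstrap_ci`) and all session counts below are hypothetical.

```python
import random


def fit_ratio(control_pre, variant_pre):
    """Hypothetical helper: size of the variant group relative to the control
    group, estimated from pre-test daily sessions (a stand-in for a real model)."""
    return sum(variant_pre) / sum(control_pre)


def uplift_pct(control_test, variant_test, ratio):
    """Percentage difference between actual variant sessions and the
    control-based forecast over the test period."""
    forecast = ratio * sum(control_test)
    return (sum(variant_test) - forecast) / forecast * 100


def bootstrap_ci(control_pre, variant_pre, control_test, variant_test,
                 n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for the uplift, resampling whole days so
    each control/variant pair for a given day stays together."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_resamples):
        pre = [rng.randrange(len(control_pre)) for _ in control_pre]
        test = [rng.randrange(len(control_test)) for _ in control_test]
        ratio = fit_ratio([control_pre[i] for i in pre],
                          [variant_pre[i] for i in pre])
        estimates.append(uplift_pct([control_test[i] for i in test],
                                    [variant_test[i] for i in test], ratio))
    estimates.sort()
    low = estimates[int(alpha / 2 * n_resamples)]
    high = estimates[int((1 - alpha / 2) * n_resamples) - 1]
    return low, high


# Hypothetical daily organic sessions (illustration only, not real test data).
control_pre = [1000, 1040, 980, 1010, 990, 1020, 960]
variant_pre = [1000, 1030, 990, 1000, 1000, 1010, 970]
control_test = [1000, 1020, 990, 1010, 980]
variant_test = [1200, 1230, 1180, 1210, 1180]

ratio = fit_ratio(control_pre, variant_pre)
point = uplift_pct(control_test, variant_test, ratio)
ci_low, ci_high = bootstrap_ci(control_pre, variant_pre, control_test, variant_test)
print(f"Estimated uplift: {point:.1f}%")  # prints "Estimated uplift: 20.0%"
print(f"95% interval: {ci_low:.1f}% to {ci_high:.1f}%")
```

Because the forecast is derived from the control pages, anything that moves both groups together (seasonality, sitewide changes, algorithm updates) cancels out of the comparison. A production model would use a richer forecast than a single ratio, but the shape of the reasoning is the same.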

Read more about how SEO A/B testing works or get a demo of the SearchPilot platform.