Start here: how our SEO split tests work

If you aren't familiar with the fundamentals of how we run controlled SEO experiments that form the basis of all our case studies, then you might find it useful to start by reading the explanation at the end of this article before digesting the details of the case study below.

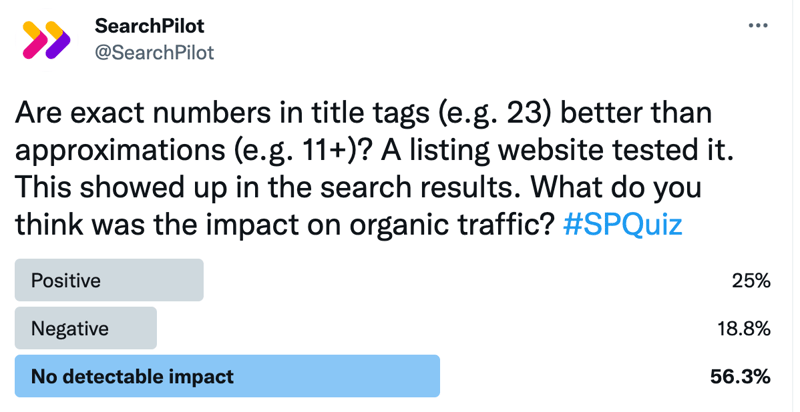

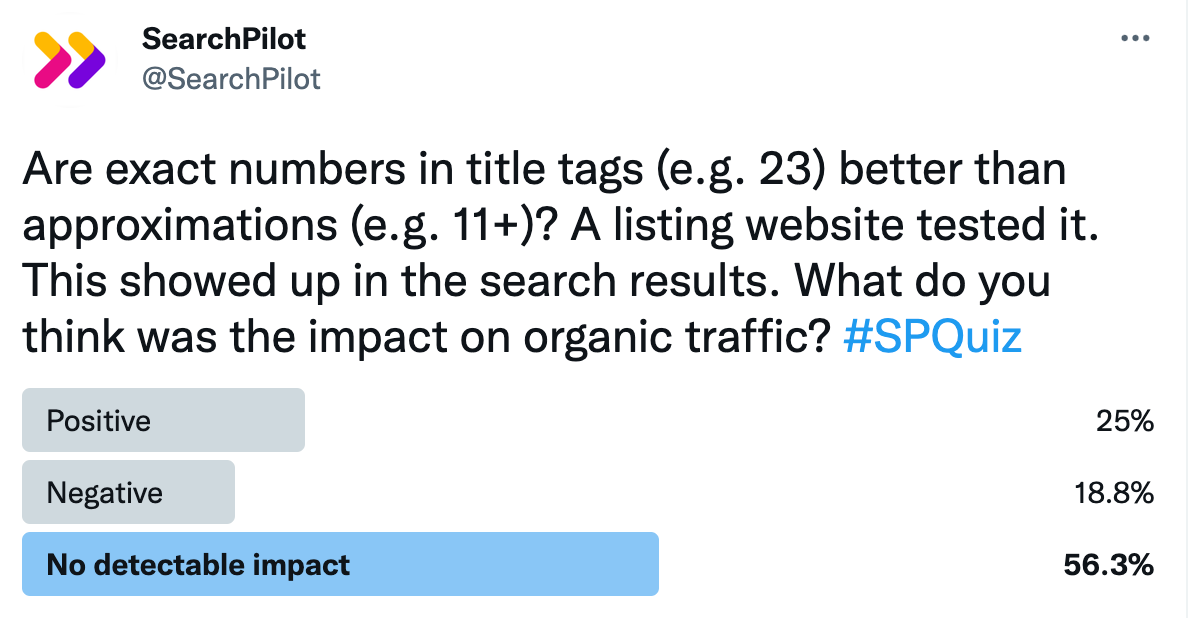

For this week’s #SPQuiz, we looked at whether using approximate result counts in the title tags of a listings website would impact SEO performance. We asked our Twitter followers what they expected the result to be. Here’s what the SEO community predicted for this variant title tag:

Most poll respondents expected this test to show ‘no detectable impact.’ Read on to see whether we can trust the instincts of our fellow SEOs.

The Case Study

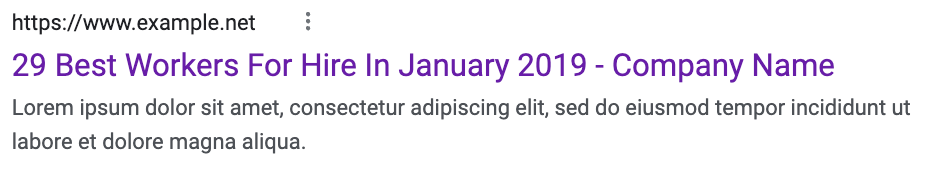

This week’s case study features a customer in the listings industry. The test was one of a series that tried a variety of title tag variations to find the page title template that performed best in the search results.

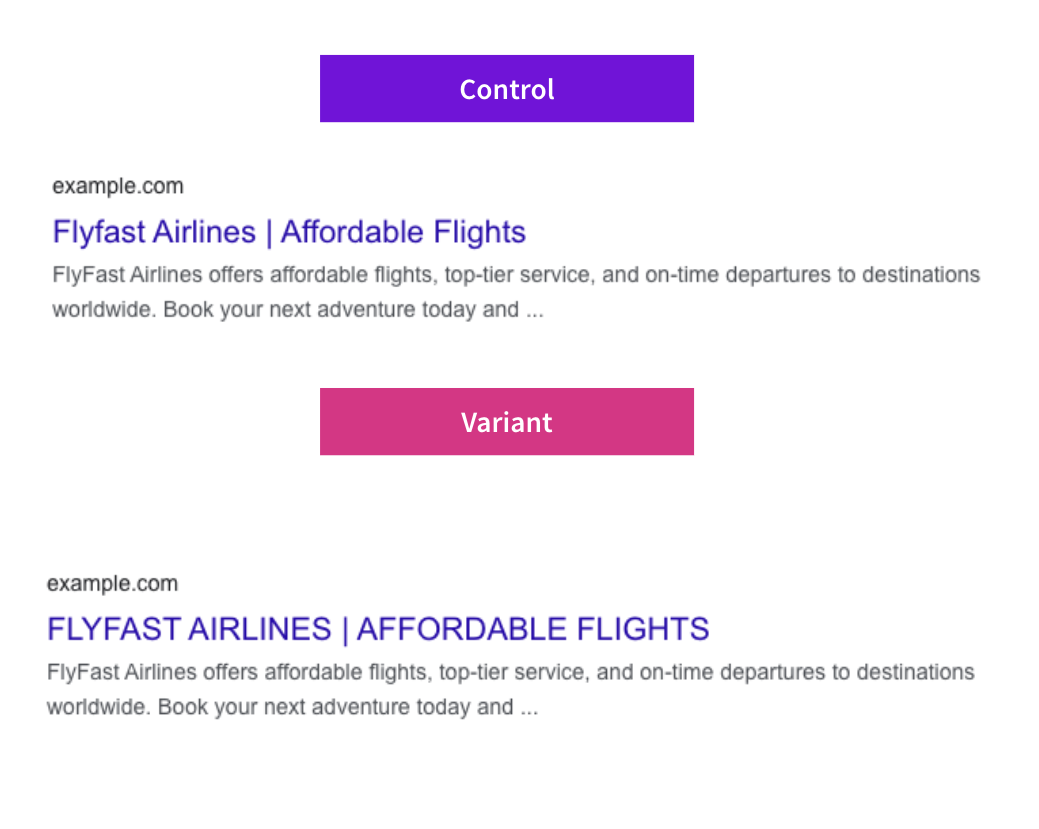

The existing title tag for their listings pages showed how many listings were on the page. For example, if a page had 29 worker postings, the title tag looked like this:

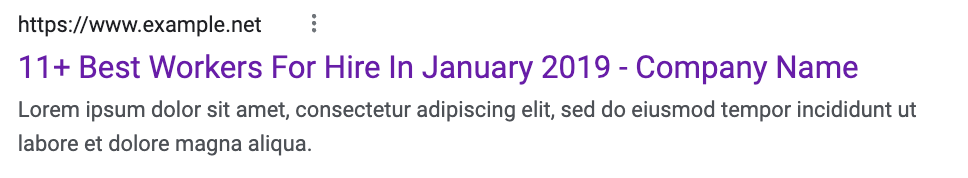

They hypothesized that, because Google was often slow to recrawl these pages, the count shown in the search results would frequently not match what users saw when they landed on the page. This mismatch might have been hurting user signals, so they thought a less specific number could work better.

There was also a chance that changing the count to ‘11+’ would improve clickthrough rate, because the plus sign might stand out in the search results and spark more curiosity than a fixed number.

In the variant, the title tag appeared in the search results like this:

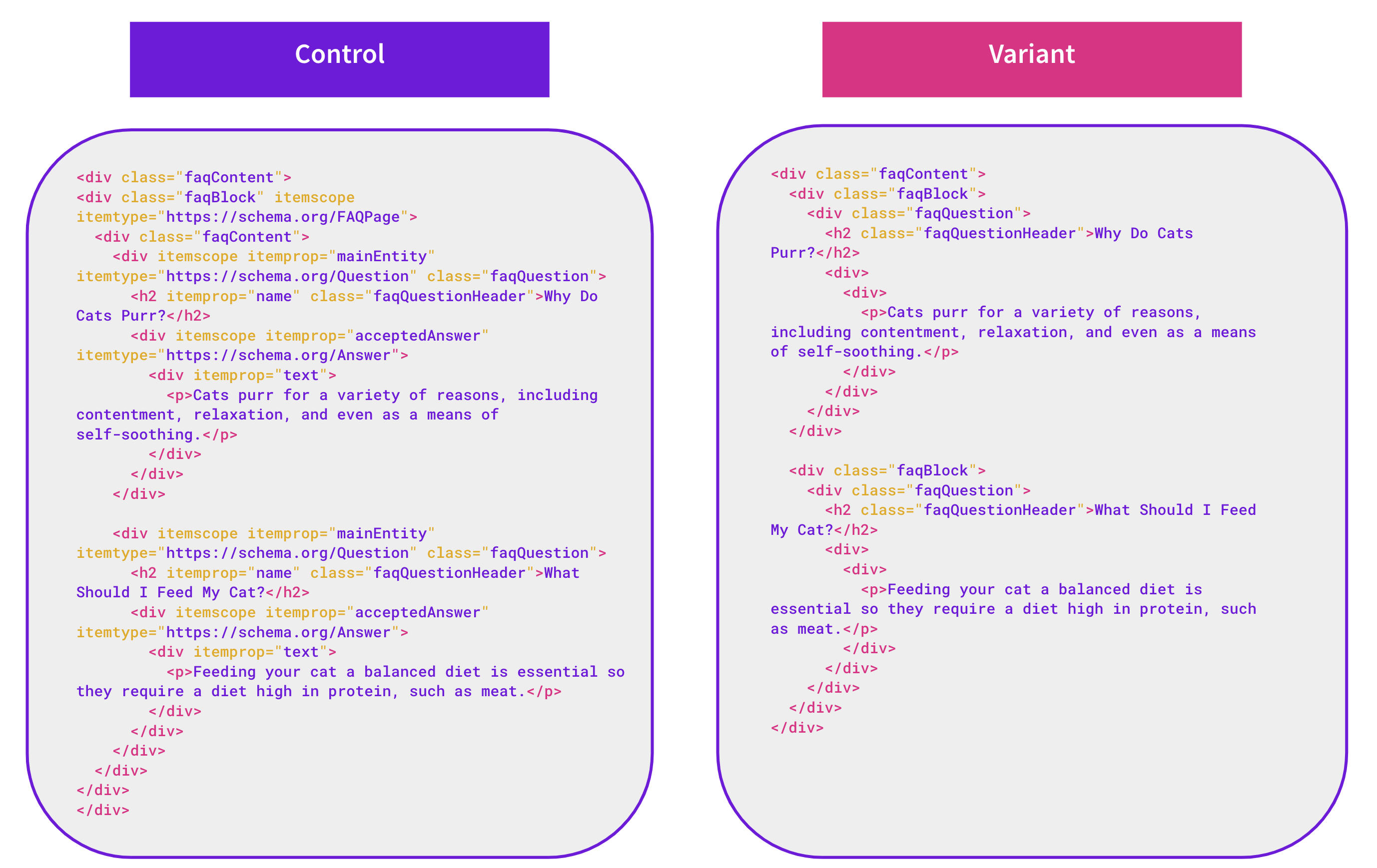
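To make the idea concrete, here is a minimal sketch of how a title template might swap an exact count for an approximate one. It assumes a single hypothetical rule (show the exact number below 11, otherwise show "11+") chosen only to match the ‘11+’ variant; the customer's real rounding rule and title wording appear only in the screenshots, so `approximate_count`, `build_title`, and the "Dog Walkers in Austin" wording are all hypothetical.

```python
def approximate_count(n: int, floor: int = 11) -> str:
    """Return the exact count below `floor`, otherwise "<floor>+".

    Hypothetical rule: the floor of 11 is an assumption matching the "11+"
    variant, not the customer's documented logic.
    """
    if n < 0:
        raise ValueError("listing count cannot be negative")
    return str(n) if n < floor else f"{floor}+"


def build_title(count: int, category: str, location: str, approximate: bool) -> str:
    # Hypothetical title template: "<count> <category> in <location>".
    shown = approximate_count(count) if approximate else str(count)
    return f"{shown} {category} in {location}"


if __name__ == "__main__":
    print(build_title(29, "Dog Walkers", "Austin", approximate=False))  # control
    print(build_title(29, "Dog Walkers", "Austin", approximate=True))   # variant
```

The design point behind the hypothesis is that "11+" stays true for as long as the page has at least 11 listings, so a title cached before Google's next recrawl is less likely to contradict the page than an exact count.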

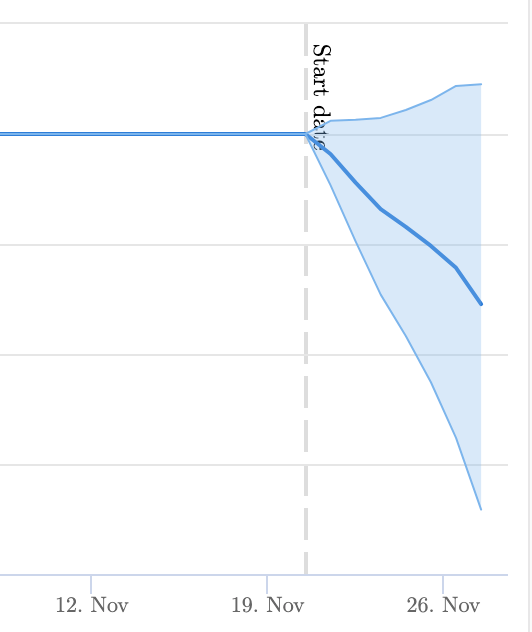

This was the final result:

After seven days of testing, there was no detectable impact at the 95% confidence level. Google picked up the change, and the variant title tag did display in the search results. However, the result was negative at a lower level of confidence, so we ended the test to avoid losing more organic traffic. Although the effect was not statistically significant at 95%, it was likely negative, which suggests that search users preferred seeing the specific number of results.

Although this variant did not win, many of our customers are able to test title tag variations quickly and eventually find a winning title tag template for different sections of their website.

How our SEO split tests work

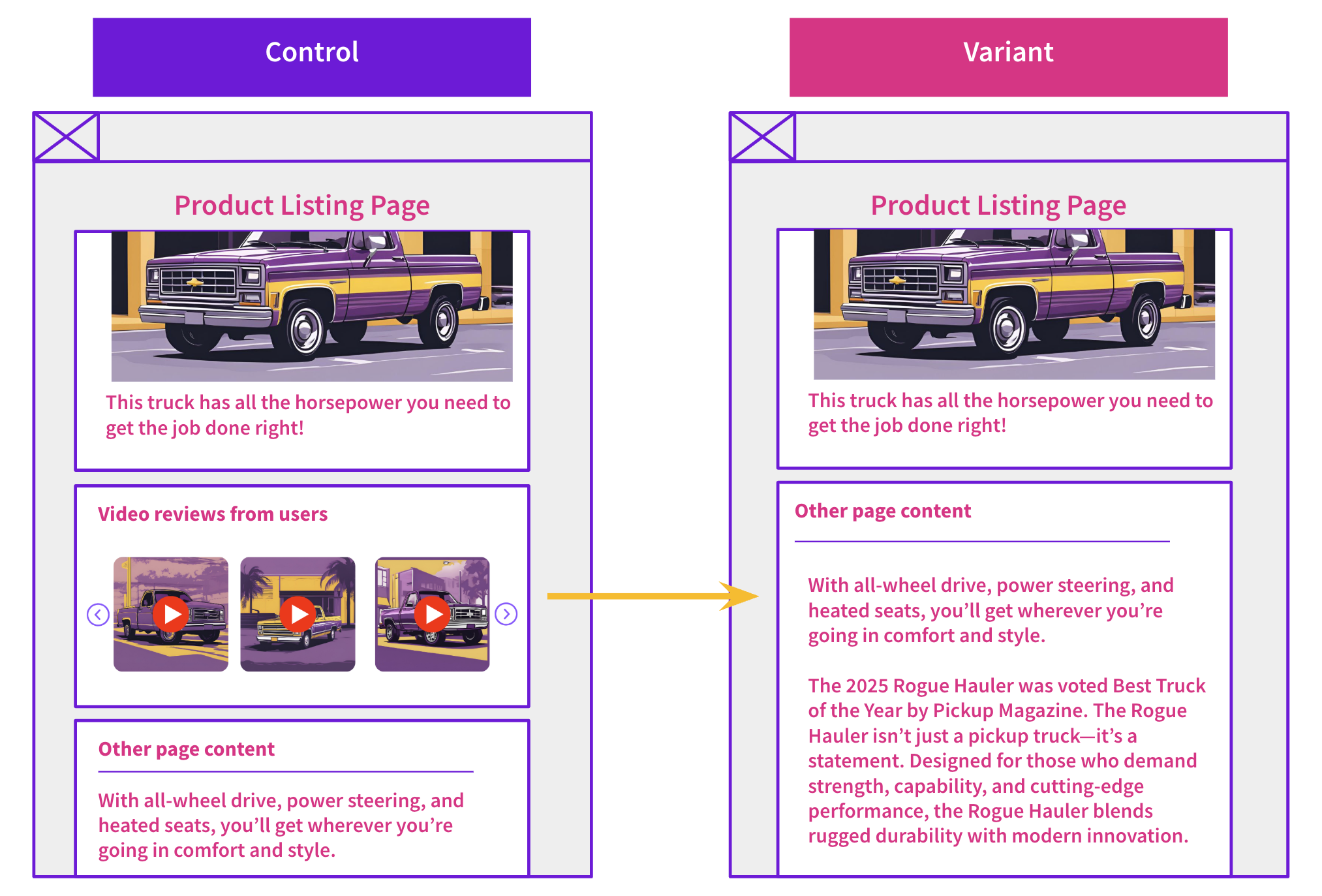

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- By detecting changes in performance of the variant pages compared to the control, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external impact.

- The statistical analysis compares the actual outcome with a forecast and includes a confidence interval, so we know how certain we can be that the effect is real.

- We measure the impact on organic traffic in order to capture changes in rankings and/or clickthrough rate.
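The forecast-and-confidence-interval idea in the list above can be illustrated with a deliberately simplified sketch. This is not SearchPilot's model, which this article does not describe: it assumes a hypothetical forecast in which variant traffic keeps the same ratio to control traffic that it had before the test. It then uses a normal-approximation interval on daily relative differences, treating days as independent, which real traffic data rarely is.

```python
from math import sqrt
from statistics import mean, stdev


def estimate_uplift(control_pre, variant_pre, control_post, variant_post, z=1.96):
    """Estimate the variant's effect relative to a control-based forecast.

    Hypothetical, simplified model for illustration only.
    Returns (mean relative difference, (lower, upper) confidence bounds).
    """
    if len(control_post) != len(variant_post) or len(variant_post) < 2:
        raise ValueError("need matching post-test series with at least two days")
    # Ratio of variant to control organic traffic before the test started.
    ratio = sum(variant_pre) / sum(control_pre)
    # Forecast: the traffic the variant pages would have had with no change,
    # so sitewide or seasonal shifts that hit the control are reflected too.
    forecast = [ratio * c for c in control_post]
    # Daily relative difference between actual and forecast variant traffic.
    diffs = [(actual - expected) / expected
             for actual, expected in zip(variant_post, forecast)]
    centre = mean(diffs)
    # Normal-approximation interval; z=1.96 gives roughly 95% two-sided.
    half_width = z * stdev(diffs) / sqrt(len(diffs))
    return centre, (centre - half_width, centre + half_width)


if __name__ == "__main__":
    effect, (low, high) = estimate_uplift(
        [100, 100], [100, 100], [100, 100, 100], [90, 95, 100])
    print(effect, low, high, "detectable" if not low <= 0 <= high else "not detectable")
```

With these made-up numbers the point estimate is -5% and the 95% interval is about (-0.107, 0.007). Because it includes zero, this counts as "no detectable impact" despite the negative estimate. Passing `z=1.2816` (roughly an 80% two-sided level) narrows the interval to about (-0.087, -0.013), which is the kind of "negative at a lower level of confidence" outcome described in the case study above.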

Read more about how SEO A/B testing works or get a demo of the SearchPilot platform.