Start here: how our SEO split tests work

If you aren't familiar with how we run the controlled SEO experiments behind all our case studies, you may find it useful to read the explanation at the end of this article before digging into the details of the case study below.

We've written about FAQ schema several times before. In our testing, adding FAQ schema has often produced good returns across a range of industries. In July of last year, we reported that "67% of all FAQ schema tests we've run have resulted in a positive impact", and the trend has continued since then, with most of our recent FAQ tests also coming back positive.

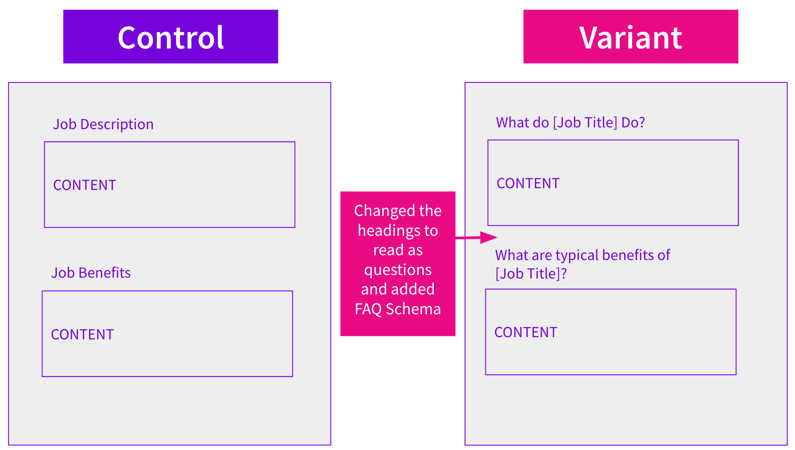

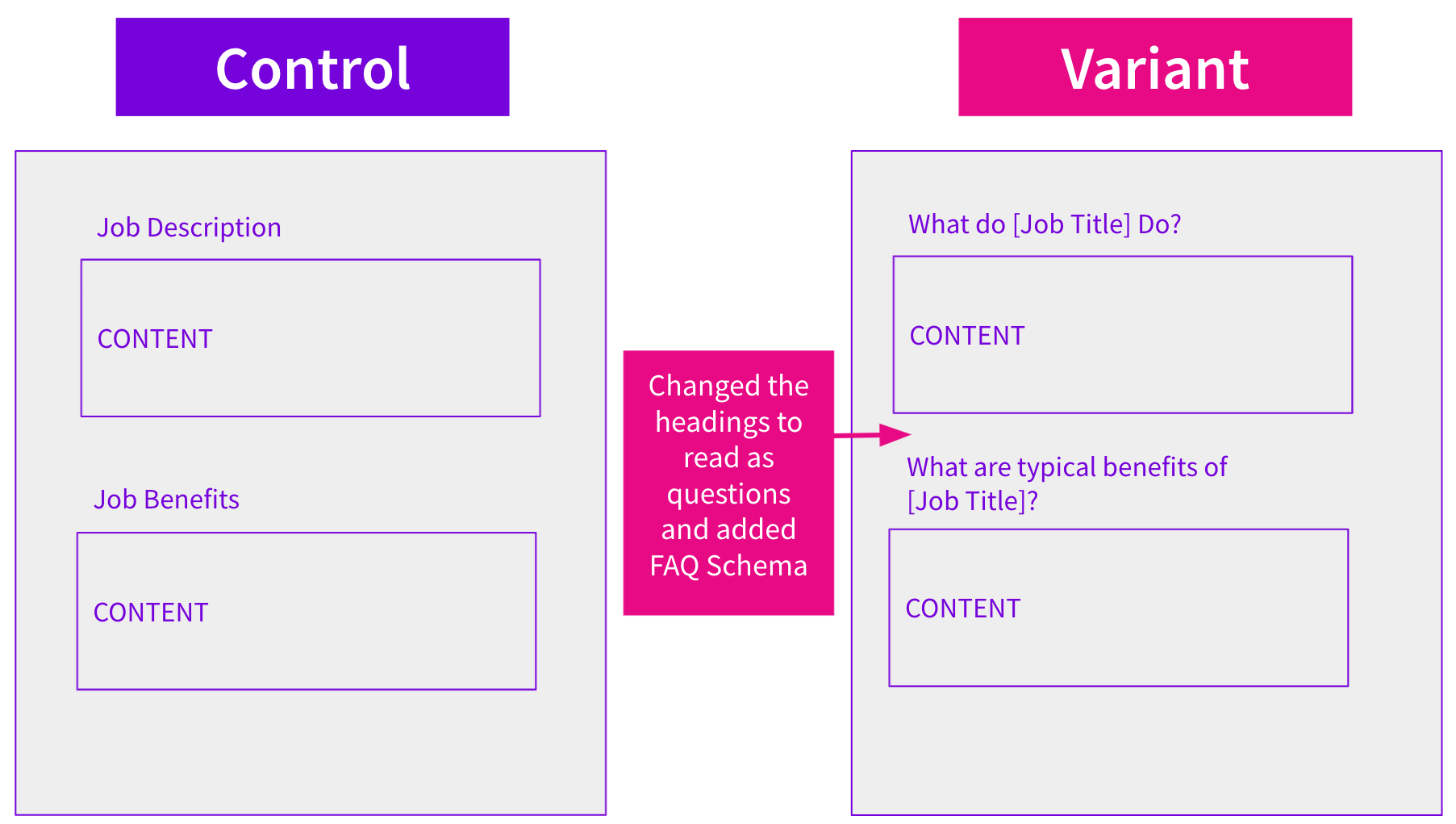

This week, we're revisiting FAQ schema. A customer wanted to test whether FAQ schema would improve organic search performance on their informational job pages. To do this, we used SearchPilot to rewrite the pages' headings as questions and inject FAQ schema.

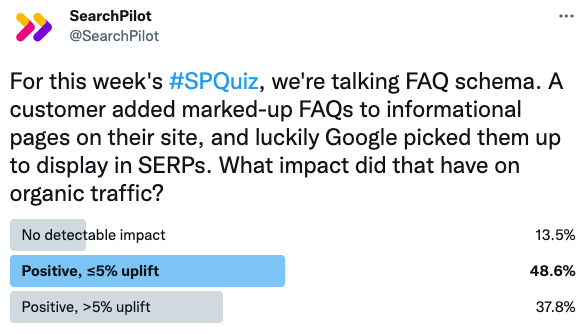

As usual, we asked our Twitter followers to weigh in on the result in our weekly #SPQuiz. Because so many FAQ tests have been positive, this time we asked them to guess the size of the uplift the change produced on these pages.

Most voters thought this test would have a positive impact, and most predicted an increase in organic traffic of less than 5%. It turns out the majority was right: the test was positive, but the uplift was below 5%. Read the full case study below to see how it played out.

The Case Study

For this test, we made a small adjustment to the page headings to accommodate FAQ schema: we rewrote the heading of each section from a statement into a question. The existing content beneath each heading served as the answer. This change on its own could have been worth testing. With the headings turned into questions, we then applied FAQ schema to the page and launched the test.
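To make the change concrete, here is a minimal Python sketch of the kind of markup involved: it turns question-style headings and the existing content beneath them into a schema.org `FAQPage` JSON-LD object and wraps it in a script tag. This is an illustration only, not how SearchPilot injects the markup. The function names `build_faq_jsonld` and `to_script_tag` and the sample job-page content are hypothetical; the `FAQPage`, `Question` and `Answer` types and the `mainEntity`/`acceptedAnswer` properties are standard schema.org vocabulary.

```python
import json


def build_faq_jsonld(sections):
    """Build a schema.org FAQPage object from (question, answer) pairs.

    Hypothetical helper: `sections` is a list of (heading, body_text) tuples
    where each heading has already been rewritten as a question.
    """
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                # The existing section content becomes the answer text.
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in sections
        ],
    }


def to_script_tag(data):
    """Serialize the object as a JSON-LD <script> element for the page."""
    # Escape "</" as "<\/" (still valid JSON) so answer text cannot
    # prematurely close the surrounding <script> element.
    payload = json.dumps(data, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'


if __name__ == "__main__":
    # Hypothetical sections from an informational job page.
    sections = [
        ("What does a data analyst do?",
         "Data analysts collect, clean and interpret data to answer business questions."),
        ("How much does a data analyst earn?",
         "Pay varies by location, seniority and industry."),
    ]
    print(to_script_tag(build_faq_jsonld(sections)))
```

Keeping the answers as the content already on the page, rather than writing new copy, mirrors the approach described above, where only the headings changed before the schema was added.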

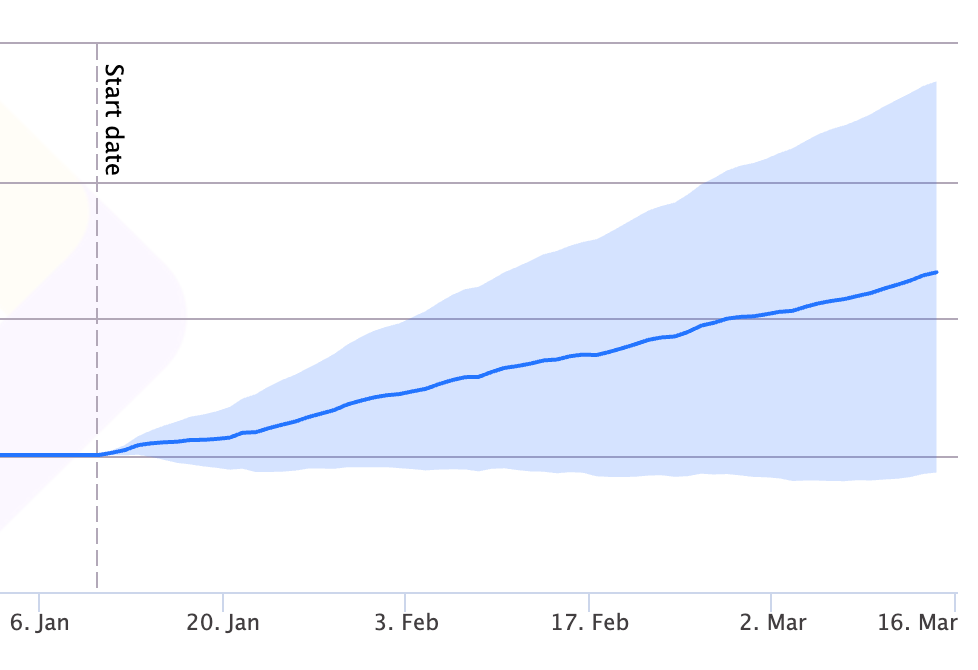

This was the resulting impact on organic traffic:

After six weeks of collecting test data, we found that the test was positive at the 95% confidence level, with an estimated uplift in organic traffic of 3%.

Although we were able to rewrite the headings as questions, they weren't worded as naturally as they would have been had the content been written as frequently asked questions from the start. Even so, the variant pages earned FAQ rich snippets on Google during the test period.

To get more insights from our testing, sign up for our case study mailing list, and feel free to get in touch if you'd like to learn more about this test or about our split-testing platform in general.

How our SEO split tests work

The most important thing to know is that our case studies are based on controlled experiments with control and variant pages:

- Because we measure the performance of the variant pages relative to the control pages, we know that the measured effect was not caused by seasonality, sitewide changes, Google algorithm updates, competitor changes, or any other external factor.

- The statistical analysis compares the actual outcome to a forecast and comes with a confidence interval, so we know how confident we can be that the effect is real.

- We measure the impact on organic traffic in order to capture changes to rankings and/or changes to clickthrough rate.
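The idea of comparing an outcome against a forecast can be illustrated with a deliberately simplified Python sketch. It compares a variant group's actual daily organic sessions with a forecast of what those sessions would have been without the change, then reports a percentage uplift with an approximate 95% confidence interval. This is not SearchPilot's statistical model, which is not described here. The function `estimate_uplift`, the normal approximation on daily log-ratios, and the sample numbers are all hypothetical. Treating days as independent also ignores the weekly cycles and autocorrelation that real traffic has.

```python
import math
import statistics


def _to_pct(log_ratio):
    """Convert a log-ratio back into a percentage change."""
    return (math.exp(log_ratio) - 1) * 100


def estimate_uplift(actual, forecast, z=1.96):
    """Hypothetical helper: % uplift of actual vs. forecast, with a rough CI.

    Simplifying assumption: daily log-ratios are independent samples, and
    z=1.96 gives an approximate 95% interval under a normal approximation.
    """
    if len(actual) != len(forecast) or len(actual) < 2:
        raise ValueError("need two equal-length series of at least 2 days")
    # Daily log-ratio of observed traffic to the traffic the model expected.
    log_ratios = [math.log(a / f) for a, f in zip(actual, forecast)]
    mean = statistics.mean(log_ratios)
    se = statistics.stdev(log_ratios) / math.sqrt(len(log_ratios))
    return _to_pct(mean), (_to_pct(mean - z * se), _to_pct(mean + z * se))


if __name__ == "__main__":
    # Hypothetical daily organic sessions for the variant pages, alongside
    # a forecast of what they would have received without the change.
    forecast = [1000, 980, 1010, 995, 1020, 990, 1005]
    actual = [1035, 1005, 1040, 1020, 1055, 1015, 1040]
    uplift, (low, high) = estimate_uplift(actual, forecast)
    print(f"uplift {uplift:.1f}% (95% CI {low:.1f}% to {high:.1f}%)")
    if low > 0:
        print("positive at 95% confidence")
    elif high < 0:
        print("negative at 95% confidence")
    else:
        print("inconclusive")
```

Working in log space keeps the interval symmetric for multiplicative changes in traffic. The result counts as positive at 95% confidence only when the whole interval sits above zero.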

Read more about how SEO testing works or get a demo of the SearchPilot platform.